Data Science

Contents

- 1 Data Science and Machine Learning Courses

- 2 What is Machine Learning

- 3 Text Classification

- 4 Sentiment Analysis

- 5 Fake news detection

- 6 Confusion matrix

- 7 Document Term Matrices

- 8 Collecting data from Twitter using R

- 9 R Programming

- 10 Anaconda

- 11 RapidMiner

- 12 Jupyter Notebook

- 13 RTextTools - A Supervised Learning Package for Text Classification

Data Science and Machine Learning Courses

- www.udemy.com

What is Machine Learning

When trying to find a definition for ML, I realized that many experts agree that there is no standard definition of ML.

This post explains the definition of ML well: https://machinelearningmastery.com/what-is-machine-learning/

These videos are also excellent for understanding what ML is:

- https://www.youtube.com/watch?v=f_uwKZIAeM0

- https://www.youtube.com/watch?v=ukzFI9rgwfU

- https://www.youtube.com/watch?v=WXHM_i-fgGo

- https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v

One of the most cited definitions is Tom Mitchell's. In his book Machine Learning, he provides a definition in the opening line of the preface:

Tom Mitchell

The field of machine learning is concerned with the question of how to construct computer programs that automatically improve with experience.

In short, ML is about writing computer programs that improve themselves with experience.

Tom Mitchell also provides a more complex and formal definition:

Tom Mitchell

A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.

Don't let the definition of terms scare you off; this is a very useful formalism. It can be used as a design tool to help us think clearly about:

- E: What data to collect.

- T: What decisions the software needs to make.

- P: How we will evaluate its results.

Suppose your email program watches which emails you do or do not mark as spam, and based on that learns how to better filter spam. In this case (example from https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v):

- E: Watching you label emails as spam or not spam.

- T: Classifying emails as spam or not spam.

- P: The number (or fraction) of emails correctly classified as spam/not spam.

Types of Machine Learning

Supervised Learning

https://en.wikipedia.org/wiki/Supervised_learning

- Supervised learning is the machine learning task of learning a function that maps an input to an output based on example input-output pairs.

- In other words, it infers a function from labeled training data consisting of a set of training examples.

- In supervised learning, each example is a pair consisting of an input object (typically a vector) and a desired output value (also called the supervisory signal).

A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples.

https://machinelearningmastery.com/supervised-and-unsupervised-machine-learning-algorithms/

The majority of practical machine learning uses supervised learning.

Supervised learning is when you have input variables (x) and an output variable (Y) and you use an algorithm to learn the mapping function from the input to the output.

Y = f(X)

The goal is to approximate the mapping function so well that, when you have new input data (x), you can predict the output variable (Y) for that data.

It is called supervised learning because the process of an algorithm learning from the training dataset can be thought of as a teacher supervising the learning process. We know the correct answers, the algorithm iteratively makes predictions on the training data and is corrected by the teacher. Learning stops when the algorithm achieves an acceptable level of performance.
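The idea of learning Y = f(X) from labeled examples can be sketched in a few lines of base R. This is only an illustration under simple assumptions: the toy data are made up, and a linear model (`lm`) stands in for whatever algorithm is actually used.

```r
# Toy labeled data (made up): inputs x with known outputs y, roughly y = 2*x + 1.
train <- data.frame(x = c(1, 2, 3, 4, 5),
                    y = c(3.1, 4.9, 7.2, 9.0, 10.8))

# Learn an approximation of the mapping function f from the labeled examples.
model <- lm(y ~ x, data = train)

# Use the learned function to predict the output for new, unseen inputs.
new_data <- data.frame(x = c(6, 7))
predictions <- unname(predict(model, newdata = new_data))
print(predictions)
```

The training data play the role of the "teacher": the model is fitted so that its predictions on the known examples are as close as possible to the known answers.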

https://www.datascience.com/blog/supervised-and-unsupervised-machine-learning-algorithms

Supervised machine learning is the more commonly used of the two (supervised and unsupervised). It includes such algorithms as linear and logistic regression, multi-class classification, and support vector machines. Supervised learning is so named because the data scientist acts as a guide to teach the algorithm what conclusions it should come up with. It’s similar to the way a child might learn arithmetic from a teacher. Supervised learning requires that the algorithm’s possible outputs are already known and that the data used to train the algorithm is already labeled with correct answers. For example, a classification algorithm will learn to identify animals after being trained on a dataset of images that are properly labeled with the species of the animal and some identifying characteristics.

Supervised Classification

Supervised Classification with R: http://www.cmap.polytechnique.fr/~lepennec/R/Learning/Learning.html

Unsupervised Learning

Reinforcement Learning

Text Classification

https://monkeylearn.com/text-classification/

Text classification is the process of assigning tags or categories to text according to its content. It’s one of the fundamental tasks in Natural Language Processing (NLP) with broad applications such as sentiment analysis, topic labeling, spam detection, and intent detection.

Unstructured data in the form of text is everywhere: emails, chats, web pages, social media, support tickets, survey responses, and more. Text can be an extremely rich source of information, but extracting insights from it can be hard and time-consuming due to its unstructured nature. Businesses are turning to text classification for structuring text in a fast and cost-efficient way to enhance decision-making and automate processes.

But, what is text classification? How does text classification work? What are the algorithms used for classifying text? What are the most common business applications?

Text classification (a.k.a. text categorization or text tagging) is the task of assigning a set of predefined categories to free-text. Text classifiers can be used to organize, structure, and categorize pretty much anything. For example, new articles can be organized by topics, support tickets can be organized by urgency, chat conversations can be organized by language, brand mentions can be organized by sentiment, and so on.

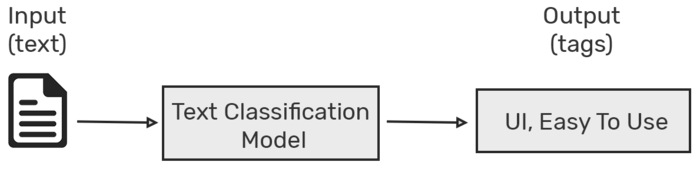

As an example, take a look at the following text:

"The user interface is quite straightforward and easy to use."

A classifier can take this text as an input, analyze its content, and then automatically assign relevant tags, such as UI and Easy To Use, that represent this text.

There are many approaches to automatic text classification, which can be grouped into three different types of systems:

- Rule-based systems

- Machine Learning based systems

- Hybrid systems

Machine Learning Based Systems

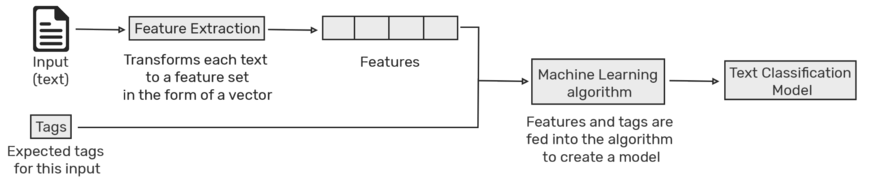

Instead of relying on manually crafted rules, text classification with machine learning learns to make classifications based on past observations. By using pre-labeled examples as training data, a machine learning algorithm can learn the different associations between pieces of text and that a particular output (i.e. tags) is expected for a particular input (i.e. text).

The first step towards training a classifier with machine learning is feature extraction: a method is used to transform each text into a numerical representation in the form of a vector. One of the most frequently used approaches is bag of words, where a vector represents the frequency of a word in a predefined dictionary of words.

For example, if we have defined our dictionary to have the following words {This, is, the, not, awesome, bad, basketball}, and we wanted to vectorize the text “This is awesome”, we would have the following vector representation of that text: (1, 1, 0, 0, 1, 0, 0).
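The vectorization above can be reproduced with a short base R sketch. The helper name `bag_of_words` is hypothetical, and the dictionary is lower-cased here so that matching is case-insensitive (an assumption, not something the source specifies).

```r
# Dictionary from the example above, lower-cased for case-insensitive matching.
dictionary <- c("this", "is", "the", "not", "awesome", "bad", "basketball")

# Hypothetical helper: count how often each dictionary word occurs in a text.
bag_of_words <- function(text, dictionary) {
  tokens <- strsplit(tolower(text), "[^a-z]+")[[1]]  # split on non-letters
  tokens <- tokens[tokens != ""]                     # drop empty tokens
  counts <- table(factor(tokens, levels = dictionary))
  setNames(as.integer(counts), dictionary)
}

vec <- bag_of_words("This is awesome", dictionary)
print(vec)
```

Words that are not in the dictionary are simply ignored, which is why the dictionary has to be fixed before any text is vectorized.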

Then, the machine learning algorithm is fed with training data that consists of pairs of feature sets (vectors for each text example) and tags (e.g. sports, politics) to produce a classification model:

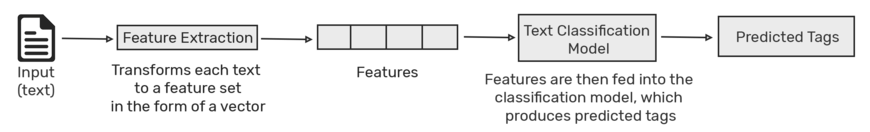

Once it’s trained with enough training samples, the machine learning model can begin to make accurate predictions. The same feature extractor is used to transform unseen text to feature sets which can be fed into the classification model to get predictions on tags (e.g. sports, politics):

Text Classification Algorithms

Some of the most popular machine learning algorithms for creating text classification models include the naive bayes family of algorithms, support vector machines, and deep learning.

Naive Bayes

Support vector machines

Deep learning

Sentiment Analysis

Sentiment Analysis with R

https://github.com/Twitter-Sentiment-Analysis/R

https://github.com/jayskhatri/Sentiment-Analysis-of-Twitter

https://hackernoon.com/text-processing-and-sentiment-analysis-of-twitter-data-22ff5e51e14c

http://tabvizexplorer.com/sentiment-analysis-using-r-and-twitter/

https://analyzecore.com/2017/02/08/twitter-sentiment-analysis-doc2vec/

https://github.com/rbhatia46/Twitter-Sentiment-Analysis-Web

http://dataaspirant.com/2018/03/22/twitter-sentiment-analysis-using-r/

https://www.r-bloggers.com/twitter-sentiment-analysis-with-r/

Training a machine to classify tweets according to sentiment

https://rpubs.com/imtiazbdsrpubs/236087

# https://rpubs.com/imtiazbdsrpubs/236087

library(twitteR)

library(base64enc)

library(tm)

library(RTextTools)

library(qdapRegex)

library(dplyr)

# -------------------------------------------------------------------------- -

# Authentication through api to get the data ----

# -------------------------------------------------------------------------- -

# Credentials are read from environment variables (the variable names are placeholders); never publish real keys in code

consumer_key <- Sys.getenv("TWITTER_CONSUMER_KEY")

consumer_secret <- Sys.getenv("TWITTER_CONSUMER_SECRET")

access_token <- Sys.getenv("TWITTER_ACCESS_TOKEN")

access_secret <- Sys.getenv("TWITTER_ACCESS_SECRET")

# After running the next command, type 1 to select Yes when prompted:

setup_twitter_oauth(consumer_key, consumer_secret, access_token, access_secret)

## [1] "Using direct authentication"

# -------------------------------------------------------------------------- -

# Getting Tweets from the api and storing the data based on hashtags ----

# -------------------------------------------------------------------------- -

hashtags = c('#ClimateChange', '#Trump', '#Demonetization', '#Kejriwal', '#Technology')

totalTweets= list()

for (hashtag in hashtags){

tweets = searchTwitter(hashtag, n=20 ) # Search for tweets (n specifies the number of tweets)

tweets = twListToDF(tweets) # Convert from list to dataframe

tweets <- tweets %>% # Add a column recording which hashtag the tweet came from

mutate(hashtag = hashtag)

tweets.df = tweets[,1] # Assign tweets for cleaning

tweets.df = gsub("(RT|via)((?:\\b\\W*@\\w+)+)", "", tweets.df);head(tweets.df)

tweets.df = gsub("@\\w+", "", tweets.df);head(tweets.df) # Regex for removing @user

tweets.df = gsub("[[:punct:]]", "", tweets.df);head(tweets.df) # Regex for removing punctuation mark

tweets.df = gsub("[[:digit:]]", "", tweets.df);head(tweets.df) # Regex for removing numbers

tweets.df = gsub("http\\w+", "", tweets.df);head(tweets.df) # Regex for removing links

tweets.df = gsub("\n", " ", tweets.df);head(tweets.df) # Regex for removing new line (\n)

tweets.df = gsub("[ \t]{2,}", " ", tweets.df);head(tweets.df) # Regex for collapsing runs of two or more spaces or tabs into one space

tweets.df = gsub("[^[:alnum:]///' ]", " ", tweets.df) # Keep only alpha numeric

tweets.df = iconv(tweets.df, "latin1", "ASCII", sub="") # Keep only ASCII characters

tweets.df = gsub("^\\s+|\\s+$", "", tweets.df);head(tweets.df) # Remove leading and trailing white space

tweets[,1] = tweets.df # Save in Data frame

totalTweets[[paste0(gsub('#','',hashtag))]]=tweets

}

# Combining all the tweets into a single corpus

HashTagTweetsCombined = do.call("rbind", totalTweets)

dim(HashTagTweetsCombined)

str(HashTagTweetsCombined)


# -------------------------------------------------------------------------- -

# Text preprocessing using the qdap regex package ----

# -------------------------------------------------------------------------- -

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_url,pattern=pastex("@rm_twitter_url", "@rm_url")) # Cleaning the text: removing URLs

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_email) # Removes email addresses from the tweet text

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_tag) # Removes @user tags from the tweet text (rm_hash is the qdapRegex function for hashtags)

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_number) # Removes numbers from the text

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_non_ascii) # Removes non ascii characters

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_white) # Removes extra white spaces

HashTagTweetsCombined$text=sapply(HashTagTweetsCombined$text,rm_date) # Removes dates from the text

# -------------------------------------------------------------------------- -

# Manually label the polarity of the tweets into -neutral- -positive- and -negative- sentiments ----

# -------------------------------------------------------------------------- -

# Storing the file in local location so as to manually label the polarity

# write.table(HashTagTweetsCombined,"/home/anapaula/HashTagTweetsCombined.csv", append=T, row.names=F, col.names=T, sep=",")

# Reloading the file again after manually labelling

HashTagTweetsCombined=read.csv("/home/anapaula/HashTagTweetsCombined.csv")

# HashTagTweetsCombined$sentiment=factor(HashTagTweetsCombined$sentiment)

# -------------------------------------------------------------------------- -

# Splitting the data into train and test data ----

# -------------------------------------------------------------------------- -

data <- HashTagTweetsCombined %>% # Taking just the columns we are interested in (slicing the data)

select(text, sentiment, hashtag)

set.seed(16102016) # To fix the sample

# Randomly take 70% of the rows for training (sampling without replacement); the remaining 30% will be used for testing

samp_id = sample(1:nrow(data), # do ?sample to examine the sample() func

round(nrow(data)*.70),

replace = F)

train = data[samp_id,] # 70% of the data: training set (examine the structure of samp_id)

test = data[-samp_id,] # Remaining 30% of the data: test set

dim(train) ; dim(test)

# Stack the data sets so that rows 1..nrow(train) are training rows and the remaining rows are test rows

train.data = rbind(train,test)

# -------------------------------------------------------------------------- -

# Text processing ----

# -------------------------------------------------------------------------- -

train.data$text = tolower(train.data$text) # Convert to lower case

text = train.data$text # Taking just the text column

text = removePunctuation(text) # Remove punctuation marks

text = removeNumbers(text) # Remove numbers

text = stripWhitespace(text) # Remove blank space

# -------------------------------------------------------------------------- -

# Creating the document term matrix ----

# -------------------------------------------------------------------------- -

# https://en.wikipedia.org/wiki/Document-term_matrix

cor = Corpus(VectorSource(text)) # Create text corpus

dtm = DocumentTermMatrix(cor, # Create DTM

control = list(weighting =

function(x)

weightTfIdf(x, normalize = F))) # TF-IDF weighting (not normalized)

dim(dtm)

# sorts a sparse matrix in triplet format (i,j,v) first by i, then by j (https://groups.google.com/forum/#!topic/rtexttools-help/VILrGoRpRrU)

# Args:

# working.dtm: a sparse matrix in i,j,v format using $i $j and $v respectively. Any other variables that may exist in the sparse matrix are not operated on, and will be returned as-is.

# Returns:

# A sparse matrix sorted by i, then by j.

ResortDtm <- function(working.dtm) {

working.df <- data.frame(i = working.dtm$i, j = working.dtm$j, v = working.dtm$v) # create a data frame comprised of i,j,v values from the sparse matrix passed in.

working.df <- working.df[order(working.df$i, working.df$j), ] # sort the data frame first by i, then by j.

working.dtm$i <- working.df$i # reassign the sparse matrix' i values with the i values from the sorted data frame.

working.dtm$j <- working.df$j # ditto for j values.

working.dtm$v <- working.df$v # ditto for v values.

return(working.dtm) # pass back the (now sorted) sparse matrix.

}

dtm <- ResortDtm(dtm)

# Coded labels

training_codes = train.data$sentiment

# -------------------------------------------------------------------------- -

# Creating the model: Testing different models and choosing the best model ----

# -------------------------------------------------------------------------- -

# After many iterations and testing with models like RF, TREE, Bagging, maxent, and slda, we found that GLMNET gave the highest accuracy

# Creates a 'container' obj for training, classifying, and analyzing docs

container <- create_container(dtm,

t(training_codes), # Labels or the Y variable / outcome we want to train on

trainSize = 1:nrow(train),

testSize = (nrow(train)+1):nrow(train.data),

virgin = FALSE) # Whether to treat the classification data as 'virgin' (unlabeled) data or not

# virgin = FALSE means the test rows also have known labels, so predictions can be evaluated against them

str(container) # View struc of the container obj; is a list of training n test data

# Creating the model

models <- train_models(container, # ?train_models; makes a model object using the specified algorithms.

algorithms=c("GLMNET")) # "MAXENT","SVM","GLMNET","SLDA","TREE","BAGGING","BOOSTING","RF"

# -------------------------------------------------------------------------- -

# Makes predictions from a train_models() object ----

# -------------------------------------------------------------------------- -

results <- classify_models(container, models)

head(results)

# -------------------------------------------------------------------------- -

# Determining the accuracy of prediction results ----

# -------------------------------------------------------------------------- -

# Building a confusion matrix

out = data.frame(model_sentiment = results$GLMNET_LABEL, # Rounded probability == model's prediction of Y

model_prob = results$GLMNET_PROB,

actual_sentiment = train.data$sentiment[(nrow(train)+1):nrow(train.data)]) # Actual value of Y

dim(out); head(out);

summary(out) # How many tweets fall into each sentiment class?

(z = as.matrix(table(out[,1], out[,3]))) # Display the confusion matrix.

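(pct = round((sum(diag(z))/sum(z))*100, 2)) # Prediction accuracy in % terms: the diagonal holds the correct predictions for every class (assumes rows and columns list the same labels in the same order)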
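Because the tweets are labeled with three sentiments, accuracy is best computed from the whole diagonal of the confusion matrix rather than from two cells. A minimal sketch with made-up labels (the vectors `predicted` and `actual` are hypothetical, not output of the script above):

```r
# Hypothetical predicted and actual labels for six test tweets.
levels3   <- c("negative", "neutral", "positive")
predicted <- factor(c("positive", "negative", "neutral",
                      "positive", "negative", "neutral"), levels = levels3)
actual    <- factor(c("positive", "negative", "positive",
                      "positive", "neutral", "neutral"), levels = levels3)

# Rows are predictions, columns are true labels; fixing the factor levels
# keeps the table square even if a class is never predicted.
conf_mat <- table(predicted, actual)
print(conf_mat)

# Correct predictions sit on the diagonal.
accuracy <- sum(diag(conf_mat)) / sum(conf_mat)
print(round(accuracy * 100, 2))
```

Setting the same factor levels on both vectors is the design choice that matters here: without it, a class missing from the predictions would produce a non-square table and a misleading diagonal.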

Social Media Sentiment Analysis

https://www.dezyre.com/article/top-10-machine-learning-projects-for-beginners/397

https://elitedatascience.com/machine-learning-projects-for-beginners#social-media

https://en.wikipedia.org/wiki/Sentiment_analysis

https://en.wikipedia.org/wiki/Social_media_mining

Sentiment analysis, also known as opinion mining, opinion extraction, sentiment mining or subjectivity analysis, is the process of determining whether a piece of online writing (social media mentions, blog posts, news sites, or any other piece) expresses a positive, negative, or neutral attitude. Source: https://brand24.com/blog/twitter-sentiment-analysis/

Motivation

Social media has almost become synonymous with «big data» due to the sheer amount of user-generated content.

Mining this rich data can provide unprecedented ways to keep a pulse on opinions, trends, and public sentiment (Facebook, Twitter, YouTube, WeChat...).

Social media data will become even more relevant for marketing, branding, and business as a whole.

As you can see, data analysis (machine learning) for this kind of research is a tool that will become more and more important in the coming years.

Methodology

Mining Social Media data

The first part of the project will be Mining Social Media data

- To start the project we first need to choose where to get the data from. Many sources suggest Twitter as the classic entry point for practicing. Here is a tutorial on mining Twitter data with Python: https://marcobonzanini.com/2015/03/02/mining-twitter-data-with-python-part-1/

Twitter Data Mining

https://www.toptal.com/python/twitter-data-mining-using-python

https://marcobonzanini.com/2015/03/02/mining-twitter-data-with-python-part-1/

Why Twitter data?

Twitter is a gold mine of data. Unlike other social platforms, almost every user’s tweets are completely public and pullable. This is a huge plus if you’re trying to get a large amount of data to run analytics on. Twitter data is also pretty specific. Twitter’s API allows you to do complex queries like pulling every tweet about a certain topic within the last twenty minutes, or pull a certain user’s non-retweeted tweets.

A simple application of this could be analyzing how your company is received in the general public. You could collect the last 2,000 tweets that mention your company (or any term you like), and run a sentiment analysis algorithm over it.

We can also target users that specifically live in a certain location, which is known as spatial data. Another application of this could be to map the areas on the globe where your company has been mentioned the most.

As you can see, Twitter data can be a large door into the insights of the general public, and how they receive a topic. That, combined with the openness and the generous rate limiting of Twitter’s API, can produce powerful results.

Twitter Developer Account:

In order to use Twitter’s API, we have to create a developer account on the Twitter apps site.

Log in or make a Twitter account at https://apps.twitter.com/

As of July 2018, you must apply for a Twitter developer account and be approved before you may create new apps. Once approved, you will be able to create new apps from developer.twitter.com.

Home Twitter Developer Account: https://developer.twitter.com/

Tutorials: https://developer.twitter.com/en/docs/tutorials

Docs: https://developer.twitter.com/en/docs

How to Register a Twitter App: https://iag.me/socialmedia/how-to-create-a-twitter-app-in-8-easy-steps/

Storing the data

Secondly, we will need to store the data.

Analyzing the data

The third part of the project will be the analysis of the data. This is where machine learning will be implemented.

- In this part we first need to decide what we want to analyze. There are many examples:

- Example 1 - Business: companies use opinion mining tools to find out what consumers think of their product, service, brand, marketing campaigns or competitors.

- Example 2 - Public actions: opinion analysis is used to analyze online reactions to social and cultural phenomena, for example, the premiere episode of the Game of Thrones, or Oscars.

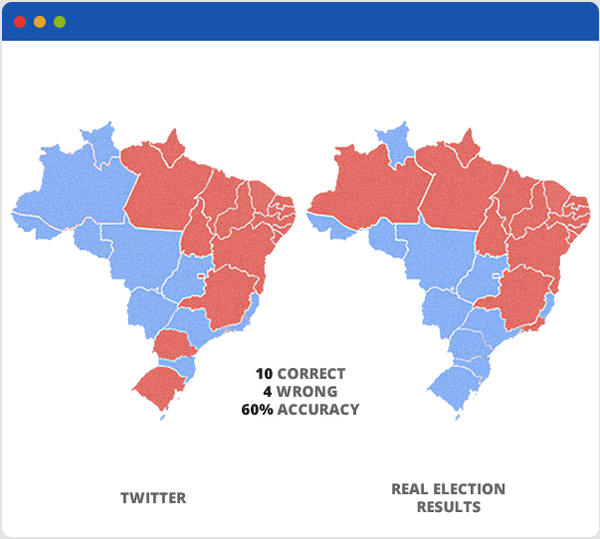

- Example 3 - Politics: In politics, sentiment analysis is used to keep track of society’s opinions on the government, politicians, statements, policy changes, or even to predict election results.

- Here is a link to a nice article I found. «This article describes the techniques that effectively analyzed Twitter Trend Topics to predict, as a sample test case, regional voting patterns in the 2014 Brazilian presidential election»: https://www.toptal.com/data-science/social-network-data-mining-for-predictive-analysis

- In essence, the author analyzed Twitter data from the days prior to the election and produced a map of the predicted regional voting patterns.

- Example 4 - Health: One topic I find really interesting is Using Twitter Data and Sentiment Analysis to Study Diseases Dynamics. The authors write: «In this work, we extract information about diseases from Twitter with spatiotemporal constraints, i.e. considering a specific geographic area during a given period. We show our first results for a monitoring tool that allows studying the dynamics of diseases» https://www.researchgate.net/publication

Plotting the data

If we decide to plot Geographical trends of data, we could present the data using JavaScript like this (Click into the regions and countries): http://perso.sinfronteras.ws/1-archivos/javascript_maps-ammap/continentes.html

Some remarks

- It will be up to us (as a team) to determine what we want to analyze. However, we don't need to decide RIGHT NOW what we are going to analyze. A big part of the methodology can be done without knowing it. We have enough time to think about a nice case study...

- Another important feature of this work is that we can make it as complex as we want. We could start with something simple, like determining the most relevant Twitter topic in a geographic area (Dublin, for example) during a given period. Following a similar methodology, we could then implement a more complex or relevant analysis, like the one about disease dynamics, or whichever we decide as a team.

Some references

https://brand24.com/blog/twitter-sentiment-analysis/

https://elitedatascience.com/machine-learning-projects-for-beginners#social-media

https://www.dezyre.com/article/top-10-machine-learning-projects-for-beginners/397

https://www.toptal.com/data-science/social-network-data-mining-for-predictive-analysis

Fake news detection

Supervised Machine Learning for Fake News Detection

Fake news Challenge

http://www.fakenewschallenge.org/

Exploring how artificial intelligence technologies could be leveraged to combat fake news.

Formal Definition

- Input: A headline and a body text - either from the same news article or from two different articles.

- Output: Classify the stance of the body text relative to the claim made in the headline into one of four categories:

- Agrees: The body text agrees with the headline.

- Disagrees: The body text disagrees with the headline.

- Discusses: The body text discusses the same topic as the headline, but does not take a position

- Unrelated: The body text discusses a different topic than the headline

Stance Detection dataset for FNC1

https://github.com/FakeNewsChallenge/fnc-1

Winner team

First place - Team SOLAT in the SWEN

https://github.com/Cisco-Talos/fnc-1

The data provided is (headline, body, stance) instances, where stance is one of {unrelated, discuss, agree, disagree}. The dataset is provided as two CSVs:

- train_bodies.csv : This file contains the body text of articles (the articleBody column) with corresponding IDs (Body ID)

- train_stances.csv : This file contains the labeled stances (the Stance column) for pairs of article headlines (Headline) and article bodies (Body ID, referring to entries in train_bodies.csv).

Distribution of the data

The distribution of Stance classes in train_stances.csv is as follows:

| rows | unrelated | discuss | agree | disagree |
|---|---|---|---|---|
| 49972 | 0.73131 | 0.17828 | 0.0736012 | 0.0168094 |
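The class imbalance in this table has a practical consequence that a few lines of base R make concrete: a classifier that always answers with the most frequent class already reaches a high accuracy. The sketch below uses only the proportions and row count from the table; the per-class counts are approximations obtained by rounding.

```r
# Class proportions and row count copied from the table above.
stance_share <- c(unrelated = 0.73131, discuss = 0.17828,
                  agree = 0.0736012, disagree = 0.0168094)
n_rows <- 49972

# Approximate number of rows per class (rounded).
approx_counts <- round(stance_share * n_rows)
print(approx_counts)

# A trivial baseline: always predict the most frequent class.
majority_class    <- names(which.max(stance_share))
baseline_accuracy <- max(stance_share)
cat(majority_class, round(baseline_accuracy * 100, 2), "%\n")
```

Any model for this dataset should therefore be compared against roughly 73% accuracy rather than against chance, and per-class measures are more informative than overall accuracy.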

Paper: Fake news detection on social media - A data mining perspective

Paper: Automatic Detection of Fake News in Social Media using Contextual Information

Linguistic approach

The linguistic or textual approach to detecting false information uses techniques that analyze frequency, usage, and patterns in the text. This makes it possible to find similarities with language that is known from particular types of text. Fake news, for example, uses language that is similar to satire and tends to be more emotional and simpler than the language of other articles on the same topic.

Support Vector Machines

A support vector machine (SVM) is a classifier that separates the input data, represented as points in an n-dimensional space, with a hyperplane. It is based on statistical learning theory[59]. Given labeled training data, the algorithm outputs an optimal hyperplane which classifies new examples. The optimal hyperplane is the divider that minimizes the noise sensitivity and maximizes the generalization.

Naive Bayes

Naive Bayes is a family of probabilistic classifiers that assume the features in a dataset are mutually independent given the class[46]. These classifiers are known for being easy to implement, robust, fast and accurate. They are widely used for classification tasks, such as disease diagnosis and e-mail spam filtering.
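To make the independence assumption concrete, here is a minimal multinomial Naive Bayes sketch in base R with add-one (Laplace) smoothing. The training texts, labels and the helper names `tokenize` and `predict_nb` are all made up for illustration; a real project would use a tested package instead.

```r
# Tiny hypothetical training set: texts with spam/ham labels.
train_text  <- c("win money now", "cheap money offer",
                 "meeting at noon", "lunch at noon")
train_label <- c("spam", "spam", "ham", "ham")

tokenize <- function(x) strsplit(tolower(x), " ", fixed = TRUE)[[1]]
vocab    <- unique(unlist(lapply(train_text, tokenize)))
classes  <- unique(train_label)

# Log prior of each class: share of training documents with that label.
log_prior <- setNames(
  as.numeric(log(table(train_label)[classes] / length(train_label))),
  classes)

# Log likelihood of each word given the class, with add-one smoothing.
log_lik <- sapply(classes, function(cl) {
  words  <- unlist(lapply(train_text[train_label == cl], tokenize))
  counts <- as.numeric(table(factor(words, levels = vocab))) + 1
  log(counts / sum(counts))
})
rownames(log_lik) <- vocab

# Score = log prior + sum of word log likelihoods (words treated as independent).
predict_nb <- function(text) {
  words  <- tokenize(text)
  words  <- words[words %in% vocab]  # ignore words never seen in training
  scores <- log_prior + colSums(log_lik[words, , drop = FALSE])
  names(which.max(scores))
}

print(predict_nb("cheap money"))
print(predict_nb("lunch meeting"))
```

Summing log probabilities per word is exactly where the "naive" assumption enters: each word contributes to the score independently of the others.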

Term frequency inverse document frequency

Term frequency-inverse document frequency(TF-IDF) is a weight value often used in information retrieval and gives a statistical measure to evaluate the importance of a word in a document collection or a corpus. Basically, the importance of a word increases proportionally with how many times it appears in a document, but is offset by the frequency of the word in the collection or corpus. Thus a word that appears all the time will have a low impact score, while other less used words will have a greater value associated with them[28]
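The weighting described above can be worked through by hand in base R. This sketch assumes the common variant tf × log2(N / df), where N is the number of documents and df the number of documents containing the term; libraries differ in the exact formula, and the three toy documents are made up.

```r
# Three made-up documents.
docs <- c("the match was great",
          "the match was boring",
          "the election was close")

tokens <- strsplit(docs, " ", fixed = TRUE)
vocab  <- sort(unique(unlist(tokens)))

# Term-frequency matrix: one row per document, one column per term.
tf <- t(sapply(tokens, function(tk) as.integer(table(factor(tk, levels = vocab)))))
colnames(tf) <- vocab

# Document frequency and inverse document frequency of each term.
df  <- colSums(tf > 0)
idf <- log2(length(docs) / df)

# Multiply every column of tf by the idf of its term.
tfidf <- sweep(tf, 2, idf, "*")
print(round(tfidf, 3))
```

Words such as "the" and "was" occur in every document, so their idf is log2(1) = 0 and they drop out, while a word that appears in a single document receives the largest weight.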

N-grams

Sentiment analysis

Contextual approach

Contextual approaches incorporate most of the information that is not text. This includes data about users, such as comments, likes, re-tweets, shares and so on. It can also be information regarding the origin, both who created it and where it was first published. This kind of information lends itself to a more predictive approach than the linguistic one, which can be more deterministic. The contextual clues give a good indication of how the information is being used, and based on this, assumptions can be made.

This approach relies on structured data to be able to make the assumptions, and because of that the usage area is for now limited to Social Media, because of the amount of information that is made public there. You have access to publishers, reactions, origin, shares and even age of the posts.

In addition to this, contextual systems are most often used to increase the quality of existing information and augment linguistic systems, by giving more information to work on for these systems, being reputation, trust metrics or other ways of giving indicators on whether the information is statistically leaning towards being fake or not.

Below a series of contextual methods are presented. They are a collection of state of the art methods and old, proven methods.

Logistic regression

Crowdsourcing algorithms

Network analysis

Trust Networks

Trust Metrics

Content-driven reputation system

Knowledge Graphs

Paper: Fake News Detection using Machine Learning

Blog: I trained fake news detection AI with >95% accuracy and almost went crazy

Confusion matrix

https://www.dataschool.io/simple-guide-to-confusion-matrix-terminology/

Document Term Matrices

Collecting data from Twitter using R

install.packages("twitteR")

library(twitteR)

# Credentials are read from environment variables (the variable names are placeholders); never publish real keys in code

consumer_key <- Sys.getenv("TWITTER_CONSUMER_KEY")

consumer_secret <- Sys.getenv("TWITTER_CONSUMER_SECRET")

access_token <- Sys.getenv("TWITTER_ACCESS_TOKEN")

access_secret <- Sys.getenv("TWITTER_ACCESS_SECRET")

setup_twitter_oauth(consumer_key, consumer_secret, access_token, access_secret)

# terms <- c("iphonex", "iPhonex", "iphoneX", "iPhoneX", "iphone10", "iPhone10","iphone x", "iPhone x", "iphone X", "iPhone X", "iphone 10", "iPhone 10", "#iphonex", "#iPhonex", "#iphoneX", "#iPhoneX", "#iphone10", "#iPhone10")

terms <- c("Football")

terms_search <- paste(terms, collapse = " OR ")

iphonex <- searchTwitter(terms_search, n=1000, lang="ur")

iphonex <- twListToDF(iphonex)

write.table(iphonex,"/home/anapaula/iphonex.csv", append=T, row.names=F, col.names=T, sep=",")

R Programming

Anaconda

Anaconda is a free and open source distribution of the Python and R programming languages for data science and machine learning related applications (large-scale data processing, predictive analytics, scientific computing), that aims to simplify package management and deployment. Package versions are managed by the package management system conda. https://en.wikipedia.org/wiki/Anaconda_(Python_distribution)

Installation

https://www.anaconda.com/download/#linux

https://linuxize.com/post/how-to-install-anaconda-on-ubuntu-18-04/

RapidMiner

Jupyter Notebook

https://www.datacamp.com/community/tutorials/tutorial-jupyter-notebook

RTextTools - A Supervised Learning Package for Text Classification

https://journal.r-project.org/archive/2013/RJ-2013-001/RJ-2013-001.pdf

https://cran.r-project.org/web/packages/RTextTools/index.html

The train_model() function takes each algorithm, one by one, to produce an object passable to classify_model().

A convenience train_models() function trains all models at once by passing in a vector of model requests. The table below lists the nine available algorithms, and the line after it shows model creation for a single one of them (GLMNET):

| Algorithms | Author | From package | Keyword |
|---|---|---|---|
| General linearized models | Friedman et al., 2010 | glmnet | GLMNET* |
| Support vector machine | Meyer et al., 2012 | e1071 | SVM* |
| Maximum entropy | Jurka, 2012 | maxent | MAXENT* |
| Classification or regression tree | Ripley, 2012 | tree | TREE |
| Random forest | Liaw and Wiener, 2002 | randomForest | RF |
| Boosting | Tuszynski, 2012 | caTools | BOOSTING |
| Neural networks | Venables and Ripley, 2002 | nnet | NNET |
| Bagging | Peters and Hothorn, 2012 | ipred | BAGGING** |
| Scaled linear discriminant analysis | Peters and Hothorn, 2012 | ipred | SLDA** |

Notes: (*) low-memory algorithm; (**) very high-memory algorithm.

GLMNET <- train_model(container,"GLMNET")