Difference between revisions of "Supervised Machine Learning for Fake News Detection"

Adelo Vieira (→Summary of Results)

Revision as of 18:43, 27 April 2019

Contents

Declaration

Acknowledgement

Thanks to Muhammad, Graham and Mark

Abstract

Introduction

Chapter 1

Chapter 2 - Training a Supervised Machine Learning Model for fake news detection

Supervised Text Classification for Fake News Detection Using Machine Learning Models

Procedure

- The Dataset

- Splitting the data into Train and Test data

- Cleaning the data

- Building the Document-Term Matrix

- Model Building

- Cross validation

- Making predictions from the model created and displaying a Confusion matrix
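The steps listed above can be illustrated with a minimal Python sketch. It is not the R code used in this work: the helper names (`train_test_split`, `clean`, `document_term_matrix`, `confusion_matrix`), the 30% test fraction default, and the toy headlines are all assumptions made for illustration, and the model-building step itself is left out.

```python
import random
import re
from collections import Counter


def train_test_split(rows, test_fraction=0.3, seed=42):
    """Shuffle the rows and hold out `test_fraction` of them as test data."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]


def clean(text):
    """Lowercase the text and keep only alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def document_term_matrix(documents):
    """Return (vocabulary, matrix); matrix[i][j] counts term j in document i."""
    tokenized = [clean(doc) for doc in documents]
    vocabulary = sorted({tok for toks in tokenized for tok in toks})
    index = {term: j for j, term in enumerate(vocabulary)}
    matrix = []
    for toks in tokenized:
        row = [0] * len(vocabulary)
        for term, count in Counter(toks).items():
            row[index[term]] = count
        matrix.append(row)
    return vocabulary, matrix


def confusion_matrix(actual, predicted, labels=("fake", "real")):
    """Rows are actual labels, columns are predicted labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


# Hypothetical toy data: (headline, label) pairs invented for this sketch.
news = [
    ("Aliens sign for local football club", "fake"),
    ("Club confirms new coach for next season", "real"),
    ("Striker scores with eyes closed, doctors shocked", "fake"),
    ("League publishes fixture list", "real"),
    ("Referee revealed to be a robot", "fake"),
    ("Team wins cup final on penalties", "real"),
    ("Secret potion doubles sprint speed", "fake"),
    ("Stadium renovation approved by council", "real"),
    ("Goalkeeper banned for being too tall", "fake"),
    ("Federation announces youth programme", "real"),
]

train, test = train_test_split(news)
vocabulary, dtm = document_term_matrix([text for text, _ in train])
```

A classifier would be fitted on `dtm` and the training labels; its predictions on the test rows would then be passed to `confusion_matrix` together with the true labels.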

Results

Summary of Results


| Algorithms | Author | Package | Keyword | Accuracy: Kaggle fake news dataset, test data (30% of the dataset) | Accuracy: Kaggle fake news dataset, cross validation | Accuracy: Fake news Detector dataset, test data (30% of the dataset) | Accuracy: Fake news Detector dataset, cross validation | Accuracy: Gofaaas Fake News Dataset (500 rows), test data (30% of the dataset) | Accuracy: Gofaaas Fake News Dataset (500 rows), cross validation | Accuracy: Gofaaas Fake News Dataset (500 rows), using the Kaggle Model* | Accuracy: Gofaaas Fake News Dataset (500 rows), using the Detector Model** |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Naive Bayes | Bayes, Thomas | RTextTools (depends on e1071) | NB* | | | | | | | | |
| Support vector machine | Meyer et al., 2012 | RTextTools (depends on e1071) | SVM* | | | | | | | | |
| Random forest | Liaw and Wiener, 2002 | RTextTools (depends on randomForest) | RF | | | | | | | | |
| Generalized linear models | Friedman et al., 2010 | RTextTools (depends on glmnet) | GLMNET* | | | | | | | | |
| Maximum entropy | Jurka, 2012 | RTextTools (depends on maxent) | MAXENT* | | | | | | | | |
| Extreme Gradient Boosting | Chen & Guestrin, 2016 | xgboost | XGBOOST* | | | | | | | | |
| Classification or regression tree | Ripley, 2012 | RTextTools (depends on tree) | TREE | | | | | | | | |
| Boosting | Tuszynski, 2012 | RTextTools (depends on caTools) | BOOSTING | | | | | | | | |
| Neural networks | Venables and Ripley, 2002 | RTextTools (depends on nnet) | NNET | | | | | | | | |
| Bagging | Peters and Hothorn, 2012 | RTextTools (depends on ipred) | BAGGING** | | | | | | | | |
| Scaled linear discriminant analysis | Peters and Hothorn, 2012 | RTextTools (depends on ipred) | SLDA** | | | | | | | | |

\* Low-memory algorithm

\*\* Very high-memory algorithm

| Algorithms | Author | Package | Keyword | Accuracy: Kaggle fake news dataset (20,800 rows), test data (70% of the dataset) | Accuracy: Kaggle fake news dataset (20,800 rows), cross validation | Accuracy: Fake news Detector dataset (10,000 rows), test data (70% of the dataset) | Accuracy: Fake news Detector dataset (10,000 rows), cross validation | Accuracy: Gofaaas Fake News Dataset (500 rows), test data (70% of the dataset) | Accuracy: Gofaaas Fake News Dataset (500 rows), cross validation | Accuracy: Gofaaas Fake News Dataset (500 rows), using the Kaggle Model^ | Accuracy: Gofaaas Fake News Dataset (500 rows), using the Detector Model^^ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Naive Bayes | Bayes, Thomas | e1071 | NB* | | | | | | | | |
| Support vector machine | Meyer et al., 2012 | RTextTools (depends on e1071) | SVM* | | | | | | | | |
| Random forest | Liaw and Wiener, 2002 | randomForest | RF | | | | | | | | |
| Extreme Gradient Boosting | Chen & Guestrin, 2016 | xgboost | XGBOOST* | | | | | | | | |
| Generalized linear models | Friedman et al., 2010 | RTextTools (depends on glmnet) | GLMNET* | | | | | | | | |
| Maximum entropy | Jurka, 2012 | RTextTools (depends on maxent) | MAXENT* | | | | | | | | |

\* Low-memory algorithm

\*\* Very high-memory algorithm

^

^^

Evaluation of Results

We evaluate our approach in different settings. First, we perform cross-validation on our noisy training set; second, and more importantly, we train models on the training set and validate them against a manually created gold standard. Moreover, we evaluate two variants, i.e., including and excluding user features. [smb:home/adelo/1-system/1-disco_local/1-mis_archivos/1-pe/1-ciencia/1-computacion/2-data_analysis-machine_learning/gofaaaz-machine_learning/5-References/7-Weakly_supervised_searning_for_fake_news_detection_on_twitter.pdf]
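Cross-validation as mentioned above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names `k_fold_indices` and `cross_validate`, the choice of k = 5, and the majority-class baseline that stands in for a real classifier are all hypothetical and are not taken from the cited work or from the R packages used here.

```python
def k_fold_indices(n_rows, k=5):
    """Yield (train_idx, test_idx) pairs so that every row is tested exactly once.

    Rows are taken in their given order; real data would normally be shuffled first.
    """
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_rows))
        yield train_idx, test_idx
        start += size


def cross_validate(labels, k=5):
    """Mean accuracy over k folds of a baseline that always predicts the majority class."""
    accuracies = []
    for train_idx, test_idx in k_fold_indices(len(labels), k):
        train_labels = [labels[i] for i in train_idx]
        # "Training": pick the most frequent label seen in the training folds.
        majority = max(sorted(set(train_labels)), key=train_labels.count)
        # "Testing": score that constant prediction on the held-out fold.
        correct = sum(labels[i] == majority for i in test_idx)
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / len(accuracies)


# Hypothetical labels: eight fake items followed by two real ones.
toy_labels = ["fake"] * 8 + ["real"] * 2
mean_accuracy = cross_validate(toy_labels, k=5)
```

Replacing the majority-class baseline with a trained model gives the usual cross-validated accuracy; validation against a gold standard differs only in that the test labels come from a separate, manually checked set.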

The Gofaaas-Fake News Detector R Package

Installation

Functions

Datasets used

Kaggle Fake News Dataset

https://www.kaggle.com/c/fake-news/data

Distribution of the data:

The distribution of Stance classes in train_stances.csv is as follows:

| rows | unrelated | discuss | agree | disagree |

|---|---|---|---|---|

| 49972 | 0.73131 | 0.17828 | 0.0736012 | 0.0168094 |
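To make the class imbalance in this table concrete, the proportions can be converted back into approximate row counts. The short Python sketch below uses only the figures from the table; the variable names are chosen for illustration.

```python
total_rows = 49972

# Class proportions as reported in the table above.
proportions = {
    "unrelated": 0.73131,
    "discuss": 0.17828,
    "agree": 0.0736012,
    "disagree": 0.0168094,
}

# Approximate number of rows per class, rounded to the nearest integer.
counts = {label: round(total_rows * share) for label, share in proportions.items()}
```

The rounded counts add up to the 49972 rows in the table, and the `unrelated` class is more than forty times larger than the `disagree` class, which matters when accuracy is used as the evaluation metric.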

Fake News Detector Dataset

Gofaaas Fake News Dataset

Algorithms

Naive Bayes

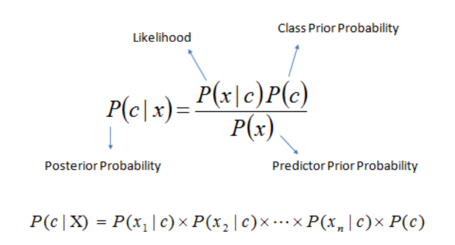

Naïve Bayes is based on Bayes' theorem; therefore, in order to understand Naïve Bayes, it is important to first understand Bayes' theorem.

Bayes' theorem is a mathematical formula for determining conditional probability, which is the probability of something happening given that something else has already occurred.

- P(c|x) is the posterior probability of class (target) given predictor (attribute).

- P(c) is the prior probability of class.

- P(x|c) is the likelihood which is the probability of predictor given class.

- P(x) is the prior probability of predictor.
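Written out with the terms defined above, and assuming the standard form of the theorem, the formula is:

$$
P(c \mid x) = \frac{P(x \mid c)\, P(c)}{P(x)}
$$

For a document with several predictors $x_1, \dots, x_n$, the "naïve" assumption is that the predictors are independent given the class, so that $P(x \mid c) = \prod_{i=1}^{n} P(x_i \mid c)$.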

Prior probability, in Bayesian statistical inference, is the probability of an event before new data is collected.

Posterior probability is the revised probability of an event occurring after taking into consideration new information.

In statistical terms, the posterior probability is the probability of event A occurring given that event B has occurred.
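A small worked example may help to connect prior, likelihood and posterior. The Python sketch below applies Bayes' theorem with the naïve independence assumption; the function name `posterior` and all probabilities are hypothetical values invented for illustration, not estimates from any of the datasets used in this work.

```python
from math import prod


def posterior(priors, likelihoods, words):
    """Return P(class | words) for each class under the naive independence assumption."""
    # Numerator of Bayes' theorem: P(c) times the product of P(word | c).
    joint = {c: priors[c] * prod(likelihoods[c][w] for w in words) for c in priors}
    # Denominator P(x): the sum of the numerators over all classes.
    evidence = sum(joint.values())
    return {c: joint[c] / evidence for c in joint}


# Hypothetical prior probabilities of each class.
priors = {"fake": 0.5, "real": 0.5}

# Hypothetical likelihoods P(word | class).
likelihoods = {
    "fake": {"shocking": 0.8, "official": 0.2},
    "real": {"shocking": 0.2, "official": 0.6},
}

result = posterior(priors, likelihoods, ["shocking"])
```

With these made-up numbers, observing the word "shocking" raises the probability of the `fake` class from the prior of 0.5 to a posterior of about 0.8.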

Support vector machine

Random forest

Extreme Gradient Boosting

The XGBoost R package

XGBoost - Extreme Gradient Boosting:

- https://xgboost.readthedocs.io/en/latest/

- https://cran.r-project.org/web/packages/xgboost/index.html

The RTextTools package

RTextTools - A Supervised Learning Package for Text Classification:

- https://journal.r-project.org/archive/2013/RJ-2013-001/RJ-2013-001.pdf

- http://www.rtexttools.com/

- https://cran.r-project.org/web/packages/RTextTools/index.html

Chapter 3 - Gofaaas Web App

A way to interact, test and display the model results

Conclusion