Difference between revisions of "Página de pruebas 4"

Adelo Vieira

===Applying Bayes' Theorem - Example 2===

* Let's extend our spam filter by monitoring three additional terms alongside "Viagra": "money", "groceries", and "unsubscribe".

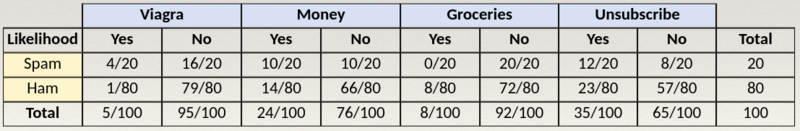

* We will assume that the Naïve Bayes learner was trained by constructing the following likelihood table, which records how often each of these four words appears in 100 emails:

[[File:ApplyingBayesTheorem-Example.png|800px|thumb|center|]]
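The likelihood table is essentially per-class counts turned into proportions. The Python sketch below shows one way to build such a table. It assumes that each email has already been reduced to the set of monitored words it contains. The function name `likelihood_table` and the four toy emails are hypothetical and are not the 100 emails behind the table above.

```python
from collections import Counter

# The four monitored words (lower-cased for matching).
WORDS = ("viagra", "money", "groceries", "unsubscribe")


def likelihood_table(emails):
    """Build class priors and P(word present | class) from labelled emails.

    emails: iterable of (set_of_words_in_email, label) pairs.
    HYPOTHETICAL helper, written for illustration only.
    """
    class_counts = Counter()  # number of emails per class
    word_counts = {}          # class -> Counter of emails containing each word
    for words, label in emails:
        class_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(w for w in WORDS if w in words)
    total = sum(class_counts.values())
    priors = {c: n / total for c, n in class_counts.items()}
    table = {c: {w: word_counts[c][w] / n for w in WORDS} for c, n in class_counts.items()}
    return priors, table


# Hypothetical toy data, not the table's real training set.
emails = [
    ({"viagra", "unsubscribe"}, "spam"),
    ({"money"}, "spam"),
    ({"groceries", "money"}, "ham"),
    ({"unsubscribe"}, "ham"),
]
priors, table = likelihood_table(emails)
print(priors)
print(table["spam"])
```

A word that never appears in a class gets probability 0 for that class. Groceries in spam has this behaviour in the table above.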

As new messages are received, we must calculate the posterior probability to determine whether each message is more likely to be spam or ham, given the likelihood of the words found in its text.

We can define the problem as shown in the equation below. It captures the probability that a message is spam given that the words 'Viagra' and 'Unsubscribe' are present and the words 'Money' and 'Groceries' are not.

<math>
P(Spam|Viagra \cap \neg Money \cap \neg Groceries \cap Unsubscribe) = \frac{P(Viagra \cap \neg Money \cap \neg Groceries \cap Unsubscribe | Spam) \cdot P(Spam)}{P(Viagra \cap \neg Money \cap \neg Groceries \cap Unsubscribe)}
</math>

<span style="color: #007bff">This equation is computationally difficult to solve directly. As more features are added, the number of possible intersecting events (every combination of words being present or absent) grows exponentially. Storing a probability for each of them requires tremendous amounts of memory. Therefore, '''class-conditional independence''' can be assumed to simplify the problem.</span>
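The small Python sketch below makes the growth concrete by counting table entries. It assumes binary (present/absent) word features and two classes, and it leaves out the class priors. A full joint table needs one entry per class for every combination of words. The class-conditional (naïve) table needs only one presence probability per word per class, because absence is just the complement. The function names are illustrative and do not come from any library.

```python
def joint_table_entries(n_words: int, n_classes: int = 2) -> int:
    """Entries needed to store P(word pattern | class) for every
    present/absent pattern of n_words binary features, for every class."""
    return n_classes * 2 ** n_words


def naive_table_entries(n_words: int, n_classes: int = 2) -> int:
    """Entries needed under class-conditional independence: one
    P(word present | class) per word and class (absence = 1 - presence)."""
    return n_classes * n_words


for n in (4, 20, 50):
    print(n, joint_table_entries(n), naive_table_entries(n))
# 4 32 8
# 20 2097152 40
# 50 2251799813685248 100
```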

<br />
'''Class-conditional independence'''

The work becomes much easier if we can exploit the fact that Naïve Bayes assumes independence among events. Specifically, Naïve Bayes assumes '''class-conditional independence'''. This means that the events (here, the presence or absence of each word) are independent of one another as long as they are conditioned on the same class value.
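Class-conditional independence describes probabilities within each class. It does not make the words independent once the class is unknown. The Python sketch below checks this using the Viagra and Unsubscribe figures from the calculations on this page: P(Viagra|Spam) = 4/20, P(Unsubscribe|Spam) = 12/20, and P(Spam) = 20/100, plus 1/80, 23/80, and P(Ham) = 80/100 for ham. The variable names are illustrative.

```python
from fractions import Fraction as F

# Class priors and per-class presence probabilities used on this page.
p_class = {"spam": F(20, 100), "ham": F(80, 100)}
p_viagra = {"spam": F(4, 20), "ham": F(1, 80)}
p_unsub = {"spam": F(12, 20), "ham": F(23, 80)}

# Marginal probabilities via the law of total probability.
p_v = sum(p_viagra[c] * p_class[c] for c in p_class)
p_u = sum(p_unsub[c] * p_class[c] for c in p_class)

# Joint probability when the two words are independent *within* each class.
p_vu = sum(p_viagra[c] * p_unsub[c] * p_class[c] for c in p_class)

print(p_v, p_u)         # 1/20 7/20
print(p_vu, p_v * p_u)  # 43/1600 7/400
```

The marginals reproduce the table totals of 5/100 and 35/100. However, the joint probability (43/1600) differs from the product of the marginals (7/400).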

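Assuming class-conditional independence allows us to factor the numerator using the probability rule for events that are independent given the class, <math>P(A \cap B | C) = P(A | C) \times P(B | C)</math>. This results in a much easier-to-compute formulation:

<math>
P(Spam|Viagra \cap \neg Money \cap \neg Groceries \cap Unsubscribe) = \frac{P(Viagra|Spam) \cdot P(\neg Money|Spam) \cdot P(\neg Groceries|Spam) \cdot P(Unsubscribe|Spam) \cdot P(Spam)}{P(Viagra \cap \neg Money \cap \neg Groceries \cap Unsubscribe)}
</math>

The denominator does not factor into a product of the individual word probabilities, because the words are only assumed to be independent within each class. Instead, it equals the sum of the same kind of product over both classes (spam and ham). It is therefore the same for every class and acts only as a normalizing constant.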

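For example, suppose that a message contains the terms '''Viagra''' and '''Unsubscribe''', but does not contain either '''Money''' or '''Groceries'''. The absent words still contribute to the calculation through the probability of their absence, for example <math>P(\neg Money|Spam) = \frac{10}{20}</math>. Using the values from the likelihood table:

<math>
P(Spam|Viagra \cap \neg Money \cap \neg Groceries \cap Unsubscribe) = \frac{\frac{4}{20} \cdot \frac{10}{20} \cdot \frac{20}{20} \cdot \frac{12}{20} \cdot \frac{20}{100}}{\frac{4}{20} \cdot \frac{10}{20} \cdot \frac{20}{20} \cdot \frac{12}{20} \cdot \frac{20}{100} + \frac{1}{80} \cdot \frac{60}{80} \cdot \frac{72}{80} \cdot \frac{23}{80} \cdot \frac{80}{100}} \approx \frac{0.012}{0.012 + 0.00194} \approx 0.861
</math>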
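The calculation above can be sketched in Python as two small functions. The first multiplies the prior by P(word|class) for each word that is present and by 1 − P(word|class) for each word that is absent. The second normalizes the scores over both classes. The presence probabilities in `MODEL` are an assumption. They are inferred from the factors used in the presentation's calculation on this page; for example, P(¬Money|Ham) = 60/80 implies P(Money|Ham) = 20/80. Please check them against the likelihood table image. `MODEL`, `class_score`, and `posterior` are hypothetical names.

```python
from fractions import Fraction as F

# ASSUMPTION: priors P(class) and presence probabilities P(word | class)
# inferred from the presentation's factors; verify against the table image.
MODEL = {
    "spam": (F(20, 100), {"viagra": F(4, 20), "money": F(10, 20),
                          "groceries": F(0, 20), "unsubscribe": F(12, 20)}),
    "ham": (F(80, 100), {"viagra": F(1, 80), "money": F(20, 80),
                         "groceries": F(8, 80), "unsubscribe": F(23, 80)}),
}


def class_score(message_words, prior, presence):
    """Unnormalized score: P(class) times, for each monitored word,
    P(word | class) if present or 1 - P(word | class) if absent."""
    score = prior
    for word, p in presence.items():
        score *= p if word in message_words else 1 - p
    return score


def posterior(message_words, model):
    """Divide each class score by their sum (the evidence) so they add to 1."""
    scores = {c: class_score(message_words, prior, presence)
              for c, (prior, presence) in model.items()}
    evidence = sum(scores.values())
    return {c: s / evidence for c, s in scores.items()}


post = posterior({"viagra", "unsubscribe"}, MODEL)
print(f"{float(post['spam']):.3f}")  # 0.861
```

Exact fractions are used here so that the result can be compared directly with the hand calculation, without floating-point rounding.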

<div class="mw-collapsible mw-collapsed" style="width:100%; background: #ededf2; padding: 5px">
'''The presentation works through this example as follows. I think it contains some mistakes:'''
<div class="mw-collapsible-content">
[[File:ApplyingBayesTheorem-ClassConditionalIndependance.png|800px|thumb|center|]]

Using the values in the likelihood table, we can start plugging numbers into these equations. Because the denominator is the same in both cases, it can be ignored for now. The overall likelihood of spam is then:

<math>
\frac{4}{20} \cdot \frac{10}{20} \cdot \frac{20}{20} \cdot \frac{12}{20} \cdot \frac{20}{100} = 0.012
</math>

The likelihood of ham, given the same words, is:

<math>
\frac{1}{80} \cdot \frac{60}{80} \cdot \frac{72}{80} \cdot \frac{23}{80} \cdot \frac{80}{100} \approx 0.002
</math>
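The Python snippet below is an arithmetic check on the two products above. It multiplies the same factors exactly using fractions. The spam product is exactly 0.012, and the ham product is about 0.00194, which the presentation rounds to 0.002.

```python
from fractions import Fraction as F

# Factors copied from the two equations above, presumably in the order
# Viagra, not Money, not Groceries, Unsubscribe, prior.
spam_likelihood = F(4, 20) * F(10, 20) * F(20, 20) * F(12, 20) * F(20, 100)
ham_likelihood = F(1, 80) * F(60, 80) * F(72, 80) * F(23, 80) * F(80, 100)

print(spam_likelihood, float(spam_likelihood))  # 3/250 0.012
print(ham_likelihood, float(ham_likelihood))    # 621/320000 0.001940625
```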

Because 0.012/0.002 = 6, we can say that this message is about six times more likely to be spam than ham. However, to convert these likelihoods into probabilities, we need one last step.

The probability of spam is equal to the likelihood that the message is spam divided by the likelihood that the message is either spam or ham:

<math>
\frac{0.012}{0.012 + 0.002} \approx 0.857
</math>

The probability that the message is spam is therefore about 0.857. Because this exceeds the threshold of 0.5, the message is classified as spam.
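The last two steps can be reproduced as a short Python sketch. With the exact products in place of the rounded 0.012 and 0.002, the ratio is about 6.18 rather than 6, and the posterior is about 0.861 rather than 0.857. The classification at the 0.5 threshold is the same either way. The variable names are illustrative.

```python
from fractions import Fraction as F

spam = F(4, 20) * F(10, 20) * F(20, 20) * F(12, 20) * F(20, 100)  # exactly 0.012
ham = F(1, 80) * F(60, 80) * F(72, 80) * F(23, 80) * F(80, 100)   # about 0.00194

ratio = spam / ham                         # how many times more likely spam is than ham
p_spam = spam / (spam + ham)               # spam / (spam or ham)
p_spam_rounded = 0.012 / (0.012 + 0.002)   # using the presentation's rounded inputs

print(f"{float(ratio):.2f}")    # 6.18
print(f"{float(p_spam):.3f}")   # 0.861
print(f"{p_spam_rounded:.3f}")  # 0.857

THRESHOLD = 0.5  # decision threshold used in the text
print("spam" if p_spam > THRESHOLD else "ham")  # spam
```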

</div>
</div>

<br />

Revision as of 16:12, 6 February 2021

Applying Bayes' Theorem - Example 2

- Let's extend our spam filter by adding a few additional terms to be monitored: "money", "groceries", and "unsubscribe".

- We will assume that the Naïve Bayes learner was trained by constructing a likelihood table for the appearance of these four words in 100 emails, as shown in the following table:

As new messages are received, the posterior probability must be calculated to determine whether the messages are more likely to be spam or ham, given the likelihood of the words found in the message text.

We can define the problem as shown in the equation below, which captures the probability that a message is spam, given that the words 'Viagra' and Unsubscribe are present and that the words 'Money' and 'Groceries' are not.

For a number of reasons, this is computationally difficult to solve. As additional features are added, tremendous amounts of memory are needed to store probabilities for all of the possible intersecting events. Therefore, Class-Conditional independence can be assumed to simplify the problem.

Class-Conditional independence

The work becomes much easier if we can exploit the fact that Naïve Bayes assumes independence among events. Specifically, Naïve Bayes assumes class-conditional independence, which means that events are independent so long as they are conditioned on the same class value.

Assuming conditional independence allows us to simplify the equation using the probability rule for independent events . This results in a much easier-to-compute formulation:

For example, suppose that a message contains the terms Viagra and Unsubscribe, but does not contain either Money or Groceries:

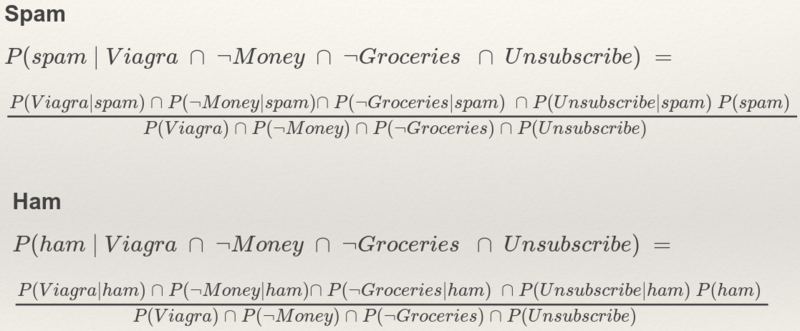

The presentation shows this example this way. I think there are mistakes in this presentation:

Using the values in the likelihood table, we can start filling numbers in these equations. Because the denominatero si the same in both cases, it can be ignored for now. The overall likelihood of spam is then:

While the likelihood of ham given the occurrence of these words is:

Because 0.012/0.002 = 6, we can say that this message is six times more likely to be spam than ham. However, to convert these numbers to probabilities, we need one last step.

The probability of spam is equal to the likelihood that the message is spam divided by the likelihood that the message is either spam or ham:

The probability that the message is spam is 0.857. As this is over the threshold of 0.5, the message is classified as spam.