Test page 3

Mean Absolute Error - MAE

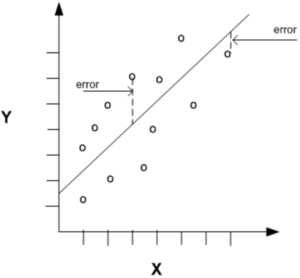

The Mean Absolute Error (MAE) is calculated by taking the sum of the absolute differences between the actual and predicted values (i.e. the errors with the sign removed) and multiplying it by the reciprocal of the number of observations, which is the same as averaging the absolute errors.

Note that the value returned by the equation depends on the range of the values in the dependent variable; that is, MAE is scale dependent. Many prefer MAE as their evaluation metric because every error contributes in proportion to its absolute size, so large errors are not penalized disproportionately.
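
The calculation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `mean_absolute_error` and the sample values are assumptions made for this example. The second call rescales the same data by 10 to illustrate scale dependence.

```python
def mean_absolute_error(actual, predicted):
    """Average of the absolute differences between actual and predicted values."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Sum of the errors with the sign removed
    total = sum(abs(a - p) for a, p in zip(actual, predicted))
    # Multiply by the reciprocal of the number of observations
    return total * (1 / len(actual))


# Illustrative values only (not taken from any real data set)
actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.0, 5.0, 4.0, 8.0]

mae = mean_absolute_error(actual, predicted)
print(mae)  # 1.0

# The same data multiplied by 10 gives an MAE that is 10 times larger
mae_scaled = mean_absolute_error([a * 10 for a in actual],
                                 [p * 10 for p in predicted])
print(mae_scaled)  # 10.0
```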

Mean Squared Error - MSE

The Mean Squared Error (MSE) is very similar to the MAE, except that it is calculated by taking the sum of the squared differences between the actual and predicted values and multiplying it by the reciprocal of the number of observations. Note that squaring the differences also removes their sign.
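
As a rough sketch of this calculation, the plain-Python function below squares each difference before averaging. The name `mean_squared_error` and the sample values are assumptions chosen for illustration.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Squaring removes the sign of each difference
    total = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Multiply by the reciprocal of the number of observations
    return total * (1 / len(actual))


# Illustrative values only
actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.0, 5.0, 4.0, 8.0]

mse = mean_squared_error(actual, predicted)
print(mse)  # 1.5
```

Here the single error of size 2 contributes 4 of the total 6, which shows how squaring gives larger errors more influence than they would have in the MAE.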

Root Mean Squared Error - RMSE

The Root Mean Squared Error (RMSE) is the square root of the MSE: the sum of the squared differences between the actual and predicted values is multiplied by the reciprocal of the number of observations, and the square root of the result is taken. Taking the square root returns the error to the same units as the dependent variable.
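
The order of operations matters here, so a small sketch may help: the squared differences are averaged first, and the square root is applied last. The function name `root_mean_squared_error` and the sample values are assumptions made for this example.

```python
import math


def root_mean_squared_error(actual, predicted):
    """Square root of the mean of the squared differences."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_sum = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    mse = squared_sum / len(actual)  # mean of the squared errors
    return math.sqrt(mse)            # the square root is taken last


# Illustrative values only: three exact predictions and one error of size 4
actual = [3.0, 5.0, 2.0, 7.0]
predicted = [3.0, 5.0, 2.0, 11.0]

rmse = root_mean_squared_error(actual, predicted)
print(rmse)  # 2.0
```

For these values the MAE would be 1.0, so the single large error has a visibly stronger effect on the RMSE.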

Mean Absolute Percentage Error - MAPE

Mean Absolute Percentage Error (MAPE) is a scale-independent measure of the performance of a regression model. For each observation, the difference between the actual value and the predicted value is divided by the actual value and the absolute value of the result is taken. These ratios are summed, multiplied by the reciprocal of the number of observations, and then multiplied by 100 to obtain a percentage.
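
The steps above can be sketched as follows. The function name `mean_absolute_percentage_error` and the sample values are assumptions for illustration. Because each error is divided by the actual value, the calculation is undefined when an actual value is zero; raising an error in that case is a design choice of this sketch, not something the description above prescribes.

```python
def mean_absolute_percentage_error(actual, predicted):
    """Mean of |actual - predicted| / |actual|, expressed as a percentage."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        # Assumption of this sketch: refuse zero actual values
        raise ValueError("MAPE is undefined when an actual value is zero")
    # Sum of the absolute relative errors
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    # Multiply by the reciprocal of the number of observations, then by 100
    return total * (1 / len(actual)) * 100


# Illustrative values only
actual = [100.0, 200.0, 50.0, 400.0]
predicted = [110.0, 180.0, 50.0, 440.0]

mape = mean_absolute_percentage_error(actual, predicted)
print(round(mape, 6))  # 7.5

# Rescaling both series by 10 leaves the result essentially unchanged
mape_scaled = mean_absolute_percentage_error([a * 10 for a in actual],
                                             [p * 10 for p in predicted])
print(round(mape_scaled, 6))  # 7.5
```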

R squared

R², or the Coefficient of Determination, is the ratio of the amount of variance explained by a model to the total amount of variance in the dependent variable. It typically lies in the range [0, 1], with values closer to 1 indicating that the model explains more of the variance.
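
One common way to compute this ratio is as 1 minus the residual sum of squares divided by the total sum of squares. The sketch below assumes that form; the function name `r_squared` and the sample values are hypothetical. For a least-squares model with an intercept this matches the explained-to-total variance ratio, but computed this way the value can fall below 0 when a model predicts worse than always guessing the mean.

```python
def r_squared(actual, predicted):
    """Coefficient of determination computed as 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    # Total variation of the dependent variable around its mean
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R squared is undefined when the actual values are constant")
    # Variation left unexplained by the model
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return 1 - ss_res / ss_tot


# Illustrative values only
actual = [1.0, 2.0, 3.0, 4.0, 5.0]
predicted = [1.1, 1.9, 3.2, 3.8, 5.0]

r2 = r_squared(actual, predicted)
print(round(r2, 4))  # 0.99

# Two boundary cases: perfect predictions, and always predicting the mean
r2_perfect = r_squared(actual, actual)
r2_mean = r_squared(actual, [3.0] * 5)
print(r2_perfect, r2_mean)  # 1.0 0.0
```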
