Difference between revisions of "Página de pruebas 3"

Adelo Vieira (talk | contribs) (→Measuring Correlation - The Correlation Coefficient) |


Revision as of 12:32, 26 June 2020


Correlation & Simple and Multiple Regression

- 17/06: Recorded class - Correlation & Regression

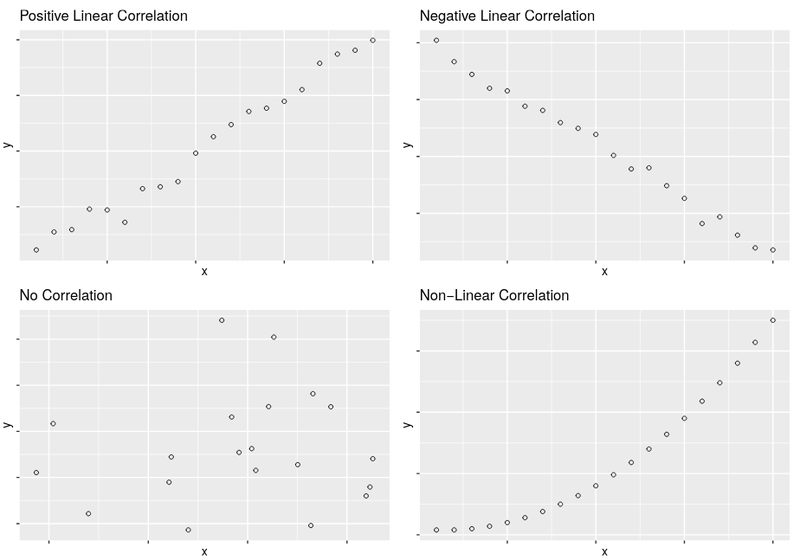

Correlation

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. https://en.wikipedia.org/wiki/Correlation_and_dependence

Where moderate to strong correlations are found, we can use them to predict the value of one variable from what is known about the other variable.

The following are examples of correlations:

- Ice cream sales and temperature

- Phytoplankton population at a given latitude and surface sea temperature

- Blood alcohol level and the odds of being involved in a road traffic accident

Measuring Correlation - The Correlation Coefficient

Karl Pearson (1857-1936)

The correlation coefficient, developed by Karl Pearson, provides a much more exact way of determining the type and degree of a linear correlation between two variables.

Pearson's r


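Pearson's r, also known as the Pearson product-moment correlation coefficient, is a measure of the strength of the relationship between two variables and is given by this equation:

$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2 \sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

Where $\bar{x}$ and $\bar{y}$ are the means of the x (independent) and y (dependent) variables, respectively, and $x_i$ and $y_i$ are the individual observations for each variable.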
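As a minimal sketch, Pearson's r can be computed directly from its definition: the sum of the products of the deviations from the two means, divided by the square root of the product of the two sums of squared deviations. The function name `pearson_r` and the temperature and sales figures below are made up for illustration; they are not taken from the class.

```python
import math

def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Numerator: sum of products of deviations from the means.
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Denominator: square root of the product of the summed squared deviations.
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("r is undefined when a variable is constant")
    return cross / math.sqrt(ss_x * ss_y)

# Hypothetical data: daily temperature (degrees C) and ice cream sales (units).
temperature = [14, 16, 20, 23, 25, 30]
sales = [210, 250, 330, 400, 420, 520]
r = pearson_r(temperature, sales)
print(round(r, 3))
```

With data that rise together like this, the result is close to +1; reversing one of the lists would give a value close to -1.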

The direction of the correlation:

- Values of Pearson's r range between -1 and +1.

- Values greater than zero indicate a positive correlation, with 1 being a perfect positive correlation.

- Values less than zero indicate a negative correlation, with -1 being a perfect negative correlation.

The degree of the correlation (using the absolute value of r):

| Degree of correlation | Interpretation |

|---|---|

| 0.8 to 1.0 | Very strong |

| 0.6 to 0.8 | Strong |

| 0.4 to 0.6 | Moderate |

| 0.2 to 0.4 | Weak |

| 0 to 0.2 | Very weak or non-existent |
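The direction and degree rules above can be sketched as a small lookup. The function name `describe_correlation` is hypothetical, and because the bands in the table share their boundary values (0.8 appears in two rows), this sketch assumes a boundary value belongs to the stronger band.

```python
def describe_correlation(r):
    """Return (direction, degree) labels for a Pearson's r value."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("r must lie between -1 and +1")
    # Direction comes from the sign of r.
    if r > 0:
        direction = "positive"
    elif r < 0:
        direction = "negative"
    else:
        direction = "none"
    # Degree comes from the absolute value of r.
    # Assumption: a boundary value (e.g. 0.8) is assigned to the stronger band.
    strength = abs(r)
    if strength >= 0.8:
        degree = "very strong"
    elif strength >= 0.6:
        degree = "strong"
    elif strength >= 0.4:
        degree = "moderate"
    elif strength >= 0.2:
        degree = "weak"
    else:
        degree = "very weak or non-existent"
    return direction, degree

print(describe_correlation(-0.8))
print(describe_correlation(0.5))
```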

The coefficient of determination

The value $R^2$ is termed the coefficient of determination because it measures the proportion of variance in the dependent variable that is determined by its relationship with the independent variables. This is calculated from two values:

- The total sum of squares: $SS_{tot} = \sum_{i} (y_i - \bar{y})^2$

- The residual sum of squares: $SS_{res} = \sum_{i} (y_i - \hat{y}_i)^2$

These combine as $R^2 = 1 - \frac{SS_{res}}{SS_{tot}}$.

The total sum of squares is the sum of the squared differences between the actual values ($y_i$) and their mean ($\bar{y}$). The residual sum of squares is the sum of the squared differences between the predicted values ($\hat{y}_i$) and their respective actual values.

$R^2$ is used to gain some idea of the goodness of fit of a model. It is a measure of how well the regression predictions approximate the actual data points. An $R^2$ of 1 means that the predicted values perfectly fit the actual data.
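As a rough illustration, the coefficient of determination can be computed from the two sums of squares as one minus the ratio of the residual sum of squares to the total sum of squares. The function name `r_squared` and the actual and predicted values below are invented for this sketch.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    mean_actual = sum(actual) / len(actual)
    # Total sum of squares: actual values against their mean.
    ss_tot = sum((y - mean_actual) ** 2 for y in actual)
    # Residual sum of squares: actual values against predicted values.
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    if ss_tot == 0:
        raise ValueError("undefined when all actual values are identical")
    return 1 - ss_res / ss_tot

# Hypothetical observations and model predictions.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 7.5, 8.5]
fit = r_squared(actual, predicted)
print(round(fit, 2))  # SS_tot = 20, SS_res = 1, so this prints 0.95
```

Passing the actual values as their own predictions gives exactly 1, the perfect-fit case.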

Testing the "generalizability" of the correlation

Having determined the value of the correlation coefficient (r) for a pair of variables, you should next determine the likelihood that the value of r occurred purely by chance. In other words, what is the likelihood that the relationship in your sample reflects a real relationship in the population?

Before carrying out any test, the alpha ($\alpha$) level should be set. This is a measure of how willing we are to be wrong when we say that there is a relationship between two variables. A commonly used $\alpha$ level in research is 0.05.

An $\alpha$ level of 0.05 means that you could possibly be wrong up to 5 times out of 100 when you state that there is a relationship in the population based on a correlation found in the sample.

In order to test whether the correlation in the sample can be generalised to the population, we must first identify the null hypothesis ($H_0$) and the alternative hypothesis ($H_1$).

This is a test against the population correlation coefficient ($\rho$), so these hypotheses are:

- $H_0: \rho = 0$ - There is no correlation in the population

- $H_1: \rho \neq 0$ - There is correlation in the population

Next, we calculate the value of the test statistic using the following equation:

$$t = r\sqrt{\frac{n - 2}{1 - r^2}}$$

So for a correlation coefficient value of -0.8, an $r^2$ value of 0.64 and a sample size of 102, this would be:

$$t = -0.8 \times \sqrt{\frac{102 - 2}{1 - 0.64}} = -0.8 \times \sqrt{\frac{100}{0.36}} \approx -13.33$$

Checking the t-tables for an $\alpha$ level of 0.05, $n - 2 = 100$ degrees of freedom and a two-tailed test (because we are testing if the population correlation coefficient $\rho$ is less than or greater than 0) we get a critical value of approximately 1.984. As the absolute value of the test statistic (13.33) is greater than the critical value, we can reject the null hypothesis and conclude that there is likely to be a correlation in the population.
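The same procedure can be sketched in code for a separate, hypothetical sample (r = 0.5, n = 28), using the standard test statistic for a correlation coefficient, t = r * sqrt((n - 2) / (1 - r^2)). The function name `correlation_t_statistic` is an assumption of this sketch, and the critical value 2.056 is read from a t-table (two-tailed, alpha level 0.05, 26 degrees of freedom) rather than computed.

```python
import math

def correlation_t_statistic(r, n):
    """t statistic for testing H0: population correlation = 0."""
    if n < 3:
        raise ValueError("need at least 3 observations")
    if not -1.0 < r < 1.0:
        raise ValueError("r must be strictly between -1 and +1")
    return r * math.sqrt((n - 2) / (1 - r * r))

# Hypothetical sample, chosen only for illustration.
r_sample = 0.5
n_sample = 28

# Assumption: taken from a t-table for alpha = 0.05, two-tailed,
# with n - 2 = 26 degrees of freedom.
critical_value = 2.056

t = correlation_t_statistic(r_sample, n_sample)
# Two-tailed test: compare the absolute value of t with the critical value.
reject_null = abs(t) > critical_value
print(round(t, 3), reject_null)
```

Because the test is two-tailed, a strongly negative r leads to rejection just as a strongly positive one does.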

Correlation ≠ Causation

Even if you find the strongest of correlations, you should never interpret it as more than just that... a correlation.

Causation indicates a relationship between two events where one event is affected by the other. In statistics, when the value of one event, or variable, increases or decreases as a result of other events, it is said there is causation.

Let's say you have a job and get paid a certain rate per hour. The more hours you work, the more income you will earn, right? This means there is a relationship between the two events and also that a change in one event (hours worked) causes a change in the other (income). This is causation in action! https://study.com/academy/lesson/causation-in-statistics-definition-examples.html

Given any two correlated events A and B, the following relationships are possible:

- A causes B

- B causes A

- A and B are both the product of a common underlying cause, but do not cause each other

- Any relationship between A and B is simply the result of coincidence.

Although a correlation between two variables could possibly indicate the presence of

- a causal relationship between the variables in either direction (x causes y, y causes x); or

- the influence of one or more confounding variables, that is, other variables that influence both x and y,

it can also indicate the absence of any connection. In other words, it can be entirely spurious, the product of pure chance. In the following slides, we will look at a few examples...
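To make the confounding case concrete, here is a small simulation sketch in which a hidden variable drives both A and B, while neither A nor B has any effect on the other. All names, the noise levels and the random seed are arbitrary choices for illustration.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson's r computed from deviations about the means."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / math.sqrt(ss_x * ss_y)

rng = random.Random(42)  # fixed seed so the run is repeatable
n = 2000

# Hidden common cause (the confounder).
confounder = [rng.gauss(0, 1) for _ in range(n)]
# A and B each depend on the confounder plus independent noise;
# neither one appears in the formula for the other.
noise_a = [rng.gauss(0, 0.5) for _ in range(n)]
noise_b = [rng.gauss(0, 0.5) for _ in range(n)]
a = [z + e for z, e in zip(confounder, noise_a)]
b = [z + e for z, e in zip(confounder, noise_b)]

r_ab = pearson_r(a, b)                # strong, despite no causal link
r_noise = pearson_r(noise_a, noise_b) # near zero once the confounder is removed
print(round(r_ab, 2), round(r_noise, 2))
```

A and B come out strongly correlated, yet the parts of them that do not come from the confounder are essentially uncorrelated, which is why a correlation alone cannot separate "A causes B" from "both share a cause".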

Examples

Causality or coincidence?