Developing a Web Dashboard for analyzing Amazon's Laptop sales data

- Try the App at: http://dashboard.sinfronteras.ws

- Github repository: https://github.com/adeloaleman/AmazonLaptopsDashboard

- An example of the data (JSON file) we have scraped from Amazon: Media:AmazonLaptopReviews.json

- This report in a PDF file:

- A short presentation:

Contents

- 1 Introduction

- 2 Project rationale and business value

- 3 Development process

- 4 Cloud Deployment

- 5 Conclusion

- 6 References

Introduction

When I started thinking about this project, the only clear point was that I wanted to work in Data Analysis using Python. I had already gained some experience in this field during the final project of my BSc in IT, working on a Supervised Machine Learning model for Fake News Detection. So, this time, I had a clearer idea of the scope of data analysis and related fields, and of the kind of project I was looking to work on. In addition to data analysis, I'm also interested in Web development. Therefore, in this project, I wanted to give significant weight to web development alongside Data Analysis. In this context, I came up with the idea of developing a Web Dashboard for analyzing Amazon Laptop Reviews.

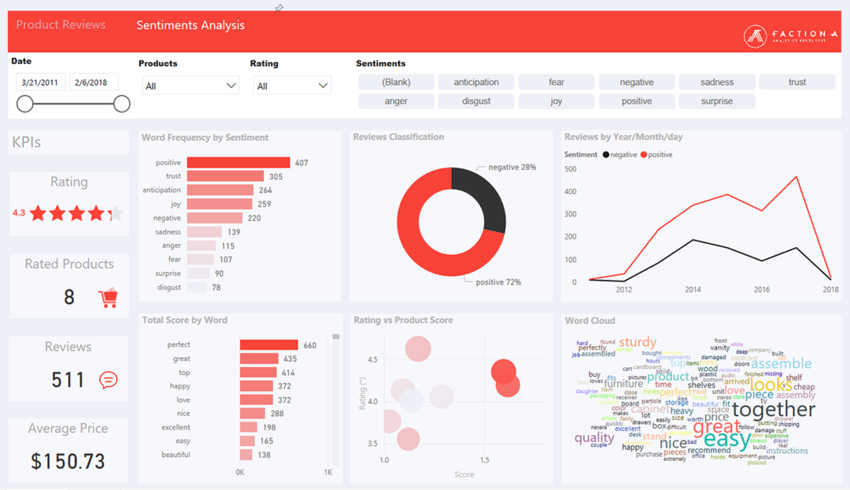

In a general sense, "a Data Dashboard is an information management tool that visually tracks, analyzes and displays key performance indicators (KPI), metrics and key data points to monitor the health of a business or specific process" [1]. That is not a bad definition to describe the application we are building. We just need to highlight that our Dashboard displays information about Laptop sales data from Amazon (customer reviews, in particular).

We should mention that the initial proposal was to develop a Dashboard for analyzing a wider range of products from Amazon (not only Laptops). However, because we had limited human resources and time for this project, we were forced to reduce the scope of the application. This way, we made sure that we would be able to develop a functional Dashboard within the timeframe provided.

It is also important to highlight that the visual appeal of the application is being considered an essential aspect of the development process. We are aware that this is currently a very important feature of a web application so we are taking the necessary time to make sure we develop a Dashboard with visual appeal and decent web design.

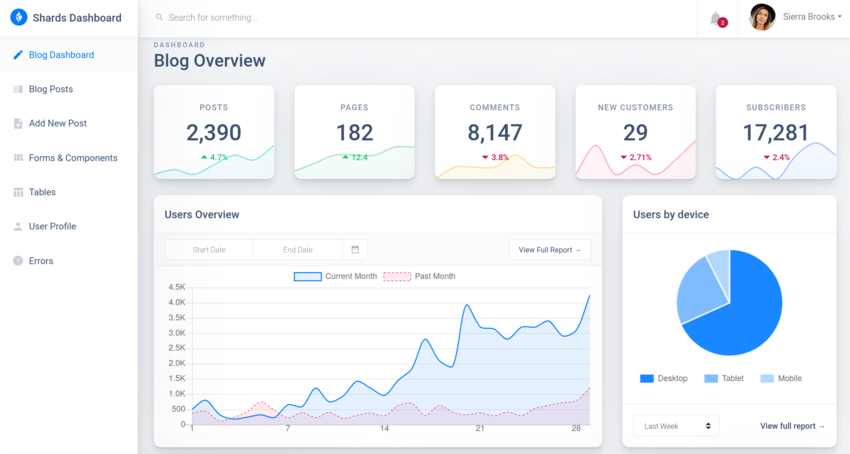

Some examples of the kind of Application we are developing:

- This is a very similar application to the one we are building. Our initial requirements and prototype were based on some of the components shown in this application.

This is an example of the kind of Dashboard we are building [2]

https://www.youtube.com/watch?v=R5HkXyAUUII

- This dashboard doesn't have a similar function to the dashboard we are building. However, it is a good example of the design and visual appeal of the application we aim to build.

At this point, a version 1.0 of the application has already been built and deployed. It is currently running for testing purposes at http://dashboard.sinfronteras.ws

You can also access the source code and download it from our Github repository at http://github.com/adeloaleman/AmazonLaptopsDashboard

The content of this report is organized in the following way:

- We start by giving some arguments that justify the business value of this kind of application. We understand that the scope of our Dashboard is limited because we are only considering Laptop data, but we try to explain in a broad sense, the advantage of analyzing retail sales data in order to enhance a commercial strategy.

- In the Development process section, we go through the different phases of the development process. The goal is to make clear the general architecture of the application, the technologies we have used and the reasons for the decisions made. Only some portions of the code will be explained; we think there is no point in explaining every programming detail of hundreds of lines of code.

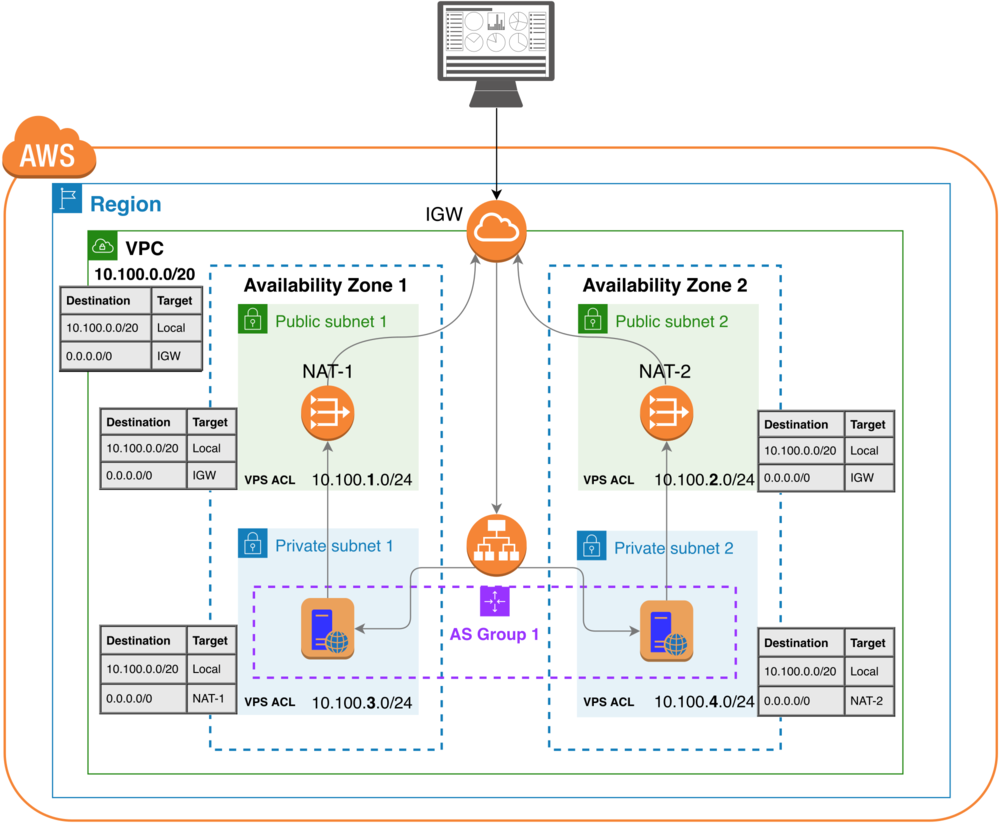

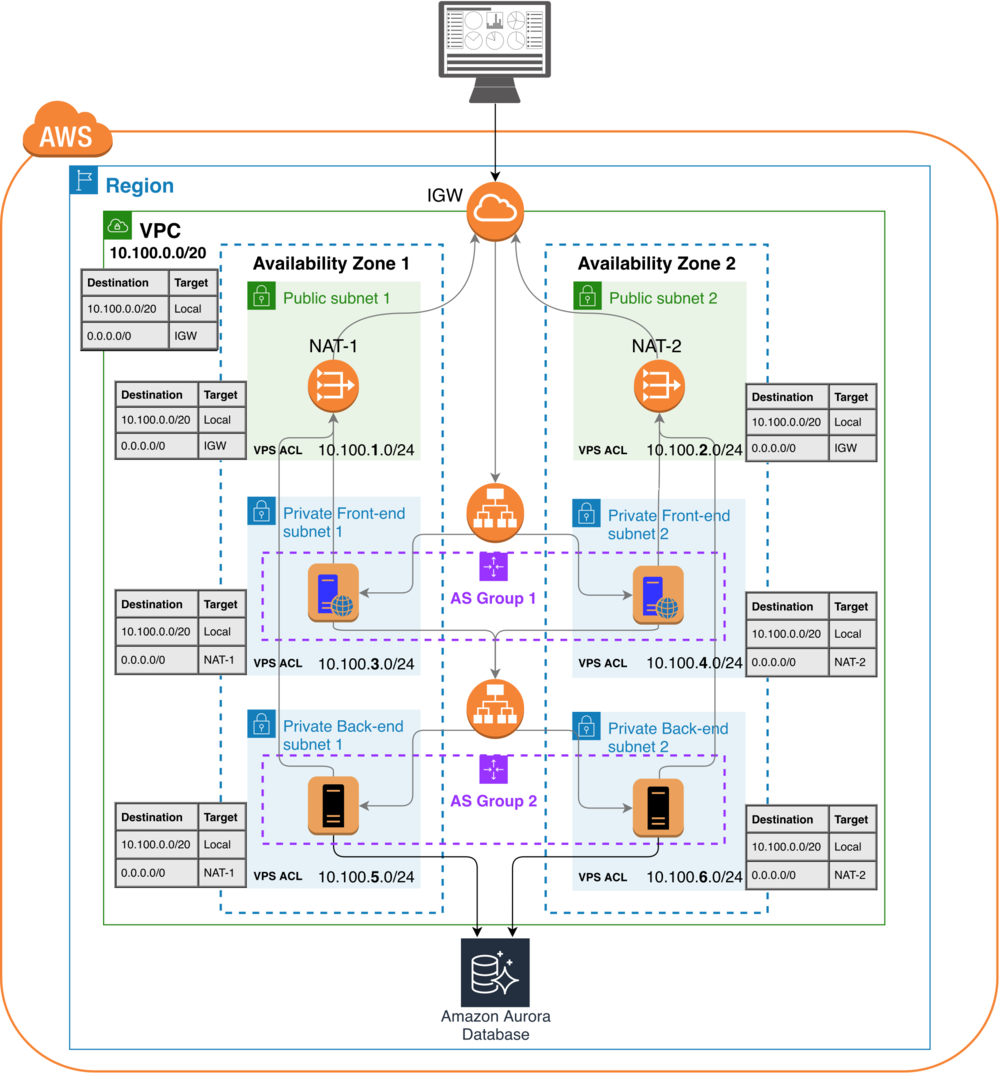

- We finally describe the process followed to deploy the application in the cloud. In this chapter, we explain the current deployment architecture, in which the back-end and front-end run on the same server, and we also present a three-tier architecture for high availability and strict security policies that has been designed for future cloud deployment of the Dashboard.

Project rationale and business value

In marketing and business intelligence, it is crucial to know what customers think about target products. Customer opinions, reviews, or any data that reflect the experience of the customer represent important information that companies can use to enhance their marketing and business strategies.

Marketing and business intelligence professionals also need a practical way to display and summarize the most important aspects and key indicators of the dynamics of the target market. But what do we mean by the dynamics of the market? We use this term to refer to the most important information that business professionals require to understand the market and thus be able to make decisions that seek to improve the revenue of the company.

Now, let's explain with a practical example which kind of information business professionals need to know about a target product or market. Suppose that you are a Business Intelligence Manager working for an IT company (Lenovo, for example). The company is looking to improve its Laptop sales strategy. So, which information do you need in order to make key decisions about the tech specs and features that the new generation of Laptops should have to become a more attractive product in the market? You would need to analyze, for instance:

- Which are the best-selling Laptops?

- Are Lenovo Laptops in a good position in the market?

- Who are the top Lenovo competitors in the industry?

- What are the key features that customers take into consideration when buying a Laptop?

- What are the key tech specs that customers like (and don't like) about Lenovo and competitors' Laptops?

- How much are customers willing to pay for a Laptop?

Those are just some examples of the information a business intelligence professional needs to know when looking for the best strategy. Let's say that, after analyzing the data, you found that the top-selling Laptops are actually the most expensive ones: Laptops with high-quality tech specs and performance. You also found that Lenovo Laptops are, in general, below the price range and tech-spec quality of the top-selling Laptops.

With the above information, a logical strategy could be to invest in an action plan to improve the tech specs and general quality of Lenovo Laptops. If, on the contrary, the information highlights that very expensive Laptops have a very low demand, an intelligent approach could be a strategy to reduce the cost of the new generation of Laptops.

So, we have already seen the importance of analyzing relevant data to understand the dynamics of the market when looking to enhance the business strategy. Now, from where and how can we get the necessary data to perform a market analysis for a business plan?

This kind of data can be collected by requesting information directly from retailers. For example, if you have access to a detailed Annual Report for Sales & Marketing of a computer retailer, you will have the kind of information that can be valuable to understand the dynamics of the market. This report could contain details about the best-selling computers, prices, tech specs, revenues, etc. However, a sales annual report would lack detailed information about what customers think of the product they bought. Traditionally, this kind of data has been collected using methods such as face-to-face or telephone surveys.

Recently, the huge amount of data generated every day on social networks and online retailers is being used to perform analyses that allow us to gain a better understanding of the market and, in particular, of customer opinions. This method is becoming a more effective, practical, and cheaper way of gathering this kind of information compared to traditional methods.

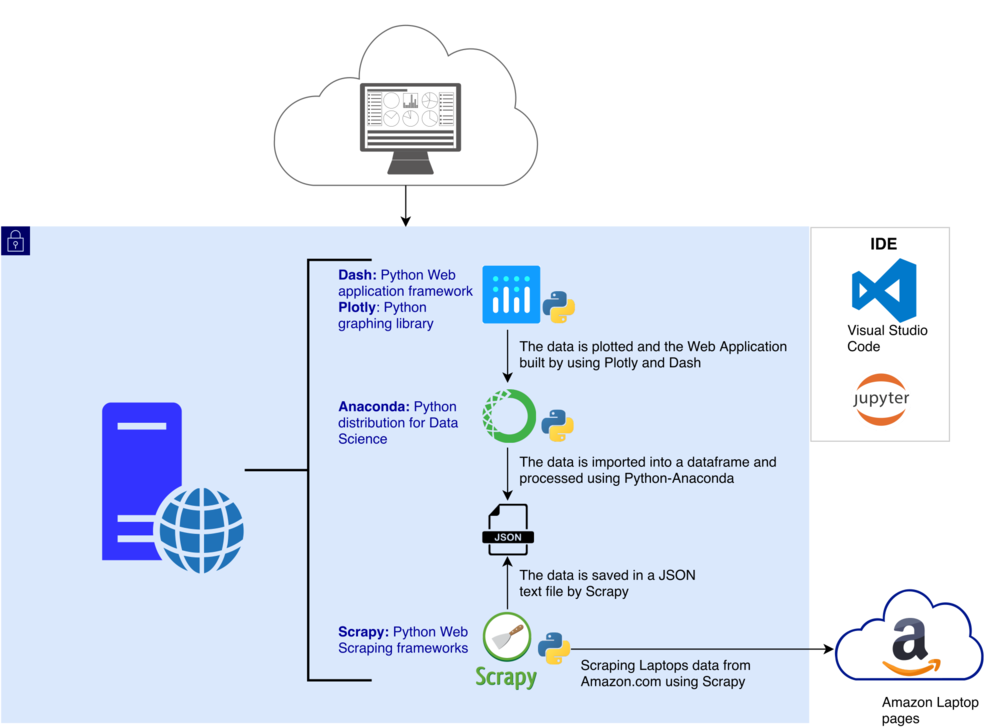

Development process

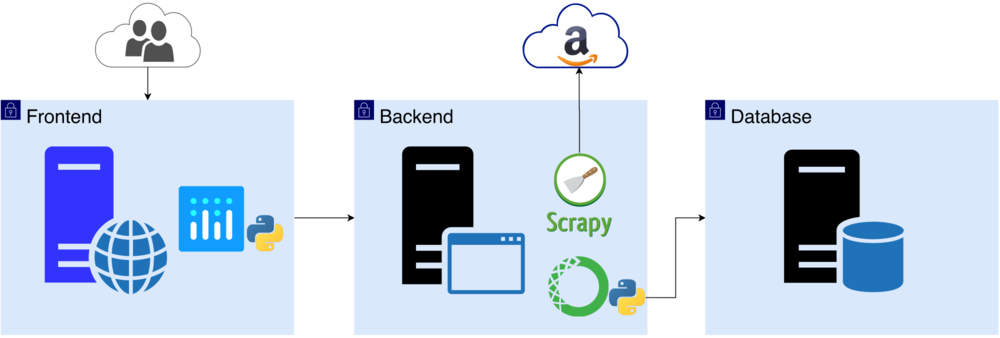

This is the current implementation. All the components are running on the same server. However, the goal is to implement a 3-Tier Architecture as shown in Figure 4.

Back-end

Scraping data from Amazon

We first need to get the data that we want to display and analyze in the Dashboard.

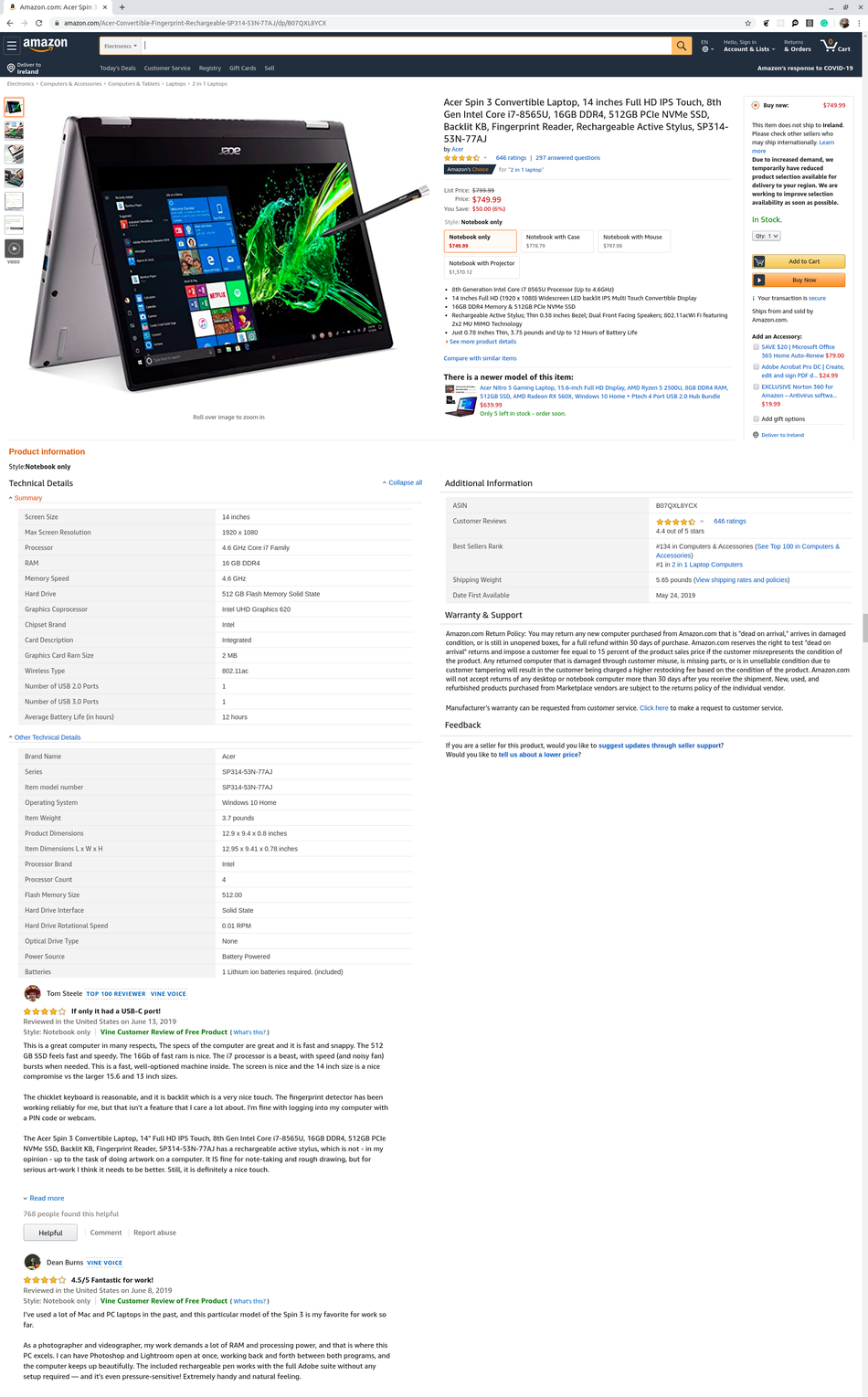

As we have already mentioned, we want to extract data related to laptops for sale from www.amazon.com. The goal is to collect the details of about 100 Laptops from different brands and models and save this data as a JSON text file.

To better explain which information we need, Figure 5 shows a Laptop sale page from https://www.amazon.com/A4-53N-77AJ/dp/B07QXL8YCX. From that webpage, we need to extract the following information:

The information falls into three groups: main details (such as the "ASIN", "Price" and "Average customer reviews"), tech details, and reviews.

The process of extracting data from websites is commonly known as web scraping [4]. Web scraping software can be used to automatically extract large amounts of data from a website and save the data for later processing. [5]

In this project, we are using Scrapy as our web scraping solution. It is one of the most popular Python web scraping frameworks. According to its official documentation, "Scrapy is a fast high-level web crawling and web scraping framework used to crawl websites and extract structured data from their pages" [6]

The Code Snippet included in Figure 6 shows an example of Scrapy Python code. This is a portion of the program we built to extract Laptop data from www.amazon.com. The goal of this report is not to explain all the technical details of the code; that would require very long documentation, so it is better to consult the official Scrapy documentation for more details. [7]

You can access the source code from our Github repository at

https://github.com/adeloaleman/AmazonLaptopsDashboard/blob/master/AmazonScrapy/AmazonScrapy/spiders/amazonSpider.py

Web scraping can become a complex task when the information we want to extract is spread over more than one page. For example, in our project, the most important data we need is customer reviews. Because a Laptop on Amazon can have a very large number of reviews, they cannot be displayed on a single page and are instead split across a set of similar pages.

Let's explain the process and some of the complexities of extracting Laptop data from Amazon.com:

- The following is the link to the base Amazon laptops page. This page displays the first group of 24 Laptops available at Amazon.com, including all brands and features: https://www.amazon.com/Laptops-Computers-Tablets/s?rh=n%3A565108&page=1

- If you want to keep browsing Laptops, you need to click the «Next Page» link at the bottom of the page, which will bring you to the next group of 24 Laptops. However, instead of clicking the «Next Page» link, you could also enter the following URL in your web browser: https://www.amazon.com/Laptops-Computers-Tablets/s?rh=n%3A565108&page=2

- Then, to go to the third group of 24 Laptops, you can use the same address with a 3 at the end: https://www.amazon.com/Laptops-Computers-Tablets/s?rh=n%3A565108&page=3

- So, to extract information from a large number of Laptops, we have to scrape a sequence of similar pages.

- Most of the information we want to extract from each Laptop will be on the Laptop main page. As we saw in Figure 5, we can get the "ASIN", "Price", "Average customer reviews" and other details from this main page.

- However, customer reviews are too extensive to be displayed on a single page; they are spread over a sequence of pages linked to the main Laptop page. So, in the case of our example Laptop page (Figure 5), customer reviews are displayed on these pages:

- ...

    import scrapy


    class QuotesSpider(scrapy.Spider):
        name = "amazon_links"
        start_urls = []

        # Link to the base Amazon laptops page. This page displays the first group of Laptops
        # available at Amazon.com including all brands and features:
        myBaseUrl = 'https://www.amazon.com/Laptops-Computers-Tablets/s?rh=n%3A565108&page='
        for i in range(1, 3):
            start_urls.append(myBaseUrl + str(i))

        def parse(self, response):
            # Collect every product link on the listing page and keep the selected brands
            data = response.css("a.a-text-normal::attr(href)").getall()
            links = [s for s in data if "Lenovo-" in s
                     or "LENOVO-" in s
                     or "Hp-" in s
                     or "HP-" in s
                     or "Acer-" in s
                     or "ACER-" in s
                     or "Dell-" in s
                     or "DELL-" in s
                     or "Samsung-" in s
                     or "SAMSUNG-" in s
                     or "Asus-" in s
                     or "ASUS-" in s
                     or "Toshiba-" in s
                     or "TOSHIBA-" in s
                     ]
            # Remove duplicates (keeping order) and links to page anchors
            links = list(dict.fromkeys(links))
            links = [s for s in links if "#customerReviews" not in s]
            links = [s for s in links if "#productPromotions" not in s]
            for i in range(len(links)):
                links[i] = response.urljoin(links[i])
                yield response.follow(links[i], self.parse_compDetails)

        def parse_compDetails(self, response):
            def extract_with_css(query):
                return response.css(query).get(default='').strip()

            price = response.css("#priceblock_ourprice::text").get()

            # Main details: ASIN and average customer reviews
            product_details_table = response.css("#productDetails_detailBullets_sections1")
            product_details_values = product_details_table.css("td.a-size-base::text").getall()
            k = []
            for i in product_details_values:
                i = i.strip()
                k.append(i)
            product_details_values = k
            ASIN = product_details_values[0]
            average_customer_reviews = product_details_values[4]

            # Number of reviews and ratings, and the link to the reviews pages
            number_reviews_div = response.css("#reviews-medley-footer")
            number_reviews_ratings_str = number_reviews_div.css("div.a-box-inner::text").get()
            number_reviews_ratings_str = number_reviews_ratings_str.replace(',', '')
            number_reviews_ratings_str = number_reviews_ratings_str.replace('.', '')
            number_reviews_ratings_list = [int(s) for s in number_reviews_ratings_str.split() if s.isdigit()]
            number_reviews = number_reviews_ratings_list[0]
            number_ratings = number_reviews_ratings_list[1]
            reviews_link = number_reviews_div.css("a.a-text-bold::attr(href)").get()
            reviews_link = response.urljoin(reviews_link)

            # Tech details, first table
            tech_details1_table = response.css("#productDetails_techSpec_section_1")
            tech_details1_keys = tech_details1_table.css("th.prodDetSectionEntry")
            tech_details1_values = tech_details1_table.css("td.a-size-base")
            tech_details1 = {}
            for i in range(len(tech_details1_keys)):
                text_keys = tech_details1_keys[i].css("::text").get()
                text_values = tech_details1_values[i].css("::text").get()
                text_keys = text_keys.strip()
                text_values = text_values.strip()
                tech_details1[text_keys] = text_values

            # Tech details, second table
            tech_details2_table = response.css("#productDetails_techSpec_section_2")
            tech_details2_keys = tech_details2_table.css("th.prodDetSectionEntry")
            tech_details2_values = tech_details2_table.css("td.a-size-base")
            tech_details2 = {}
            for i in range(len(tech_details2_keys)):
                text_keys = tech_details2_keys[i].css("::text").get()
                text_values = tech_details2_values[i].css("::text").get()
                text_keys = text_keys.strip()
                text_values = text_values.strip()
                tech_details2[text_keys] = text_values

            tech_details = {**tech_details1, **tech_details2}

            reviews = []

            # Follow the reviews link, passing along the details collected so far.
            # The parse_reviews callback is not part of this excerpt.
            yield response.follow(reviews_link,
                                  self.parse_reviews,
                                  meta={
                                      'url': response.request.url,
                                      'ASIN': ASIN,
                                      'price': price,
                                      'average_customer_reviews': average_customer_reviews,
                                      'number_reviews': number_reviews,
                                      'number_ratings': number_ratings,
                                      'tech_details': tech_details,
                                      'reviews_link': reviews_link,
                                      'reviews': reviews,
                                  })

Data Analytics

- Loading the data

- Data Preparation and Text pre-processing

- Creating new columns to facilitate handling of customer reviews and tech details

- Modifying the format and data type of some columns

- Removing punctuation and stopwords

- Data visualization

- Avg. customer reviews & Avg. price bar charts

- Avg. customer reviews Vs. Price bubble chart

- Customer reviews word cloud

- Word count bar chart of customer reviews

- Sentiment analysis

Loading the data

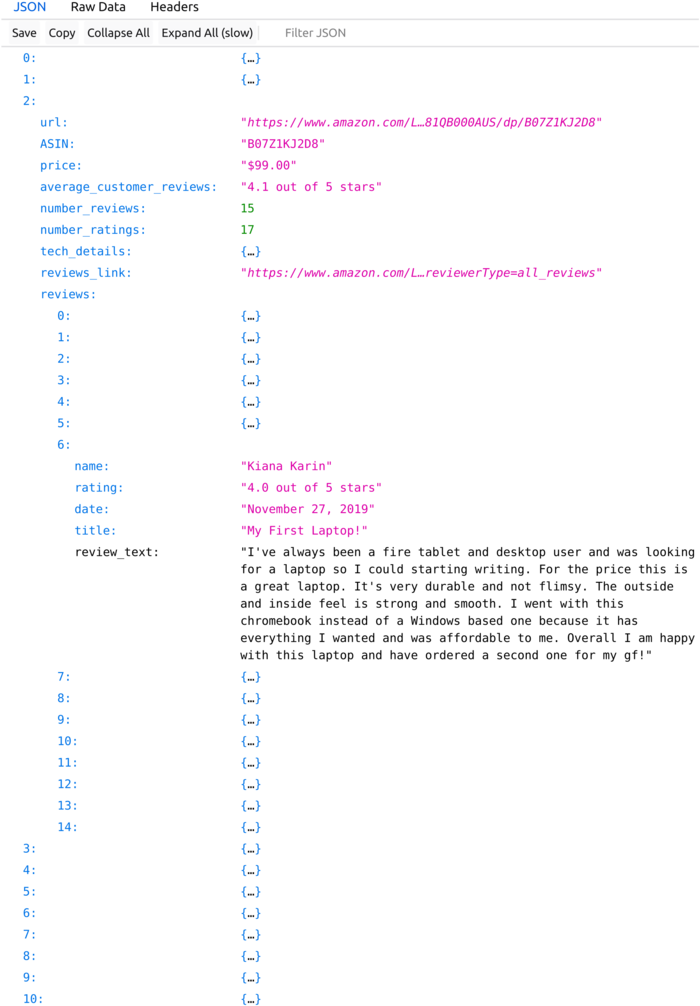

After extracting the data from Amazon using Scrapy, we stored it in a simple JSON text file (shown in the figure above). Here we import the data from the JSON text file into a pandas dataframe:
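A minimal sketch of this loading step, assuming the scraper wrote a single JSON array with one object per laptop. The file name, the sample values and the exact field set are illustrative assumptions, not taken from the project:

```python
import json
import tempfile
from pathlib import Path

import pandas as pd

# Hypothetical sample mimicking the scraped structure (one object per laptop).
sample = [
    {"ASIN": "B000000001", "price": "$689.90",
     "average_customer_reviews": "4.5 out of 5 stars",
     "reviews": [{"title": "Great", "text": "Fast and light"}]},
    {"ASIN": "B000000002", "price": "$1,199.00",
     "average_customer_reviews": "3.9 out of 5 stars",
     "reviews": []},
]

# Write the sample to a temporary file so the example is self-contained.
json_path = Path(tempfile.mkdtemp()) / "laptops.json"
json_path.write_text(json.dumps(sample), encoding="utf-8")

# Load the JSON text file and build a dataframe with one row per laptop.
with open(json_path, encoding="utf-8") as f:
    records = json.load(f)
df = pd.DataFrame(records)

print(df[["ASIN", "price"]])
```

Nested values such as the list of reviews are kept as Python objects in their column, which is why the following steps unpack them into separate columns.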

Data Preparation and Text pre-processing

In the appendices, we have included the Python code used for Data Preparation and Text pre-processing. Below, we explain step by step the process followed to prepare the data.

Creating new columns to facilitate handling of customer reviews and tech details:

After loading the data from the JSON file, every "review" entry is a dictionary type value that is composed of several fields: customer name, rating, date, title, and the text of the review itself.

Here we extract the relevant details (title and the text of the review itself) and create 3 new columns to facilitate the handling of the "review" entries. We create the following columns: "reviews_title", "reviews_text" and "reviews_one_string":
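This step can be sketched as follows. The dictionary keys ("customer_name", "rating", "date", "title", "text") and the sample rows are assumptions made for illustration; only the three new column names come from the description above:

```python
import pandas as pd

# Hypothetical rows: each "reviews" entry is a list of review dictionaries.
df = pd.DataFrame({
    "ASIN": ["B000000001", "B000000002"],
    "reviews": [
        [{"customer_name": "Ann", "rating": "5.0", "date": "2020-01-02",
          "title": "Great value", "text": "Fast and light."},
         {"customer_name": "Bob", "rating": "2.0", "date": "2020-02-03",
          "title": "Too noisy", "text": "The fan is loud."}],
        [],  # a laptop without reviews
    ],
})

# One list of titles and one list of texts per laptop.
df["reviews_title"] = df["reviews"].apply(lambda revs: [r["title"] for r in revs])
df["reviews_text"] = df["reviews"].apply(lambda revs: [r["text"] for r in revs])

# All titles and texts of a laptop concatenated into a single string.
df["reviews_one_string"] = df["reviews"].apply(
    lambda revs: " ".join(f'{r["title"]} {r["text"]}' for r in revs)
)

print(df.loc[0, "reviews_one_string"])
```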


After loading the data from the JSON file, all technical details are in a dictionary type entry. In the following block, we extract the tech details that are important for our analysis ("series" and "model_number") and create a new column for each of them:
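A possible sketch of this extraction. The keys used inside the tech details dictionary ("Series", "Item model number") and the sample values are assumptions; the real keys depend on how Amazon labels each table row:

```python
import pandas as pd

# Hypothetical rows: "tech_details" holds one dictionary per laptop.
df = pd.DataFrame({
    "tech_details": [
        {"Series": "Aspire 5", "Item model number": "A515-54-51DJ", "Color": "Silver"},
        {"Brand Name": "Lenovo"},  # series and model number missing on this page
    ],
})

# dict.get returns None when a laptop page lacks the key, so missing
# details do not raise a KeyError.
df["series"] = df["tech_details"].apply(lambda d: d.get("Series"))
df["model_number"] = df["tech_details"].apply(lambda d: d.get("Item model number"))

print(df[["series", "model_number"]])
```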


Modifying the format and data type of some columns:

Here we make sure that the first character of the brand name is uppercase and remaining characters lowercase. This is important because we are going to perform filtering and searching function using the brand name so we need to make sure the writing is consistent:
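One way to do this with pandas string methods, assuming the brand is stored in a column named "brand" (the column name is an assumption):

```python
import pandas as pd

# Hypothetical brand values with inconsistent capitalization.
df = pd.DataFrame({"brand": ["LENOVO", "lenovo", "Acer", "aSUS"]})

# capitalize() makes the first character uppercase and the rest lowercase.
df["brand"] = df["brand"].str.strip().str.capitalize()

print(df["brand"].tolist())
```

With this rule a brand such as "HP" becomes "Hp", which is consistent as long as every filter and search uses the same normalized form.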


After extracting the data from the web page, the numeric values ("average_customer_reviews" and "price") are actually of "string" type, so we need to convert them to a numeric type (float). This is necessary because we will perform mathematical operations with these values. The following function takes a numeric string (<class 'str'>), removes any comma or dollar characters ("," "$") and returns a numeric float value (<class 'float'>):


A raw "average_customer_reviews" entry looks like this: "4.5 out of 5 stars" (<class 'str'>). We only need the first value as a numeric float type: 4.5 (<class 'float'>).

This is done in the next line of code over the entire dataframe by selecting only the first element ("4.5" in the above example) and applying the "format_cleaner()" function to the "average_customer_reviews" column:

A raw "price" entry looks like this: "$689.90" (<class 'str'>). We only need the numeric value: 689.90 (<class 'float'>). This is done in the next line of code over the entire dataframe by applying the "format_cleaner()" function to the "price" column:
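The conversion described above can be sketched as follows. The function name "format_cleaner()" and the two column names come from the text; the body of the function and the sample rows are an assumed reconstruction:

```python
import pandas as pd


def format_cleaner(value: str) -> float:
    """Remove commas and dollar signs from a numeric string and return a float."""
    return float(value.replace(",", "").replace("$", "").strip())


# Hypothetical raw values as they come out of the scraper.
df = pd.DataFrame({
    "average_customer_reviews": ["4.5 out of 5 stars", "3.9 out of 5 stars"],
    "price": ["$689.90", "$1,199.00"],
})

# Keep only the first token ("4.5") of the rating string, then convert it.
df["average_customer_reviews"] = (
    df["average_customer_reviews"].str.split().str[0].apply(format_cleaner)
)

# Prices only need the "$" and "," characters removed.
df["price"] = df["price"].apply(format_cleaner)

print(df.dtypes)
```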


Removing punctuation and stopwords:

- Punctuation: We remove every punctuation character defined in the Python string library (string.punctuation).

- Our stopwords will be composed by:

- The common stopwords defined in the nltk library

- Some particular stopwords related to our data:

- Brand names: There is no point in analyzing brand names. For instance, in a Lenovo review, the customer will use the word "Lenovo" many times, but this fact does not contribute anything to the analysis.

- Laptop synonyms: laptop, computer, machine, etc.

- Some non-standard contractions that are not in the nltk library: Im, don't, Ive, etc.

Defining our stopwords list:

The following function takes a string and returns the same string without punctuation or stopwords:

Example of applying the "pre_processing()" function:

Here we apply the "pre_processing()" function to the "reviews_one_string" column over the entire dataframe:
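The stopword list, the "pre_processing()" function and its application to the dataframe could look like the sketch below. To keep the example runnable without downloading the nltk corpus, a short hard-coded set stands in for nltk.corpus.stopwords.words("english"). Lowercasing the text before matching is also an assumption of this sketch:

```python
import string

import pandas as pd

# Stand-in for nltk.corpus.stopwords.words("english") (assumption: shortened
# here so the sketch runs without the nltk data files).
common_stopwords = {"i", "the", "a", "an", "is", "it", "this", "and",
                    "to", "of", "for", "had", "very"}
brand_names = {"lenovo", "hp", "acer", "dell", "samsung", "asus", "toshiba"}
laptop_synonyms = {"laptop", "computer", "machine"}
contractions = {"im", "dont", "ive"}
stopwords = common_stopwords | brand_names | laptop_synonyms | contractions


def pre_processing(text: str) -> str:
    """Return the text lowercased, without punctuation or stopwords."""
    # Delete every character listed in string.punctuation.
    no_punct = text.translate(str.maketrans("", "", string.punctuation))
    words = [w for w in no_punct.lower().split() if w not in stopwords]
    return " ".join(words)


# Example on a single string.
print(pre_processing("I've had this Lenovo laptop for a month, and it is very fast!"))

# Applying the function to the whole "reviews_one_string" column.
df = pd.DataFrame({"reviews_one_string": ["Great screen!", "The fan is loud."]})
df["reviews_one_string"] = df["reviews_one_string"].apply(pre_processing)
```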

At this point, the data is ready for visualization at the front-end.

Data visualization

As we mentioned, this version 1.0 hasn't been clearly separated into a 3-tier architecture yet. At this point, everything is running on one server. However, the analysis has always been designed with the goal of deploying the application in a 3-tier architecture.

Regarding chart building for data visualization, it has been particularly challenging to define the tier of the application architecture (back-end or front-end) in which chart building must be placed.

Although it is clear that the charts themselves are part of the front-end, there is usually a data processing step, linked with graphing, that could be placed at the back-end.

This is why we prefer to explain the programming details related to data visualization in this section. However, other data visualization aspects related to the same charts explained in this section will be treated in the Front-end section.

Avg customer reviews vs Avg price bar charts

We will only talk about the code to generate the charts in this section. We will explain why we have included these charts in the application and discuss other analytical aspects in the Front-end section.
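As an illustration of the split between data processing and chart building, the sketch below aggregates the data with pandas and then describes each bar chart as a plain Plotly figure dictionary. Grouping by brand, the sample values and the helper name "bar_figure" are assumptions, not the project's actual code:

```python
import pandas as pd

# Hypothetical cleaned data (ratings and prices already converted to float).
df = pd.DataFrame({
    "brand": ["Lenovo", "Lenovo", "Acer", "Asus"],
    "average_customer_reviews": [4.5, 3.5, 4.0, 4.2],
    "price": [700.0, 500.0, 450.0, 900.0],
})

# Data processing step: average rating and average price per brand.
by_brand = df.groupby("brand")[["average_customer_reviews", "price"]].mean()


def bar_figure(series: pd.Series, title: str) -> dict:
    """Build a Plotly figure specification (plain dict) for one bar chart."""
    return {
        "data": [{"type": "bar", "x": series.index.tolist(), "y": series.tolist()}],
        "layout": {"title": {"text": title}},
    }


# Chart building step: one figure per indicator.
reviews_fig = bar_figure(by_brand["average_customer_reviews"], "Avg. customer reviews by brand")
price_fig = bar_figure(by_brand["price"], "Avg. price by brand")
```

A dictionary of this shape can be passed to plotly.graph_objects.Figure or used as the figure property of a Dash dcc.Graph component.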


Avg customer reviews vs Price bubble chart
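A bubble chart of this kind can be described as a Plotly scatter trace drawn with markers. In the sketch below, using the number of reviews as the bubble size and the sample values are assumptions; the "sizeref" formula is the scaling rule given in the Plotly bubble chart documentation:

```python
import pandas as pd

# Hypothetical cleaned data, one row per laptop series.
df = pd.DataFrame({
    "brand": ["Lenovo", "Acer", "Asus"],
    "price": [600.0, 450.0, 900.0],
    "average_customer_reviews": [4.0, 4.1, 4.4],
    "number_reviews": [120, 30, 60],
})

sizes = df["number_reviews"].tolist()
# Scale factor so that the largest bubble is drawn about 40 px wide.
sizeref = 2.0 * max(sizes) / (40.0 ** 2)

bubble_fig = {
    "data": [{
        "type": "scatter",
        "mode": "markers",
        "x": df["price"].tolist(),
        "y": df["average_customer_reviews"].tolist(),
        "text": df["brand"].tolist(),  # shown when hovering over a bubble
        "marker": {"size": sizes, "sizemode": "area", "sizeref": sizeref},
    }],
    "layout": {
        "xaxis": {"title": {"text": "Price (USD)"}},
        "yaxis": {"title": {"text": "Avg. customer reviews"}},
    },
}
```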


Customer reviews word cloud


Word count bar chart of customer reviews
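The data behind a word count bar chart can be obtained by counting words in the pre-processed review strings. This is a minimal sketch with hypothetical review strings; the number of words kept for the chart is also an assumption:

```python
from collections import Counter

# Hypothetical pre-processed review strings (punctuation and stopwords removed).
reviews = ["month fast screen", "screen bright battery", "battery weak screen"]

# Count every word across all reviews.
counts = Counter(word for review in reviews for word in review.split())

# Keep the most frequent words for the chart.
top_words = counts.most_common(2)

word_count_fig = {
    "data": [{
        "type": "bar",
        "x": [word for word, _ in top_words],
        "y": [count for _, count in top_words],
    }],
    "layout": {"title": {"text": "Most frequent words in customer reviews"}},
}

print(top_words)
```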


Sentiment analysis

We regret not having achieved this point on time to be included in this version. Although we have already started some tests, we don’t have enough material yet to include some progress about it in this report. We want to make it clear that we are currently working on this point.

Front-end

To develop the front-end we have used a Python framework called Dash. This relatively new framework is open source and is "built on top of Flask, Plotly.js, and React. It enables you to build dashboards using pure Python". [8]

According to its official documentation, "Dash is ideal for building data visualization apps. It's particularly suited for anyone who works with data in Python" [9]

Dash is designed to integrate plots built with the graphing library Plotly. This is an open-source library that allows us to make interactive, publication-quality graphs. So all the charts we integrate into our web application are built with Plotly [10]

Basically, we have followed the official documentation of both technologies, Dash and Plotly, to gain the necessary technical skills to carry out this project: [9] and [10]

We think that there is no point in explaining the code we have written for this part. The code is so long that we consider it unnecessary to spend so many pages and so much time on a technical explanation. We think that it is more important to explain the rationale behind the decisions we made when defining the design and elements used in the development process.

The accompanying figures show three views of the application front-end.

You can also access the source code and download it from our Github repository at http://github.com/adeloaleman/AmazonLaptopsDashboard

In the following sections, we describe the main features and elements of the front-end development process.

Some main features about the front-end design

Home page

Brand selection, Series selection and Price panels

Avg. customer reviews vs. Prices panel

Customer reviews Word cloud and Word count bar chart

Modules under construction

Cloud Deployment

Figure 8: Cloud design for high availability and security. This is the latest deployment of the Dashboard

Conclusion

References

- [1] "What is a data dashboard?". Klipfolio.

- [2] "Faction A - Sentiment Analysis Dashboard". Microsoft.

- [3] "Shards dashboard - Demo". Designrevision.

- [4] "Web scraping". Wikipedia.

- [5] "Scrapy". Wikipedia.

- [6] "Official Scrapy website". Scrapy.

- [7] "Scrapy 2.1 documentation". Scrapy documentation.

- [8] "Dash for Beginners". Datacamp. 2019.

- [9] "Introduction to Dash". Dash documentation.

- [10] "Plotly Python Open Source Graphing Library". Plotly documentation.

- [11] Ellingwood, Justin (May 2016). "How To Serve Flask Applications with Gunicorn and Nginx on Ubuntu 16.04". Digitalocean.

- [12] "Failed to find application object 'server' in 'app'". Plotly community. 2018.

- [13] Chesneau, Benoit. "Deploying Gunicorn". Gunicorn documentation.

- [14] "Standalone WSGI Containers". Pallets documentation.

- [15] "Deploying Dash Apps". Dash documentation.

- [16] "Dropdown Examples and Reference". Dash documentation.

- [17] Ka Hou, Sio (2019). "Bootstrap Navigation Bar". towardsdatascience.com.

- [18] "4 Áreas con importantes datos por analizar". UCOM - Universidad comunera. 2019.

- [19] "Bootstrap Navigation Bar". W3schools documentation.

- [20] Carol Britton and Jill Doake (2005). "A Student Guide to Object-Oriented Development".

- [21] "Create Charts & Diagrams Online". Lucidchart.

- [22] "Upcube dashboard - Demo". themesdesign.

- [23] "W3.CSS Sidebar". W3schools documentation.

- [24] "Sidebar code example". W3schools documentation.

- [25] "Navbar". Bootstrap documentation.

- [26] "Bubble Charts in Python". Plotly documentation.

- [27] "Bar Charts in Python". Plotly documentation.