Data Science

- Media:Exploration_of_the_Darts_dataset_using_statistics.pdf

- Media:Exploration_of_the_Darts_datase_using_statistics.zip

Data Science and Machine Learning Courses

- Posts

- Udemy: https://www.udemy.com/

- Python for Data Science and Machine Learning Bootcamp - Basic level

- Machine Learning, Data Science and Deep Learning with Python - Basic level - Similar to the previous one

- Data Science: Supervised Machine Learning in Python - Higher level

- Mathematical Foundation For Machine Learning and AI

- The Data Science Course 2019: Complete Data Science Bootcamp

- Coursera - By Stanford University

- Udacity: https://eu.udacity.com/

- Columbia University - COURSE FEES USD 1,400

Mathematics and Statistics for Data Science

Data Mining - Machine Learning Algorithms

Boosting

Gradient boosting

https://medium.com/mlreview/gradient-boosting-from-scratch-1e317ae4587d

https://freakonometrics.hypotheses.org/tag/gradient-boosting

https://en.wikipedia.org/wiki/Gradient_boosting

http://arogozhnikov.github.io/2016/06/24/gradient_boosting_explained.html

Boosting is a machine learning ensemble meta-algorithm that primarily reduces bias, and also variance, in supervised learning. It is also a family of machine learning algorithms that convert weak learners into strong ones. Boosting is based on the question posed by Kearns and Valiant (1988, 1989): "Can a set of weak learners create a single strong learner?" A weak learner is defined as a classifier that is only slightly correlated with the true classification (it can label examples better than random guessing). In contrast, a strong learner is a classifier that is arbitrarily well correlated with the true classification. https://en.wikipedia.org/wiki/Gradient_boosting

Gradient boosting is a machine learning technique for regression and classification problems, which produces a prediction model in the form of an ensemble of weak prediction models, typically decision trees. It builds the model in a stage-wise fashion like other boosting methods do, and it generalizes them by allowing optimization of an arbitrary differentiable loss function.
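The stage-wise idea can be illustrated with a minimal sketch, assuming squared-error loss and one-dimensional inputs. With that loss, the negative gradient is simply the residual, so each stage fits a decision stump to the current residuals and adds a scaled copy of it to the model. The function names, the learning rate, and the toy data are all assumptions made for illustration; this is not the implementation of any particular library.

```python
# Sketch: gradient boosting for 1-D regression with squared-error loss.
# Weak learner: a decision stump (one threshold, two constant outputs).
# All names and the toy data below are illustrative assumptions.

def fit_stump(xs, targets):
    """Return (threshold, left_value, right_value) with the lowest squared error."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        if not left or not right:
            continue  # a split must leave points on both sides
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    if best is None:  # all x values identical: fall back to a constant
        mean = sum(targets) / len(targets)
        return (xs[0], mean, mean)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

def gradient_boost(xs, ys, n_stages=50, learning_rate=0.1):
    """Build the model stage by stage; returns (base, learning_rate, stumps)."""
    base = sum(ys) / len(ys)          # stage 0: constant prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_stages):
        # Negative gradient of 1/2 * (y - F(x))^2 with respect to F(x)
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)

def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical toy data: three noisy plateaus
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 5.1, 5.0, 4.9]

for n in (0, 1, 50):
    model = gradient_boost(xs, ys, n_stages=n)
    print(n, "stages, training MSE:", round(mse(model, xs, ys), 4))
```

On this toy data the training error shrinks as stages are added. Swapping in another differentiable loss would only change how the residuals (the negative gradients) are computed.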

Boosting is a sequential process: trees are grown one after the other, each using the information from the previously grown tree. This process slowly learns from data and tries to improve its prediction in subsequent iterations. Let's look at a classic classification example:

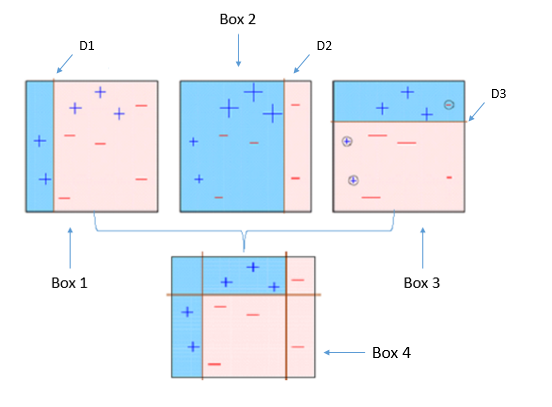

Four classifiers (in 4 boxes), shown above, are trying hard to classify + and - classes as homogeneously as possible. Let's understand this picture well:

- Box 1: The first classifier creates a vertical line (split) at D1. It says anything to the left of D1 is + and anything to the right of D1 is -. However, this classifier misclassifies three + points.

- Box 2: The next classifier says, "Don't worry, I will correct your mistakes." It therefore gives more weight to the three misclassified + points (see the bigger size of +) and creates a vertical line at D2. Again, it says anything to the right of D2 is - and anything to the left is +. Still, it makes mistakes by incorrectly classifying three - points.

- Box 3: The next classifier continues the correction. It gives more weight to the three misclassified - points and creates a horizontal line at D3. Still, this classifier fails to classify the circled points correctly.

- Remember that each of these classifiers has a misclassification error associated with it.

- Boxes 1, 2, and 3 are weak classifiers. These classifiers will now be used to create a strong classifier, Box 4.

- Box 4: It is a weighted combination of the weak classifiers. As you can see, it does a good job of classifying all the points correctly.

That's the basic idea behind boosting algorithms: each new model capitalizes on the misclassification error of the previous model and tries to reduce it.
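The reweighting scheme described in the boxes resembles AdaBoost, so a minimal AdaBoost-style sketch may help make it concrete. It assumes labels of +1 and -1 and uses axis-aligned decision stumps as the weak classifiers. The function names and the ten-point toy dataset are assumptions for illustration; they are not the points shown in the figure.

```python
import math

# Sketch: AdaBoost-style boosting with decision stumps.
# A stump is (axis, threshold, sign): predict `sign` when
# point[axis] <= threshold, and `-sign` otherwise.
# All names and the toy data are illustrative assumptions.

def stump_predict(stump, point):
    axis, threshold, sign = stump
    return sign if point[axis] <= threshold else -sign

def best_stump(points, labels, weights):
    """Return the stump with the lowest weighted error, and that error."""
    best, best_err = None, float("inf")
    for axis in range(len(points[0])):
        for threshold in sorted({p[axis] for p in points}):
            for sign in (1, -1):
                stump = (axis, threshold, sign)
                err = sum(w for p, y, w in zip(points, labels, weights)
                          if stump_predict(stump, p) != y)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err

def adaboost(points, labels, n_rounds=3):
    """Return a list of (alpha, stump) pairs, one per boosting round."""
    n = len(points)
    weights = [1.0 / n] * n                     # start with equal weights
    ensemble = []
    for _ in range(n_rounds):
        stump, err = best_stump(points, labels, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)   # avoid division by zero
        alpha = 0.5 * math.log((1 - err) / err) # say of this weak classifier
        ensemble.append((alpha, stump))
        # Increase the weight of misclassified points, decrease the rest
        weights = [w * math.exp(-alpha * y * stump_predict(stump, p))
                   for p, y, w in zip(points, labels, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, point):
    """Weighted vote of all weak classifiers (the 'Box 4' combination)."""
    score = sum(alpha * stump_predict(stump, point) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

def training_errors(ensemble, points, labels):
    return sum(predict(ensemble, p) != y for p, y in zip(points, labels))

# Hypothetical toy data: ten 1-D points that no single stump can separate
points = [(float(x),) for x in range(10)]
labels = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

for rounds in (1, 2, 3):
    ensemble = adaboost(points, labels, n_rounds=rounds)
    print(rounds, "round(s):", training_errors(ensemble, points, labels), "errors")
```

On this toy data a single stump misclassifies three points, while the weighted combination of three stumps classifies all ten correctly, mirroring the progression from Box 1 to Box 4.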

Python for Data Science

R

RapidMiner