Difference between revisions of "Data Science"

Adelo Vieira (talk | contribs) (Replaced content with "{{Sidebar}} <accesscontrol> Autoconfirmed users </accesscontrol> ==Projects portfolio== <div style="margin-left: 20px; width: 550pt; margin-top: 50px !important"> <ul> {{...") (Tag: Replaced) |

Adelo Vieira (talk | contribs) |

||

| Line 306: | Line 306: | ||

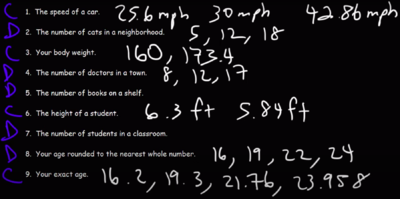

===Data Levels and Measurement=== | ===Data Levels and Measurement=== | ||

| − | Levels of | + | Levels of Measurement - Measurement scales |

| + | |||

| + | https://www.statisticssolutions.com/data-levels-and-measurement/ | ||

| + | |||

| + | |||

| + | There are four Levels of Measurement in research and statistics: Nominal, Ordinal, Interval, and Ratio. | ||

| + | |||

| + | |||

| + | In Practice: | ||

| + | * Most schemes accommodate just two levels of measurement: nominal and ordinal | ||

| + | * There is one special case: dichotomy (otherwise known as a "boolean" attribute) | ||

| + | |||

| + | |||

| + | {| class="wikitable" | ||

| + | ! rowspan="2" | | ||

| + | ! rowspan="2" | | ||

| + | ! rowspan="2" style="width:80px; background-color:#E6B0AA" |Values have meaningful order | ||

| + | ! rowspan="2" style="width:80px; background-color:#A9DFBF" |Distance between values is defined | ||

| + | ! colspan="3" style="width:80px; background-color:#FDEBD0" |'''Mathematical operations make sense''' | ||

| + | (Values can be used to perform '''mathematical operations)''' | ||

| + | ! rowspan="2" style="width:80px; background-color:#AED6F1" |There is a meaningful zero-point | ||

| + | ! colspan="5" style="width:80px; background-color:#D7BDE2" |Values can be used to perform statistical computations | ||

| + | ! rowspan="2" |Example | ||

| + | |- | ||

| + | ! style="width:80px; background-color:#FDEBD0" | '''Comparison operators''' | ||

| + | ! style="width:80px; background-color:#FDEBD0" | Addition and subtraction | ||

| + | ! style="width:80px; background-color:#FDEBD0" | Multiplication and division | ||

| + | ! style="width:80px; background-color:#D7BDE2" | "Counts", aka, "Frequency of Distribution" | ||

| + | ! style="width:80px; background-color:#D7BDE2" | Mode | ||

| + | ! style="width:80px; background-color:#D7BDE2" | Median | ||

| + | ! style="width:80px; background-color:#D7BDE2" | Mean | ||

| + | ! style="width:80px; background-color:#D7BDE2" | Std | ||

| + | |- | ||

| + | !'''Nominal''' | ||

| + | |Values serve only as labels. Also called "categorical", "enumerated", or "discrete". However, "enumerated" and "discrete" imply order | ||

| + | | colspan="11" style="margin: 0; padding: 0;" | | ||

| + | {| class="mw-collapsible mw-collapsed wikitable" style="margin: 0; padding: 0;" | ||

| + | |- style="vertical-align:middle;" | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px; vertical-align:top; padding-top:70px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | |- style="vertical-align:top;" | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | Values don't have any meaningful order | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | No distance between values is defined | ||

| + | | colspan="3" style="height:100px; text-align:left; width:80px;" | | ||

| + | Values don't carry any mathematical meaning | ||

| + | | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | colspan="3" style="height:100px; text-align:left; width:80px;" | | ||

| + | Values cannot be used to perform many statistical computations, such as mean and standard deviation | ||

| + | |- | ||

| + | | colspan="11" |Even if the values are numbers. For example, if we want to categorize males and females, we could use a number of 1 for male, and 2 for female. However, the values of 1 and 2 in this case don't have any meaningful order or carry any mathematical meaning. They are simply used as labels. <nowiki>https://www.statisticssolutions.com/data-levels-and-measurement/</nowiki> | ||

| + | |} | ||

| + | |For an '''«outlook»''' attribute from weather data, potential values could be "sunny", "overcast", and "rainy". | ||

| + | |- | ||

| + | !'''Ordinal''' | ||

| + | |Ordinal attributes are called "numeric", or "continuous", however "continuous" implies mathematical continuity | ||

| + | | colspan="11" style="margin: 0; padding: 0;" | | ||

| + | {| class="mw-collapsible mw-collapsed wikitable" style="margin: 0; padding: 0;" | ||

| + | |- style="vertical-align:middle; margin: 0; padding: 0;" | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px; vertical-align:top; padding-top:70px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | |- style="vertical-align:top;" | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | Values have a meaningful order | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | No distance between values is defined | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | Only comparison operators make sense | ||

| + | | colspan="2" |Mathematical operations such as addition, subtraction, multiplication, etc. do not make sense | ||

| + | | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | | colspan="11" |For example, an '''«Education level»''' attribute with possible values of '''«high school»''', '''«undergraduate degree»''', and '''«graduate degree»'''. There is a definitive order to the categories (i.e., graduate is higher than undergraduate, and undergraduate is higher than high school), but we cannot make any other arithmetic assumption. For instance, we cannot assume that the difference in education level between undergraduate and high school is the same as the difference between graduate and undergraduate. | ||

| + | |||

| + | Distinction between nominal and ordinal not always clear (e.g., attribute "outlook") | ||

| + | |} | ||

| + | |A '''«temperature»''' attribute in weather data with potential values of: "hot" > "warm" > "cool" | ||

| + | |- | ||

| + | !'''Interval''' | ||

| + | | | ||

| + | | colspan="11" style="margin: 0; padding: 0;" | | ||

| + | {| class="mw-collapsible mw-collapsed wikitable" style="margin: 0; padding: 0;" | ||

| + | |- style="vertical-align:middle;" | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;"><div style="text-align: center;"><span style="color: grey; font-size: 15pt; text-align: center;">✘</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px; vertical-align:top; padding-top:60px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | |- style="vertical-align:top;" | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | Distance between values is defined. In other words, we can quantify the difference between values | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | Comparison operators make sense | ||

| + | |Addition, subtraction, make sense | ||

| + | |Multiplication, and division do not make sense | ||

| + | |Interval variables often do not have a meaningful zero-point. | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | | ||

| + | |(not sure) | ||

| + | |- | ||

| + | | colspan="11" |An example of an interval variable would be a '''«Temperature»''' attribute. We can correctly assume that the difference between 70 and 80 degrees is the same as the difference between 80 and 90 degrees. However, the mathematical operations of multiplication and division do not apply to interval variables. For instance, we cannot accurately say that 100 degrees is twice as hot as 50 degrees. Additionally, interval variables often do not have a meaningful zero-point. For example, a temperature of zero degrees (on Celsius and Fahrenheit scales) does not mean a complete absence of heat. | ||

| + | |||

| + | |||

| + | An interval variable can be used to compute commonly used statistical measures such as the average (mean), standard deviation, and the Pearson correlation coefficient. <nowiki>https://www.statisticssolutions.com/data-levels-and-measurement/</nowiki> | ||

| + | |} | ||

| + | |A '''«Temperature»''' attribute composed of numeric measurements of that property | ||

| + | |- | ||

| + | !'''Ratio''' | ||

| + | | | ||

| + | | colspan="11" style="margin: 0; padding: 0;" | | ||

| + | {| class="mw-collapsible mw-collapsed wikitable" style="margin: 0; padding: 0;" | ||

| + | |- style="vertical-align:middle;" | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | | style="height:100px; text-align:center; width:80px; vertical-align:top; padding-top:60px;" |<div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;"><div style="text-align: center;"><span style="color: blue; font-size: 20pt; text-align: center;">✔</span></div></span></div> | ||

| + | |- style="vertical-align:top;" | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | colspan="3" style="height:100px; text-align:left; width:80px;" | | ||

| + | All arithmetic operations are possible on a ratio variable | ||

| + | |Ratio variables have a meaningful zero-point | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | style="height:100px; text-align:left; width:80px;" | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | | colspan="11" |An example of a ratio variable would be weight (e.g., in pounds). We can accurately say that 20 pounds is twice as heavy as 10 pounds. Additionally, ratio variables have a meaningful zero-point (e.g., exactly 0 pounds means the object has no weight). | ||

| + | |||

| + | |||

| + | A ratio variable can be used as a dependent variable for most parametric statistical tests such as t-tests, F-tests, correlation, and regression. <nowiki>https://www.statisticssolutions.com/data-levels-and-measurement/</nowiki> | ||

| + | |} | ||

| + | |The '''«weight»''' (e.g., in pounds) | ||

| + | |||

| + | Other examples: gross sales and income of a company. | ||

| + | |} | ||
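The table above can be illustrated with a minimal sketch that uses only Python's standard `statistics` module. The sample values are made up for illustration, and the ranking of the ordinal labels is an assumption stated in the code; the point is only which summaries are meaningful at each level.

```python
import statistics

# Nominal: values are only labels, so counts and the mode are the
# only meaningful summaries.
outlook = ["sunny", "overcast", "rainy", "sunny", "sunny"]
outlook_mode = statistics.mode(outlook)

# Ordinal: values have an order but no defined distance, so the median
# makes sense once the order is stated explicitly; a mean would not.
order = ["cool", "warm", "hot"]  # assumed ranking, lowest to highest
temperature_label = ["hot", "cool", "warm", "warm", "hot"]
ranks = sorted(order.index(v) for v in temperature_label)
ordinal_median = order[ranks[len(ranks) // 2]]

# Interval: differences are meaningful, so mean and standard deviation
# work, but ratios such as "twice as hot" do not.
temperature_f = [70.0, 80.0, 90.0]
interval_mean = statistics.mean(temperature_f)
interval_std = statistics.stdev(temperature_f)

# Ratio: a meaningful zero-point makes ratios meaningful as well.
weight_lb = [10.0, 20.0]
weight_ratio = weight_lb[1] / weight_lb[0]  # 20 lb is twice 10 lb
```

Nothing in the language stops us from computing a mean of the numeric codes of a nominal attribute; the level of measurement is what tells us the result would be meaningless.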

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ===What is an example=== | ||

| + | [[File:Observations-data_sciences.png|250px|thumb|right|Taken from https://www.youtube.com/watch?v=XAdTLtvrkFM]] | ||

| + | |||

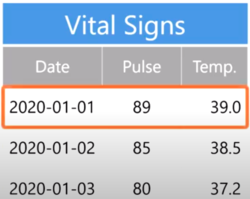

| + | An example, also known in statistics as '''an observation''', is an instance of the phenomenon that we are studying. An observation is characterized by one or a set of attributes (variables). | ||

| + | |||

| + | |||

| + | In data science, we record observations on the rows of a table. | ||

| + | |||

| + | |||

| + | For example, imagine that we are recording the vital signs of a patient. For each observation we would record the «date of the observation», the «patient's heart rate», and the «temperature». | ||
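As a rough sketch, one observation from the vital-signs example could be modelled as a record with one field per attribute. The class name, field names, units, and values below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VitalSigns:
    """One observation: a single reading of a patient's vital signs."""
    observed_on: date      # date of the observation
    heart_rate_bpm: int    # heart rate, assumed to be in beats per minute
    temperature_c: float   # body temperature, assumed to be in Celsius


# Each element is one observation, i.e. one row of the table (made-up values).
observations = [
    VitalSigns(date(2020, 1, 1), 72, 36.8),
    VitalSigns(date(2020, 1, 2), 80, 37.4),
]
```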

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ===What is a dataset=== | ||

| + | [Noel Cosgrave slides] | ||

| + | |||

| + | * A dataset is typically a matrix of observations (in rows) and their attributes (in columns). | ||

| + | |||

| + | * It is usually stored as: | ||

| + | :* Flat file, such as comma-separated values (CSV) or tab-separated values (TSV). A flat file can be a plain text file or a binary file. | ||

| + | :* Spreadsheets | ||

| + | :* Database table | ||

| + | |||

| + | * It is by far the most common form of data used in practical data mining and predictive analytics. However, it is a restrictive form of input as it is impossible to represent relationships between observations. | ||
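The following sketch shows a small dataset stored as CSV text and read with Python's standard `csv` module. The column names and values are invented for illustration; an in-memory string stands in for a file on disk.

```python
import csv
import io

# A tiny flat file: the first line names the attributes (columns),
# every following line is one observation (row). Values are made up.
csv_text = """date,heart_rate,temperature
2020-01-01,72,36.8
2020-01-02,80,37.4
"""

reader = csv.DictReader(io.StringIO(csv_text))
rows = list(reader)             # one dict per observation
attributes = reader.fieldnames  # column names taken from the header line
```

Note that `csv` returns every value as a string, so converting each column to its proper type is a separate step.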

| + | |||

| + | |||

| + | <br /> | ||

| + | ===What is Metadata=== | ||

| + | Metadata is information about the background of the data. It can be thought of as "data about the data" and contains: [Noel Cosgrave slides] | ||

| + | |||

| + | * Description of the variables. | ||

| + | * Information about the data types for each variable in the data. | ||

| + | * Restrictions on values the variables can hold. | ||
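As an illustration, the three kinds of metadata listed above could be kept in a small dictionary and used to check incoming rows. The structure, the variable names, and the value ranges are assumptions made for this sketch, not a standard format.

```python
# Hypothetical metadata: description, data type, and value restrictions
# for each variable in the dataset.
metadata = {
    "heart_rate": {
        "description": "Heart rate in beats per minute",
        "type": int,
        "min": 0,
        "max": 300,
    },
    "temperature": {
        "description": "Body temperature in degrees Celsius",
        "type": float,
        "min": 25.0,
        "max": 45.0,
    },
}


def violations(row, metadata):
    """Return a list of messages for values that break the metadata rules."""
    problems = []
    for name, spec in metadata.items():
        value = row.get(name)
        if not isinstance(value, spec["type"]):
            problems.append(f"{name}: expected {spec['type'].__name__}")
        elif not spec["min"] <= value <= spec["max"]:
            problems.append(f"{name}: {value} is out of range")
    return problems
```

For example, `violations({"heart_rate": 500, "temperature": 36.8}, metadata)` reports one problem, because 500 is outside the assumed range for heart rate.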

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

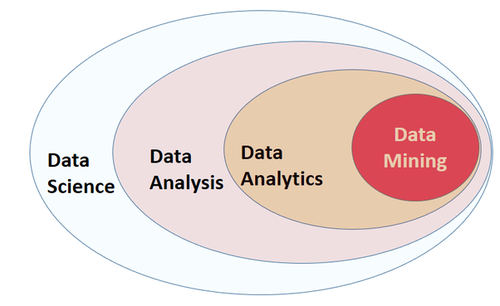

| + | ==What is Data Science== | ||

| + | There are many different terms that are related and sometimes even used as synonyms. It is actually hard to define and differentiate all these related disciplines such as: | ||

| + | |||

| + | Data Science - Data Analysis - Data Analytics - Predictive Data Analytics - Data Mining - Machine Learning - Big Data - AI and even more - Business Analytics. | ||

| + | |||

| + | https://www.loginworks.com/blogs/top-10-small-differences-between-data-analyticsdata-analysis-and-data-mining/ | ||

| + | |||

| + | https://www.quora.com/What-is-the-difference-between-Data-Analytics-Data-Analysis-Data-Mining-Data-Science-Machine-Learning-and-Big-Data-1 | ||

| + | |||

| + | |||

| + | [[File:Data_science-Data_analytics-Data_mining.png|500px|thumb|right|]] | ||

| + | |||

| + | |||

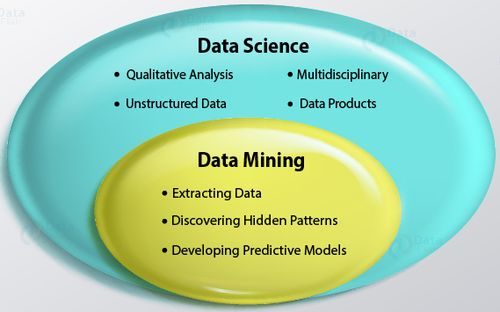

| + | [[File:Data_science-Data_mining.jpg|500px|thumb|right|]] | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | '''Data Science''' | ||

| + | <blockquote> | ||

| + | I think the broadest term is Data Science. Data Science is a very broad discipline (an umbrella term) that encompasses many subsets such as Data Analysis, Data Analytics, Data Mining, Machine Learning, Big Data (which could also be included), and several other related disciplines. | ||

| + | |||

| + | A general definition could be that Data Science is a multi-disciplinary field that uses aspects of statistics, computer science, applied mathematics, data visualization techniques, and even business analysis, with the goal of gaining new insights and new knowledge (uncovering useful information) from a vast amount of data that can help in deriving conclusions and, usually, in making business decisions. http://makemeanalyst.com/what-is-data-science/ https://www.loginworks.com/blogs/top-10-small-differences-between-data-analyticsdata-analysis-and-data-mining/ | ||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | '''Data analysis''' | ||

| + | <blockquote> | ||

| + | Data analysis is still a very broad process that includes many multi-disciplinary stages, several of which are not usually associated with Data Analytics or Data Mining. It goes from: | ||

| + | |||

| + | * Defining a Business objective | ||

| + | * Data collection (Extracting the data) | ||

| + | * Data Storage | ||

| + | * Data Integration: Multiple data sources are combined. http://troindia.in/journal/ijcesr/vol3iss3/36-40.pdf | ||

| + | * Data Transformation: The data is transformed or consolidated into forms that are appropriate or valid for mining by performing various aggregation operations. http://troindia.in/journal/ijcesr/vol3iss3/36-40.pdf | ||

| + | |||

| + | * Data cleansing, Data modeling, Data mining, and Data visualizing, with the goal of uncovering useful information that can help in deriving conclusions and usually in taking business decisions. [EDUCBA] https://www.loginworks.com/blogs/top-10-small-differences-between-data-analyticsdata-analysis-and-data-mining/ | ||

| + | :* This is the stage where we could use Data mining and ML techniques. | ||

| + | |||

| + | * Optimisation: Making the results more precise or accurate over time. | ||

| + | </blockquote> | ||
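A few of the stages listed above (integration, cleansing, and transformation by aggregation) can be sketched in plain Python. The two sources, their field names, and the values are hypothetical; a real pipeline would read from files or databases.

```python
# Two hypothetical data sources with the same structure (made-up values).
store_a = [
    {"product": "tea", "sales": 10.0},
    {"product": "coffee", "sales": None},  # a missing value
]
store_b = [
    {"product": "tea", "sales": 5.0},
    {"product": "coffee", "sales": 7.5},
]

# Data integration: multiple data sources are combined.
combined = store_a + store_b

# Data cleansing: here, simply drop records with a missing sales figure.
clean = [record for record in combined if record["sales"] is not None]

# Data transformation: aggregate sales per product.
totals = {}
for record in clean:
    totals[record["product"]] = totals.get(record["product"], 0.0) + record["sales"]
```

Dropping incomplete records is only one possible cleansing choice; it is used here because it keeps the sketch short.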

| + | |||

| + | |||

| + | <br /> | ||

| + | '''Data Mining''' (Data Analytics - Predictive Data Analytics) (So far, I think these terms are practically the same) | ||

| + | <blockquote> | ||

| + | |||

| + | https://www.loginworks.com/blogs/top-10-small-differences-between-data-analyticsdata-analysis-and-data-mining/ | ||

| + | |||

| + | We can say that Data Mining is a Data Analysis subset. It's the process of (1) Discovering hidden patterns in data and (2) Developing predictive models, by using statistics, learning algorithms, and data visualization techniques. | ||

| + | |||

| + | |||

| + | For common methods in data mining, see the Styles of Learning - Types of Machine Learning section. | ||

| + | |||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | <br /> | ||

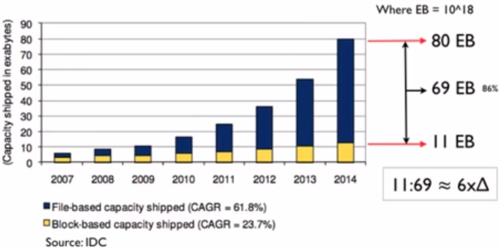

| + | '''Big Data''' | ||

| + | <blockquote> | ||

| + | Big data describes a massive amount of data that has the potential to be mined for information but is too large to be processed and analyzed using traditional data tools. | ||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | '''Machine Learning''' | ||

| + | <blockquote> | ||

| + | While trying to find a definition for Machine Learning, I realized that many experts agree that there is no standard definition of ML. | ||

| + | |||

| + | This post explains the definition of ML well: https://machinelearningmastery.com/what-is-machine-learning/ | ||

| + | |||

| + | These videos are also excellent for understanding what ML is: | ||

| + | |||

| + | :https://www.youtube.com/watch?v=f_uwKZIAeM0 | ||

| + | :https://www.youtube.com/watch?v=ukzFI9rgwfU | ||

| + | :https://www.youtube.com/watch?v=WXHM_i-fgGo | ||

| + | :https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v | ||

| + | |||

| + | |||

| + | One of the most cited definitions is Tom Mitchell's. In his book Machine Learning, he provides a definition in the opening line of the preface: | ||

| + | |||

| + | <blockquote> | ||

| + | {| style="color: black; background-color: white; width: 100%; padding: 0px 0px 0px 0px; border:1px solid #ddddff;" | ||

| + | | style="width: 20%; height=10px; background-color: #D8BFD8; padding: 0px 5px 0px 10px; border:1px solid #ddddff; vertical-align:center; moz-border-radius: 0px; webkit-border-radius: 0px; border-radius:0px;" | | ||

| + | <!--==============================================================================--> | ||

| + | <span style="color:#0000FF"> | ||

| + | '''''Tom Mitchell''''' | ||

| + | </span> | ||

| + | <!--==============================================================================--> | ||

| + | |- | ||

| + | | style="width: 20%; background-color: #2F4F4F; padding: 5px 5px 5px 10px; border:1px solid #ddddff; vertical-align:top;" | | ||

| + | <!--==============================================================================--> | ||

| + | <span style="color:#FFFFFF"> | ||

| + | '''The field of machine learning is concerned with the question of how to construct computer programs that automatically improve with experience.''' | ||

| + | </span> | ||

| + | <!--==============================================================================--> | ||

| + | |} | ||

| + | |||

| + | '''So, in short we can say that ML is about writing''' <span style="background:#D8BFD8">'''computer programs that improve themselves'''</span>. | ||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | Tom Mitchell also provides a more complex and formal definition: | ||

| + | |||

| + | <blockquote> | ||

| + | {| style="color: black; background-color: white; width: 100%; padding: 0px 0px 0px 0px; border:1px solid #ddddff;" | ||

| + | | style="width: 20%; height=10px; background-color: #D8BFD8; padding: 0px 5px 0px 10px; border:1px solid #ddddff; vertical-align:center; moz-border-radius: 0px; webkit-border-radius: 0px; border-radius:0px;" | | ||

| + | <!--==============================================================================--> | ||

| + | <span style="color:#0000FF"> | ||

| + | '''''Tom Mitchell''''' | ||

| + | </span> | ||

| + | <!--==============================================================================--> | ||

| + | |- | ||

| + | | style="width: 20%; background-color: #2F4F4F; padding: 5px 5px 5px 10px; border:1px solid #ddddff; vertical-align:top;" | | ||

| + | <!--==============================================================================--> | ||

| + | <span style="color:#FFFFFF"> | ||

| + | '''A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.''' | ||

| + | </span> | ||

| + | <!--==============================================================================--> | ||

| + | |} | ||

| + | |||

| + | Don't let the definition of terms scare you off; this is a very useful formalism. It can be used as a design tool to help us think clearly about: | ||

| + | |||

| + | :'''E:''' What data to collect. | ||

| + | :'''T:''' What decisions the software needs to make. | ||

| + | :'''P:''' How we will evaluate its results. | ||

| + | |||

| + | Suppose your email program watches which emails you do or do not mark as spam, and based on that learns how to better filter spam. In this case: https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v | ||

| + | |||

| + | :'''E:''' Watching you label emails as spam or not spam. | ||

| + | :'''T:''' Classifying emails as spam or not spam. | ||

| + | :'''P:''' The number (or fraction) of emails correctly classified as spam/not spam. | ||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | ''Machine Learning'' and ''Data Mining'' are overlapping terms. This is logical because both use the same techniques (well, many of the techniques used in Data Mining are also used in ML), namely Supervised and Unsupervised Learning algorithms (also called Supervised ML and Unsupervised ML algorithms). | ||

| + | |||

| + | The difference is that in ML we want to construct computer programs that automatically improve with experience (computer programs that improve themselves). | ||

| + | |||

| + | We can, for instance, use a Supervised learning algorithm (Naive Bayes, for example) to build a model that classifies emails as spam or not spam. We use labeled training data to build the classifier and then use it to classify unlabeled data. | ||

| + | |||

| + | So far, even if this classifier is usually called an ML classifier, it is NOT strictly an ML program. It is just a Data Mining or Predictive data analytics task, because the classifier is not automatically improving itself with experience. | ||

| + | |||

| + | Now, if we use this classifier to develop a program that automatically gathers and adds more training data to rebuild the classifier, and updates the classifier when its performance improves, this would be a strict ML program, because the program is automatically gathering new training data and updating the model so that it automatically improves its performance. | ||
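The self-updating program described above can be sketched as follows. This is only a minimal illustration, not a real spam filter: the word-set "model", the function names (<code>train</code>, <code>predict</code>, <code>accuracy</code>, <code>update_if_better</code>) and the example messages are all assumptions made for this sketch.

```python
from collections import Counter


def train(examples):
    """Build a toy model: the set of words seen more often in spam than in non-spam."""
    spam_counts, ham_counts = Counter(), Counter()
    for text, is_spam in examples:
        target = spam_counts if is_spam else ham_counts
        target.update(text.lower().split())
    return {word for word, count in spam_counts.items() if count > ham_counts[word]}


def predict(model, text):
    """Task T: classify a message as spam (True) if it contains any learned spam word."""
    return any(word in model for word in text.lower().split())


def accuracy(model, examples):
    """Performance P: fraction of messages classified correctly."""
    correct = sum(predict(model, text) == is_spam for text, is_spam in examples)
    return correct / len(examples)


def update_if_better(model, training_data, new_examples, validation_data):
    """Experience E: retrain with new labels; keep the new model only if P improves."""
    candidate_data = training_data + new_examples
    candidate = train(candidate_data)
    if accuracy(candidate, validation_data) > accuracy(model, validation_data):
        return candidate, candidate_data
    return model, training_data


# Hypothetical data, invented for this sketch.
training_data = [("win money now", True), ("meeting at noon", False)]
validation_data = [
    ("free prize inside", True),
    ("lunch at noon", False),
    ("win a prize", True),
]

model = train(training_data)
before = accuracy(model, validation_data)

# Newly labeled messages; a real program would gather these automatically.
new_examples = [("claim your free prize", True)]
model, training_data = update_if_better(model, training_data, new_examples, validation_data)
after = accuracy(model, validation_data)

print(before, after)
```

The plain classifier corresponds to <code>train</code> and <code>predict</code> alone; it is the <code>update_if_better</code> step, run repeatedly as new labels arrive, that makes the program improve with experience.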

| + | |||

| + | |||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

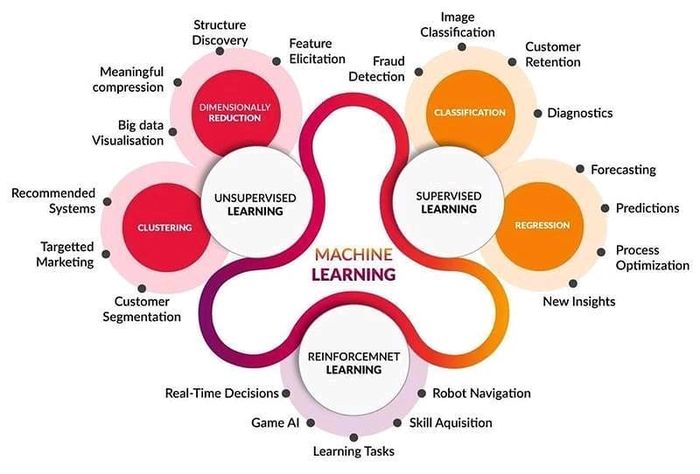

| + | ==Styles of Learning - Types of Machine Learning== | ||

| + | <br /> | ||

| + | [[File:Machine_learning_types.jpg|700px|thumb|center|]] | ||

| + | |||

| + | |||

| + | <br /> | ||

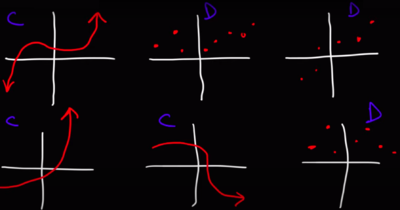

| + | ===Supervised Learning=== | ||

| + | Supervised Learning (Supervised ML): | ||

| + | |||

| + | https://en.wikipedia.org/wiki/Supervised_learning | ||

| + | https://machinelearningmastery.com/supervised-and-unsupervised-machine-learning-algorithms/ | ||

| + | |||

| + | |||

| + | Supervised learning is the process of using training data (labeled data composed of input (<math>x</math>) - output (<math>y</math>) pairs) to produce a mapping function (<math>f</math>) that maps the input variable (<math>x</math>) to the output variable (<math>y</math>). | ||

| + | |||

| + | Put more simply, in Supervised learning we have input variables (<math>x</math>) and an output variable (<math>y</math>) and we use an algorithm that is able to produce an inferred mapping function from the input to the output. | ||

| + | |||

| + | <math>y = f(x)</math> | ||

| + | |||

| + | The goal is to approximate the mapping function so well that when we have new input data (<math>x</math>), we can predict the output variable <math>y</math>. | ||
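As a minimal sketch of this idea, the code below approximates <math>f</math> from a handful of input-output pairs and then predicts <math>y</math> for a new <math>x</math>. It assumes a straight-line model fitted by least squares; the helper name <code>fit_line</code> and the training pairs are invented for illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept to the training pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x

    def f(x):
        # The inferred mapping function from input to output.
        return slope * x + intercept

    return f


# Hypothetical labeled training data: input (x) - output (y) pairs.
train_x = [1, 2, 3, 4]
train_y = [3, 5, 7, 9]

f = fit_line(train_x, train_y)
prediction = f(5)  # predict y for a new, unseen x
print(prediction)
```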

| + | |||

| + | |||

| + | The dependent variable is the variable that is to be predicted (<math>y</math>). The independent variables are the variable or variables used to predict or explain the dependent variable (<math>x</math>). | ||

| + | |||

| + | |||

| + | <span style='color:red; font-size:13pt'>It is not so easy to see and understand the conceptual mathematical difference between Regression and Classification techniques. In both methods, we determine a function from an input variable to an output variable. It is clear that regression methods predict <span style='color:blue'>continuous</span> variables (the output variable is continuous), and classification methods predict <span style='color:blue'>discrete</span> variables. Now, if we think about the conceptual mathematical difference, we must notice that '''regression is estimating the mathematical function that most closely fits the data'''. In some classification methods, it is clear that we are not estimating a mathematical function that fits the data, but just a method/algorithm/mapping_function (I'm not sure which term would be most appropriate) that allows us to map the input to the output. This is clear, for example, in K-Nearest Neighbors, where the algorithm doesn't generate a mathematical function that fits the data but only a mapping function (again, I'm not sure this is the best term) that actually relies on the data (KNN determines the class of a given unlabeled observation by identifying the k nearest labeled observations to it). So, the mapping function obtained in KNN is attached to the training data, and it is clear that KNN is not returning a mathematical function that fits the data. In Naïve Bayes, the mapping function obtained is not attached to the data. That is to say, applying the mapping function generated by NB doesn't require the training data (of course we require the training data to build the NB mapping function, but not to apply it to classify a new unlabeled observation, as is the case with KNN). 
However, we can see that the mathematical concept behind NB is not about finding a mathematical function that fits the data; it relies on a probabilistic approach (I need to analyze more carefully what I have just said here about NB). Now, when it comes to an algorithm like Decision Trees, the conceptual mathematical difference between Regression and Classification is not so clear. In DT, I think that (even if the output is a discrete variable) we are generating a mathematical function that fits the data. The method of doing so is not as clear as in, for example, Linear regression, but in the end it would be a mathematical function that fits the data. I think this is why, with simple variations in the algorithm, decision trees can also be used as a regression method.</span> | ||
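To make the KNN remark concrete, here is a minimal sketch of a k-nearest-neighbors classifier. Note that the labeled training data is an argument of the prediction function itself, which is what "attached to the training data" means in practice. The function name <code>knn_predict</code>, the squared Euclidean distance, and the sample points are assumptions made for this illustration.

```python
from collections import Counter


def knn_predict(training_data, query, k=3):
    """Classify `query` by majority vote among its k nearest labeled points."""
    by_distance = sorted(
        training_data,
        # Squared Euclidean distance between a training point and the query.
        key=lambda pair: sum((a - b) ** 2 for a, b in zip(pair[0], query)),
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical labeled observations: (features, class label).
labeled_points = [
    ((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.5), "A"),
    ((6.0, 6.0), "B"), ((6.5, 7.0), "B"), ((7.0, 6.5), "B"),
]

label_near_a = knn_predict(labeled_points, (1.2, 1.4))
label_near_b = knn_predict(labeled_points, (6.4, 6.2))
print(label_near_a, label_near_b)
```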

| + | |||

| + | |||

| + | <span style='color:blue; font-size:13pt'>In fact, regression and classification methods are so closely related that: </span> | ||

| + | |||

| + | * <span style='color:blue; font-size:13pt'>Some algorithms can be used for both classification and regression with small modifications, such as Decision trees, SVM, and Artificial neural networks.</span> https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/ | ||

| + | |||

| + | * <span style='color:blue; font-size:13pt'>A regression algorithm may predict a discrete value, but the discrete value is in the form of an integer quantity.</span> https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/ | ||

| + | |||

| + | * <span style='color:blue; font-size:13pt'>A classification algorithm may predict a continuous value, but the continuous value is in the form of a probability for a class label. </span> | ||

| + | :* Logistic Regression: Contrary to popular belief, logistic regression IS a regression model. It builds a regression model to predict the probability that a given data entry belongs to the category numbered as "1". Just as Linear regression assumes that the data follows a linear function, Logistic regression models the data using the sigmoid function. https://www.geeksforgeeks.org/understanding-logistic-regression/ | ||

| + | |||

| + | * <span style='color:blue; font-size:13pt'>There are methods for implementing Regression using classification algorithms: </span> | ||

| + | :* https://www.sciencedirect.com/science/article/abs/pii/S1088467X97000139 | ||

| + | :* https://www.dcc.fc.up.pt/~ltorgo/Papers/RegrThroughClass.pdf | ||
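The point in the list above about a classifier predicting a continuous probability can be sketched with the sigmoid function used by logistic regression. This is an illustrative sketch for a single input variable: the <code>weight</code> and <code>bias</code> values are made-up parameters (not fitted to any data), and the 0.5 threshold is a common convention rather than a requirement.

```python
import math


def sigmoid(z):
    """Map any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(x, weight, bias):
    """Continuous output: estimated probability that x belongs to class 1."""
    return sigmoid(weight * x + bias)


def predict_label(x, weight, bias, threshold=0.5):
    """Discrete output: class 1 if the probability reaches the threshold, else class 0."""
    return int(predict_probability(x, weight, bias) >= threshold)


# Hypothetical parameters, chosen only for illustration.
weight, bias = 1.0, -2.0

probability_high = predict_probability(3.0, weight, bias)
label_high = predict_label(3.0, weight, bias)
label_low = predict_label(1.0, weight, bias)
print(probability_high, label_high, label_low)
```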

| + | |||

| + | |||

| + | |||

| + | <br /> | ||

| + | <blockquote> | ||

| + | * '''Regression techniques''' (Correlation methods) | ||

| + | |||

| + | : A regression algorithm is able to approximate a mapping function (<math>f</math>) from input variables (<math>x</math>) '''to a <span style='color:red'>continuous</span> output variable''' (<math>y</math>). https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/ | ||

| + | : For example, the price of a house may be predicted using regression techniques. | ||

| + | |||

| + | |||

| + | : Perhaps we could say that regression analysis is the process of finding the mathematical function that most closely fits the data. The most common form of regression analysis is linear regression, which is the process of finding the line that most closely fits the data. | ||

| + | |||

| + | |||

| + | : The purpose of regression analysis is to: [Noel] | ||

| + | :* Predict the value of the dependent variable as a function of the value(s) of at least one independent variable. | ||

| + | :* Explain how changes in an independent variable are manifested in the dependent variable | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | :* Linear Regression | ||

| + | :* Decision Tree Regression | ||

| + | :* Support Vector Machines (SVM): It can be used for classification and regression analysis | ||

| + | :* Neural Network Regression | ||

| + | |||

| + | |||

| + | :: '''Regression algorithms are used for:''' | ||

| + | ::* Prediction of continuous variables: future prices/cost, incomes, etc. | ||

| + | ::* Housing Price Prediction: For example, a regression model could be used to predict the value of a house based on location, number of rooms, lot size, and other factors. | ||

| + | ::* Weather forecasting: for example, predicting a «temperature» attribute of weather data. | ||

| + | |||

| + | |||

| + | * '''Classification techniques''' | ||

| + | : Classification is the process of identifying to which of a set of categories a new observation belongs. https://en.wikipedia.org/wiki/Statistical_classification | ||

| + | |||

| + | : A classification algorithm is able to approximate a mapping function (<math>f</math>) from input variables (<math>x</math>) '''to a <span style='color:red'>discrete</span> output variable''' (<math>y</math>). So, the mapping function predicts the class/label/category of a given observation. https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/ | ||

| + | |||

| + | |||

| + | : For example, an email can be classified as "spam" or "not spam". | ||

| + | |||

| + | |||

| + | :* K-Nearest Neighbors | ||

| + | :* Decision Trees | ||

| + | :* Random Forest | ||

| + | :* Naive Bayes | ||

| + | :* Logistic Regression | ||

| + | :* Support Vector Machines (SVM): It can be used for classification and regression analysis | ||

| + | :* Neural Network Classification | ||

| + | :* ... | ||

| + | |||

| + | |||

| + | :: '''Classification algorithms are used for:''' | ||

| + | ::* Text/Image classification | ||

| + | ::* Medical Diagnostics | ||

| + | ::* Weather forecasting: for example, predicting an «outlook» attribute of weather data with potential values of "sunny", "overcast", and "rainy" | ||

| + | ::* Fraud Detection | ||

| + | ::* Credit Risk Analysis | ||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ===Unsupervised Learning=== | ||

| + | Unsupervised Learning (Unsupervised ML) | ||

| + | <br /> | ||

| + | <blockquote> | ||

| + | |||

| + | |||

| + | * '''Clustering''' | ||

| + | : It is the task of dividing the data into groups that contain similar data (grouping data that is similar together). | ||

| + | : For example, in a library, we can use clustering to group similar books together, so customers who are interested in a particular kind of book can see other similar books. | ||

| + | |||

| + | |||

| + | :* K-Means Clustering | ||

| + | |||

| + | :* Mean-Shift Clustering | ||

| + | |||

| + | :* Density-based spatial clustering of applications with noise (DBSCAN) | ||

| + | |||

| + | |||

| + | :: Clustering methods are used for: | ||

| + | |||

| + | ::* Recommendation Systems: Recommendation systems are designed to recommend new items to users/customers based on users' previous preferences. They use clustering algorithms to predict a user's preferences based on the preferences of other users in the user's cluster. | ||

| + | ::: For example, Netflix collects user-behavior data from its more than 100 million customers. This data helps Netflix to understand what the customers want to see. Based on the analysis, the system recommends movies (or tv-shows) that users would like to watch. This kind of analysis usually results in higher customer retention. https://www.youtube.com/watch?v=dK4aGzeBPkk | ||

| + | |||

| + | |||

| + | ::* Customer Segmentation | ||

| + | |||

| + | |||

| + | ::* Targeted Marketing | ||

| + | |||

| + | |||

| + | |||

| + | * '''Dimensionality reduction''' | ||

| + | |||

| + | |||

| + | :: Dimensionality reduction methods are used for: | ||

| + | |||

| + | ::* Big Data Visualisation | ||

| + | ::* Meaningful compression | ||

| + | ::* Structure Discovery | ||

| + | |||

| + | |||

| + | |||

| + | * '''Association Rules''' | ||

| + | |||

| + | </blockquote> | ||
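As an illustration of the clustering task described above, here is a minimal one-dimensional K-Means sketch. It assumes the number of clusters and the initial centroids are given by hand; the function name <code>k_means_1d</code> and the sample values are invented for this example, and practical implementations add random initialization and a convergence check.

```python
def k_means_1d(values, centroids, iterations=10):
    """Alternate between assigning values to the nearest centroid and recomputing centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each value in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for value in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))
            clusters[nearest].append(value)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical data with two obvious groups.
values = [1, 2, 3, 10, 11, 12]
centroids, clusters = k_means_1d(values, centroids=[1, 12])
print(centroids, clusters)
```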

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ===Reinforcement Learning=== | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ==Some real-world examples of big data analysis== | ||

| + | |||

| + | * '''Credit card real-time data:''' | ||

| + | : Credit card companies collect and store the real-time data of when and where the credit cards are being swiped. This data helps them in fraud detection. Suppose a credit card is used at location A for the first time. Then after 2 hours the same card is being used at location B which is 5000 kilometers from location A. Now it is practically impossible for a person to travel 5000 kilometers in 2 hours, and hence it becomes clear that someone is trying to fool the system. https://www.youtube.com/watch?v=dK4aGzeBPkk | ||
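The "impossible travel" check in this example can be sketched as a simple speed test. The 1000 km/h limit (roughly the cruising speed of a commercial flight) and the function names are assumptions made for this illustration; a real fraud system would combine many more signals.

```python
def implied_speed_kmh(distance_km, hours_between):
    """Speed a cardholder would need to travel between two swipes."""
    return distance_km / hours_between


def is_suspicious(distance_km, hours_between, max_speed_kmh=1000.0):
    """Flag two swipes if no plausible journey connects them in the elapsed time."""
    if hours_between <= 0:
        # Simultaneous swipes in different places cannot be the same card in hand.
        return distance_km > 0
    return implied_speed_kmh(distance_km, hours_between) > max_speed_kmh


# The case from the text: 5000 km apart, 2 hours between swipes.
speed = implied_speed_kmh(5000, 2)
flagged = is_suspicious(5000, 2)
print(speed, flagged)
```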

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ==Statistic== | ||

| + | |||

| + | * Probability vs Likelihood: https://www.youtube.com/watch?v=pYxNSUDSFH4 | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ==Descriptive Data Analysis== | ||

| + | Rather than find hidden information in the data, descriptive data analysis looks to summarize the dataset. | ||

| + | |||

| + | * Some of the measures commonly included in descriptive data analysis: | ||

| + | |||

| + | :* '''Central tendency''': Mean, Mode, Median | ||

| + | :* '''Variability''' (Measures of variation): Range, Quartile, Standard deviation, Z-Score | ||

| + | :* '''Shape of distribution''': Probabilistic distribution plot, Histogram, Skewness, Kurtosis | ||

| + | |||

| + | |||

| + | <br /> | ||

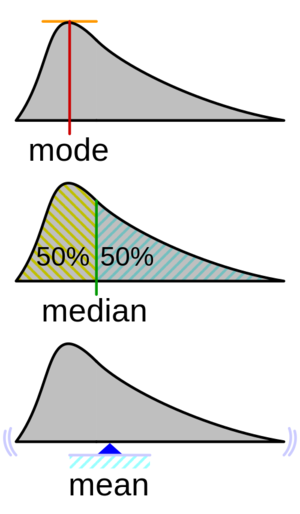

| + | ===Central tendency=== | ||

| + | https://statistics.laerd.com/statistical-guides/measures-central-tendency-mean-mode-median.php | ||

| + | |||

| + | A central tendency (or measure of central tendency) is a single value that attempts to describe a variable by identifying the central position within that data (the most typical value in the data set). | ||

| + | |||

| + | '''The mean''' (often called the average) is the most popular measure of the central tendency, but there are others, such as the '''median''' and the '''mode'''. | ||

| + | |||

| + | '''The mean, median, and mode''' are all valid measures of central tendency, but under different conditions, some measures of central tendency are more appropriate to use than others. | ||

| + | |||

| + | |||

| + | [[File:Visualisation_mode_median_mean.png|right|thumb|300pt|Geometric visualisation of the mode, median and mean of an arbitrary probability density function Taken from https://en.wikipedia.org/wiki/Probability_density_function <br /> [[:File:Visualisation_mode_median_mean.svg]] ]] | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Mean==== | ||

| + | The mean (or average) is the most popular measure of central tendency. | ||

| + | |||

| + | |||

| + | The mean is equal to the sum of all the values in the data set divided by the number of values in the data set. | ||

| + | |||

| + | |||

| + | The mean is usually denoted as <math>\mu</math> (population mean) or <math>\bar{x}</math> (pronounced x bar) (sample mean): | ||

| + | |||

| + | <math>\bar{x} = \frac{(x_1 + x_2 +...+ x_n)}{n} = \frac{\sum x}{n}</math> | ||

| + | |||

| + | |||

| + | An important property of the mean is that it includes every value in your data set as part of the calculation. In addition, the mean is the only measure of central tendency where the sum of the deviations of each value from the mean is always zero. | ||
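The zero-sum property mentioned above follows directly from the definition of the mean. Since <math>\sum_{i=1}^{n} x_i = n\bar{x}</math>:

<math>\sum_{i=1}^{n}(x_i - \bar{x}) = \sum_{i=1}^{n} x_i - n\bar{x} = n\bar{x} - n\bar{x} = 0</math>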

| + | |||

| + | |||

| + | <br /> | ||

| + | =====When not to use the mean===== | ||

| + | '''When the data has values that are unusual (too small or too big) compared to the rest of the data set (outliers) the mean is usually not a good measure of the central tendency.''' | ||

| + | |||

| + | |||

| + | For example, consider the wages of the employees in a factory: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !Staff | ||

| + | !1 | ||

| + | !2 | ||

| + | !3 | ||

| + | !4 | ||

| + | !5 | ||

| + | !6 | ||

| + | !7 | ||

| + | !8 | ||

| + | !9 | ||

| + | !10 | ||

| + | |- | ||

| + | !'''Salary''' | ||

| + | !<math>15k</math> | ||

| + | !<math>18k</math> | ||

| + | !<math>16k</math> | ||

| + | !<math>14k</math> | ||

| + | !<math>15k</math> | ||

| + | !<math>15k</math> | ||

| + | !<math>12k</math> | ||

| + | !<math>17k</math> | ||

| + | !<math>90k</math> | ||

| + | !<math>95k</math> | ||

| + | |} | ||

| + | |||

| + | |||

| + | The mean salary for these ten employees is $30.7k. However, inspecting the data we can see that this mean value might not be the best way to accurately reflect the typical salary of an employee, as most workers have salaries between $12k and $18k. The mean is being '''skewed''' by the two large salaries. As we will find out later, taking the median would be a better measure of central tendency in this situation. | ||
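The salary table can be checked with a short sketch using Python's standard <code>statistics</code> module (the variable names are chosen here for illustration). It shows how far the two large salaries pull the mean away from the median.

```python
import statistics

# Salaries from the table above, in thousands.
salaries_k = [15, 18, 16, 14, 15, 15, 12, 17, 90, 95]

mean_salary = statistics.mean(salaries_k)
median_salary = statistics.median(salaries_k)

print(mean_salary, median_salary)
```

The median stays close to what most of the workers earn, while the mean lands in a range where nobody's salary actually lies.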

| + | |||

| + | |||

| + | <br /> | ||

| + | '''Another case when we usually prefer the median over the mean (or mode) is when our data is skewed (i.e., the frequency distribution for our data is skewed).''' | ||

| + | |||

| + | '''If we consider the normal distribution - as this is the most frequently assessed in statistics - when the data is perfectly normal, the mean, median, and mode are identical'''. Moreover, they all represent the most typical value in the data set. '''However, as the data becomes skewed the mean loses its ability to provide the best central location for the data'''. Therefore, in the case of skewed data, the median is typically the best measure of the central tendency because it is not as strongly influenced by the skewed values. | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ====Median==== | ||

| + | The median is the middle score for a set of data that has been arranged in order of magnitude. The median is less affected by outliers and skewed data. In order to calculate the median, suppose we have the data below: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !65 | ||

| + | !55 | ||

| + | !89 | ||

| + | !56 | ||

| + | !35 | ||

| + | !14 | ||

| + | !56 | ||

| + | !55 | ||

| + | !87 | ||

| + | !45 | ||

| + | !92 | ||

| + | |} | ||

| + | |||

| + | |||

| + | We first need to rearrange that data in order of magnitude: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !14 | ||

| + | !35 | ||

| + | !45 | ||

| + | !55 | ||

| + | !55 | ||

| + | !<span style="color:#FF0000">56</span> | ||

| + | !56 | ||

| + | !65 | ||

| + | !87 | ||

| + | !89 | ||

| + | !92 | ||

| + | |} | ||

| + | |||

| + | |||

| + | Then, the Median is the middle score. In this case, 56. This works fine when you have an odd number of scores, but what happens when you have an even number of scores? What if you had only 10 scores? Well, you simply have to take the middle two scores and average the result. So, if we look at the example below: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !65 | ||

| + | !55 | ||

| + | !89 | ||

| + | !56 | ||

| + | !35 | ||

| + | !14 | ||

| + | !56 | ||

| + | !55 | ||

| + | !87 | ||

| + | !45 | ||

| + | |} | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !14 | ||

| + | !35 | ||

| + | !45 | ||

| + | !55 | ||

| + | !<span style="color:#FF0000">55</span> | ||

| + | !<span style="color:#FF0000">56</span> | ||

| + | !56 | ||

| + | !65 | ||

| + | !87 | ||

| + | !89 | ||

| + | |} | ||

| + | |||

| + | We can now take the 5th and 6th scores and calculate the mean. So the Median would be 55.5. | ||
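Both cases (odd and even number of scores) can be written as one small function. This is a sketch of the procedure described above, using the same two data sets; the function name <code>median</code> is chosen here for illustration.

```python
def median(scores):
    """Middle value of the sorted scores; mean of the two middle values if the count is even."""
    ordered = sorted(scores)
    n = len(ordered)
    middle = n // 2
    if n % 2 == 1:
        return ordered[middle]
    return (ordered[middle - 1] + ordered[middle]) / 2


odd_scores = [65, 55, 89, 56, 35, 14, 56, 55, 87, 45, 92]
even_scores = [65, 55, 89, 56, 35, 14, 56, 55, 87, 45]

median_odd = median(odd_scores)
median_even = median(even_scores)
print(median_odd, median_even)
```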

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

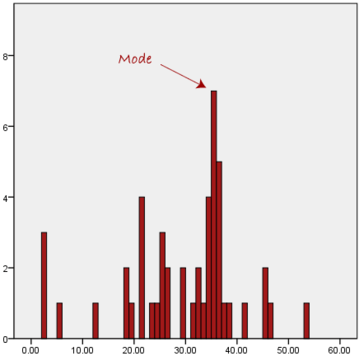

| + | ====Mode==== | ||

| + | The mode is the most frequent score in our data set. | ||

| + | |||

| + | On a histogram, it represents the highest bar. For continuous variables, we usually define a bin size, so every bar in the histogram represents a range of values that depends on the bin size. | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | [[File:Mode-1.png|center|thumb|359x359px]] | ||

| + | |||

| + | |||

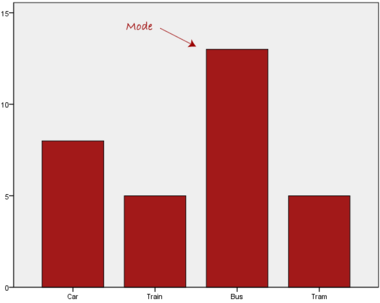

| + | Normally, the mode is used for categorical data where we wish to know which is the most common category, as illustrated below: | ||

| + | [[File:Mode-1a.png|center|thumb|380x380px]] | ||

| + | |||

| + | |||

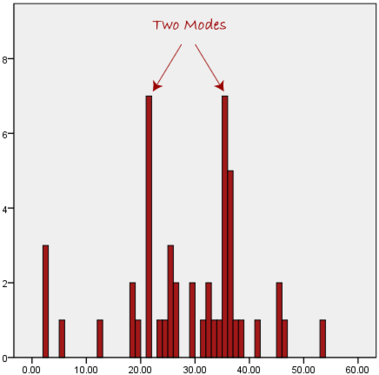

| + | We can see above that the most common form of transport, in this particular data set, is the bus. However, one of the problems with the mode is that it is not unique, so it leaves us with problems when we have two or more values that share the highest frequency, such as below: | ||

| + | <br /> | ||

| + | [[File:Mode-2.png|center|thumb|379x379px]] | ||

| + | |||

| + | |||

| + | We are now stuck as to which mode best describes the central tendency of the data. This is particularly problematic when we have continuous data because we are more likely not to have any one value that is more frequent than the others. For example, consider measuring 30 people's weights (to the nearest 0.1 kg). How likely is it that we will find two or more people with '''exactly''' the same weight (e.g., 67.4 kg)? The answer is that it is probably very unlikely: many people might be close, but with such a small sample (30 people) and a large range of possible weights, you are unlikely to find two people with exactly the same weight, that is, to the nearest 0.1 kg. This is why the mode is very rarely used with continuous data. | ||
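Both problems can be seen with <code>statistics.multimode</code> from Python's standard library (available since Python 3.8), which returns every value that shares the highest frequency. The transport answers and weights below are made-up sample data, not the data behind the figures.

```python
import statistics

# Hypothetical categorical data: two categories tie for the highest frequency.
transport = ["bus", "car", "bus", "train", "car", "walk"]
transport_modes = statistics.multimode(transport)

# Hypothetical continuous data: no weight repeats, so every value is a "mode".
weights_kg = [67.4, 71.2, 58.9, 80.1, 64.3]
weight_modes = statistics.multimode(weights_kg)

print(transport_modes, weight_modes)
```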

| + | |||

| + | |||

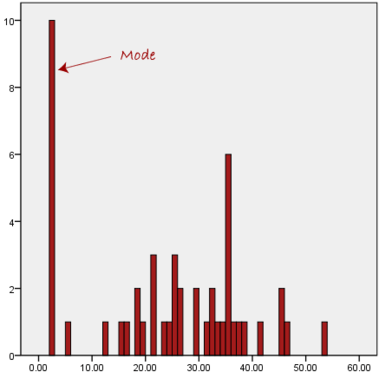

| + | Another problem with the mode is that it will not provide us with a very good measure of central tendency when the most common mark is far away from the rest of the data in the data set, as depicted in the diagram below: | ||

| + | <br /> | ||

| + | [[File:Mode-3.png|center|thumb|379px]] | ||

| + | |||

| + | |||

| + | In the above diagram the mode has a value of 2. We can clearly see, however, that the mode is not representative of the data, which is mostly concentrated around the 20 to 30 value range. To use the mode to describe the central tendency of this data set would be misleading. | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

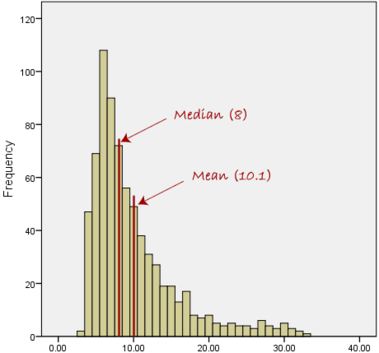

| + | ====Skewed Distributions and the Mean and Median==== | ||

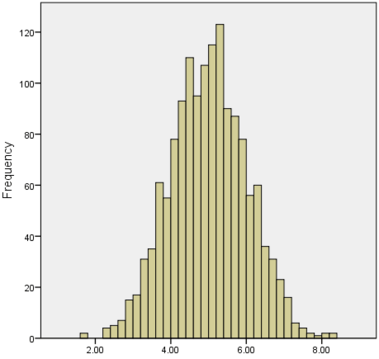

| + | We often test whether our data is normally distributed because this is a common assumption underlying many statistical tests. An example of a normally distributed set of data is presented below: | ||

| + | <br /> | ||

| + | [[File:Skewed-1.png|center|thumb|379px]] | ||

| + | |||

| + | When you have a normally distributed sample you can legitimately use either the mean or the median as your measure of central tendency. In fact, in any symmetrical, unimodal distribution the mean, median and mode are equal. However, in this situation, the mean is widely preferred as the best measure of central tendency because it is the measure that includes all the values in the data set for its calculation, and any change in any of the scores will affect the value of the mean. This is not the case with the median or mode. | ||

| + | |||

| + | However, when our data is skewed, for example, as with the right-skewed data set below: | ||

| + | <br /> | ||

| + | [[File:Skewed-2.png|center|thumb|379px]] | ||

| + | |||

| + | we find that the mean is being dragged in the direction of the skew. In these situations, the median is generally considered to be the best representative of the central location of the data. The more skewed the distribution, the greater the difference between the median and mean, and the greater emphasis should be placed on using the median as opposed to the mean. A classic example of the above right-skewed distribution is income (salary), where higher earners provide a false representation of the typical income if expressed as a mean and not a median. | ||

| + | |||

| + | If a normal distribution is expected but tests of normality show that the data is non-normal, it is customary to use the median instead of the mean. However, this is more a rule of thumb than a strict guideline. Sometimes, researchers wish to report the mean of a skewed distribution if the median and mean are not appreciably different (a subjective assessment), and if it allows easier comparisons to previous research to be made. | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Summary of when to use the mean, median and mode==== | ||

| + | Please use the following summary table to know what the best measure of central tendency is with respect to the different types of variable: | ||

| + | {| class="wikitable" | ||

| + | !'''Type of Variable''' | ||

| + | !'''Best measure of central tendency''' | ||

| + | |- | ||

| + | |Nominal | ||

| + | |Mode | ||

| + | |- | ||

| + | |Ordinal | ||

| + | |Median | ||

| + | |- | ||

| + | |Interval/Ratio (not skewed) | ||

| + | |Mean | ||

| + | |- | ||

| + | |Interval/Ratio (skewed) | ||

| + | |Median | ||

| + | |} | ||

| + | |||

| + | For answers to frequently asked questions about measures of central tendency, please go to: https://statistics.laerd.com/statistical-guides/measures-central-tendency-mean-mode-median-faqs.php | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ===Measures of Variation=== | ||

| + | <span style="background: yellow">Variation (or variability) is a measure of the '''spread of the data (of a variable)''', that is, of '''how widely the values are distributed around the mean (the deviation of a variable from its mean)'''.</span> | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Range==== | ||

| + | The range is the difference between the maximum and minimum values of a variable; it is often reported simply as the pair of min and max values. | ||

| + | |||

| + | |||

| + | Range can be used on '''''Ordinal, Ratio and Interval''''' scales | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Quartile==== | ||

| + | https://statistics.laerd.com/statistical-guides/measures-of-spread-range-quartiles.php | ||

| + | |||

| + | |||

| + | Quartiles are a measure of the spread of a data set. To calculate them we follow the same logic as for the Median. Remember that when calculating the Median, we first sort the data from the lowest to the highest value, so the Median is the value in the middle of the sorted data. For the quartiles, we also sort the data from the lowest to the highest value, but we break the data set into quarters and take 3 values to describe the data: the value below which 25% of the data lies, the value below which 50% lies (which is the Median), and the value below which 75% lies. | ||

| + | |||

| + | |||

| + | A first example: | ||

| + | [2 3 9 1 9 3 5 2 5 11 3] | ||

| + | |||

| + | Sorting the data from the lowest to the highest value: | ||

| + | [1 2 "2" 3 3 "3" 5 5 "9" 9 11] | ||

| + | The quoted values mark the 25% (2), 50% (3), and 75% (9) positions. | ||

| + | |||

| + | The Quartile is '''[2 3 9]''' | ||
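The first example can be checked with <code>statistics.quantiles</code> from Python's standard library (Python 3.8+). One caveat: there are several conventions for computing quartiles, and they can give slightly different values on small data sets. The sketch below uses the module's <code>"exclusive"</code> method, which happens to match the positions marked above; other tools may use a different convention by default.

```python
import statistics

data = [2, 3, 9, 1, 9, 3, 5, 2, 5, 11, 3]

# n=4 splits the sorted data into four parts and returns the three cut points.
q1, q2, q3 = statistics.quantiles(data, n=4, method="exclusive")

print(q1, q2, q3)
```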

| + | |||

| + | |||

| + | <br /> | ||

| + | Another example. Consider the marks of 100 students who have been ordered from the lowest to the highest scores. | ||

| + | |||

| + | |||

| + | *'''The first quartile (Q1):''' Lies between the 25th and 26th student's marks. | ||

| + | **So, if the 25th and 26th student's marks are 45 and 45, respectively: | ||

| + | ***(Q1) = (45 + 45) ÷ 2 = 45 | ||

| + | *'''The second quartile (Q2):''' Lies between the 50th and 51st student's marks. | ||

| + | **If the 50th and 51st student's marks are 58 and 59, respectively: | ||

| + | ***(Q2) = (58 + 59) ÷ 2 = 58.5 | ||

| + | *'''The third quartile (Q3):''' Lies between the 75th and 76th student's marks. | ||

| + | **If the 75th and 76th student's marks are 71 and 71, respectively: | ||

| + | ***(Q3) = (71 + 71) ÷ 2 = 71 | ||

| + | |||

| + | |||

| + | In the above example, we have an even number of scores (100 students, rather than an odd number, such as 99 students). This means that when we calculate the quartiles, we take the sum of the two scores around each quartile and then halve it (hence Q1 = (45 + 45) ÷ 2 = 45). However, if we had an odd number of scores (say, 99 students), we would only need to take one score for each quartile (that is, the 25th, 50th and 75th scores). You should recognize that the second quartile is also the median. | ||

| + | |||

| + | |||

| + | Quartiles are a useful measure of spread because they are much less affected by outliers or a skewed data set than the equivalent measures of mean and standard deviation. For this reason, quartiles are often reported along with the median as the best choice of measure of spread and central tendency, respectively, when dealing with skewed data and/or data with outliers. A common way of expressing quartiles is as an interquartile range. The interquartile range describes the difference between the third quartile (Q3) and the first quartile (Q1), telling us about the range of the middle half of the scores in the distribution. Hence, for our 100 students: | ||

| + | |||

| + | |||

| + | <math>Interquartile\ range = Q3 - Q1 = 71 - 45 = 26</math> | ||

| + | |||

| + | |||

| + | However, it should be noted that in journals and other publications you will usually see the interquartile range reported as 45 to 71, rather than the calculated '''<math>Interquartile\ range</math>'''. | ||

| + | |||

| + | A slight variation on this is the <math>semi{\text{-}}interquartile\ range</math>, which is half the <math>Interquartile\ range</math>. Hence, for our 100 students: | ||

| + | |||

| + | |||

| + | <math>Semi{\text{-}}Interquartile\ range = \frac{Q3 - Q1}{2} = \frac{71 - 45}{2} = 13</math> | ||
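The averaging rule described above can be sketched in Python. This is only an illustration: the helper name <code>quartiles_even</code> and the marks 1 to 100 are hypothetical, and the function assumes the number of scores is a multiple of 4, as in the 100-student example. Statistical libraries often use other interpolation rules, so their quartiles may differ slightly.

```python
import statistics


def quartiles_even(scores):
    """Quartiles by averaging the two scores around each cut point.

    Hypothetical helper: assumes len(scores) is a non-zero multiple of 4.
    """
    s = sorted(scores)
    n = len(s)
    if n == 0 or n % 4 != 0:
        raise ValueError("this sketch needs a non-empty multiple of 4 scores")

    def between(k):
        # Mean of the k-th and (k+1)-th scores, counting from 1.
        return (s[k - 1] + s[k]) / 2

    return between(n // 4), between(n // 2), between(3 * n // 4)


scores = list(range(1, 101))  # hypothetical marks, not the data from the text
q1, q2, q3 = quartiles_even(scores)
iqr = q3 - q1            # interquartile range
semi_iqr = iqr / 2       # semi-interquartile range

print(q1, q2, q3, iqr, semi_iqr)  # 25.5 50.5 75.5 50.0 25.0
print(q2 == statistics.median(scores))  # the second quartile is the median
```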

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ====Box Plots==== | ||

| + | |||

| + | boxplot(iris$Sepal.Length, | ||

| + | col = "blue", | ||

| + | main="iris dataset", | ||

| + | ylab = "Sepal Length") | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Variance==== | ||

| + | https://statistics.laerd.com/statistical-guides/measures-of-spread-absolute-deviation-variance.php | ||

| + | |||

| + | The variance measures how far the values of a variable are spread around the mean: it is the average of the squared deviations from the mean. | ||

| + | |||

| + | |||

| + | The deviation of a value is: | ||

| + | |||

| + | <math>Deviation = x_{i} - \mu</math> | ||

| + | |||

| + | The variance is the mean of the squared deviations: | ||

| + | <math>Var = \sigma^{2} = \frac{\sum_{i=1}^{N}(x_{i} - \mu)^2}{N}</math> | ||

| + | |||

| + | <math>\mu: \text{Population mean};\ \ \ x_{i}: \text{Score};\ \ \ N: \text{Number of scores in the population}</math> | ||

| + | |||

| + | <br /> | ||

| + | * Unlike the '''Absolute deviation''', which uses the absolute value of the deviation in order to "rid itself" of the negative values, the variance achieves positive values by squaring the deviation of each value. | ||
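As a minimal sketch of the population variance formula, the following Python snippet computes the deviations, the mean absolute deviation and the variance step by step. The list <code>scores</code> is hypothetical illustration data and the variable names are assumptions, not part of the original notes.

```python
import math
import statistics

scores = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical scores
n = len(scores)

mu = statistics.fmean(scores)              # population mean
deviations = [x - mu for x in scores]      # x_i - mu, can be negative

# Absolute deviation removes the sign with abs(); variance squares instead.
mean_abs_dev = sum(abs(d) for d in deviations) / n
population_variance = sum(d ** 2 for d in deviations) / n

print(mu, mean_abs_dev, population_variance)  # 5.0 1.5 4.0

# The standard library gives the same population variance.
print(math.isclose(population_variance, statistics.pvariance(scores)))
```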

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ====Standard Deviation==== | ||

| + | https://statistics.laerd.com/statistical-guides/measures-of-spread-standard-deviation.php | ||

| + | |||

| + | The Standard Deviation is the square root of the variance. This measure is the most widely used to express deviation from the mean in a variable. | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | : '''Population standard deviation''' (<math>\sigma</math>) | ||

| + | <blockquote> | ||

| + | <math>\sigma = \sqrt{\frac{\sum_{i=1}^{N}(x_{i} - \mu)^2}{N}}</math> | ||

| + | |||

| + | <math>\mu: \text{population mean};\ \ \ N: \text{Number of scores in the population}</math> | ||

| + | </blockquote> | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | : '''Sample standard deviation formula''' (<math>s</math>) | ||

| + | <blockquote> | ||

| + | Sometimes our data is only a sample of the whole population. In this case, we can still estimate the Standard deviation; but when we use a sample as an estimate of the whole population, the Standard deviation formula changes to this: | ||

| + | |||

| + | <math>s = \sqrt{\frac{\sum_{i=1}^{n}(x_{i} - \bar{x})^2}{n -1}}</math> | ||

| + | |||

| + | |||

| + | <math>\bar{x}: \text{Sample mean};\ \ \ n: \text{Number of scores in the sample}</math> | ||

| + | </blockquote> | ||

| + | |||

| + | See Bessel's correction: https://en.wikipedia.org/wiki/Bessel%27s_correction | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | * A value of zero means that there is no variability: all the numbers in the data set are the same. | ||

| + | |||

| + | * A higher standard deviation indicates more widely distributed values around the mean. | ||

| + | |||

| + | * Assuming the frequency distribution is approximately normal, about <math>68\%</math> of all observations lie between <math>-1</math> and <math>+1</math> standard deviations from the mean. | ||

| + | |||
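The difference between the population and the sample formulas can be sketched as follows. The data are hypothetical and the snippet uses only the Python standard library; the only change between the two results is the divisor (<math>N</math> versus <math>n - 1</math>).

```python
import math
import statistics

scores = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical scores
n = len(scores)
mean = statistics.fmean(scores)
sum_sq = sum((x - mean) ** 2 for x in scores)  # sum of squared deviations

population_sd = math.sqrt(sum_sq / n)        # divide by N
sample_sd = math.sqrt(sum_sq / (n - 1))      # divide by n - 1 (Bessel's correction)

print(population_sd)               # 2.0
print(sample_sd > population_sd)   # True: the sample estimate is slightly larger

# Cross-check against the standard library implementations.
print(math.isclose(population_sd, statistics.pstdev(scores)))
print(math.isclose(sample_sd, statistics.stdev(scores)))
```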

| + | <br /> | ||

| + | |||

| + | ==== Z Score ==== | ||

| + | Z-Score represents how far from the mean a particular value is based on the number of standard deviations. In other words, a z-score tells us how many standard deviations away a value is from the mean. | ||

| + | |||

| + | Z-Scores are also known as standard scores. | ||

| + | |||

| + | Note: mean and standard deviation are sensitive to outliers. | ||

| + | |||

| + | |||

| + | We use the following formula to calculate a z-score. https://www.statology.org/z-score-python/ | ||

| + | |||

| + | <math> | ||

| + | z = (x - \mu)/\sigma | ||

| + | </math> | ||

| + | |||

| + | * <math>x</math> is a single raw data value | ||

| + | * <math>\mu</math> is the population mean | ||

| + | * <math>\sigma</math> is the population standard deviation | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | In Python: | ||

| + | |||

| + | scipy.stats.zscore(a, axis=0, ddof=0, nan_policy='propagate') https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.zscore.html | ||

| + | |||

| + | Compute the z score of each value in the sample, relative to the sample mean and standard deviation. | ||

| + | |||

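| + | <syntaxhighlight lang="python3"> | ||

| + | import numpy as np | ||

| + | from scipy import stats | ||

| + | |||

| + | # Sample values to standardize | ||

| + | a = np.array([ 0.7972, 0.0767, 0.4383, 0.7866, 0.8091, | ||

| + |               0.1954, 0.6307, 0.6599, 0.1065, 0.0508]) | ||

| + | |||

| + | # z-score of each value (ddof=0, so the standard deviation divides by N) | ||

| + | z = stats.zscore(a) | ||

| + | print(z) | ||

| + | |||

| + | # Expected values (approximately): | ||

| + | # array([ 1.12724554, -1.2469956 , -0.05542642, 1.09231569, 1.16645923, | ||

| + | #        -0.8558472 , 0.57858329, 0.67480514, -1.14879659, -1.33234306]) | ||

| + | </syntaxhighlight> | ||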

| + | |||
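To make the formula <math>z = (x - \mu)/\sigma</math> concrete, here is a minimal sketch that computes z-scores by hand with the Python standard library. The data are hypothetical. The population standard deviation is used, which corresponds to the default <code>ddof=0</code> in the <code>scipy.stats.zscore</code> signature quoted above.

```python
import statistics

values = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical raw data

mu = statistics.fmean(values)      # mean of the data
sigma = statistics.pstdev(values)  # population standard deviation (divides by N)

# z-score: number of standard deviations each value lies from the mean
z_scores = [(x - mu) / sigma for x in values]

print(z_scores)  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

By construction, the z-scores have mean 0 and population standard deviation 1.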

| + | |||

| + | <br /> | ||

| + | |||

| + | ===Shape of Distribution=== | ||

| + | The shape of the distribution of a variable is visualized by building a probability distribution plot or a histogram. There are also numerical measures (such as the skewness and the kurtosis) that describe, with a single value, some features of the shape of the distribution of a variable. [Adelo] | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Probability distribution==== | ||

| + | I am not sure what the correct terms are for this type of plot (Density - Distribution plots) | ||

| + | |||

| + | https://en.wikipedia.org/wiki/Probability_distribution#Continuous_probability_distribution | ||

| + | |||

| + | https://en.wikipedia.org/wiki/Probability_density_function | ||

| + | |||

| + | https://towardsdatascience.com/histograms-and-density-plots-in-python-f6bda88f5ac0 | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | =====The Normal Distribution===== | ||

| + | https://www.youtube.com/watch?v=rzFX5NWojp0 | ||

| + | |||

| + | https://en.wikipedia.org/wiki/Normal_distribution | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Histograms==== | ||

| + | . | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | ====Skewness==== | ||

| + | https://en.wikipedia.org/wiki/Skewness | ||

| + | |||

| + | https://www.investopedia.com/terms/s/skewness.asp | ||

| + | |||

| + | https://towardsdatascience.com/histograms-and-density-plots-in-python-f6bda88f5ac0 | ||

| + | |||

| + | https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.skew.html | ||

| + | |||

| + | |||

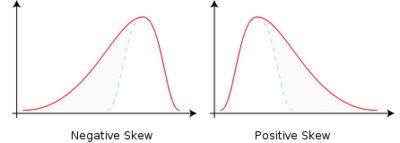

| + | Skewness is a measure that quantifies the lack of symmetry in the probability distribution of a variable. | ||

| + | |||

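| + | * <span style="background:#E6E6FA">'''Skewness = 0</span> : Symmetric distribution''' (for example, the normal distribution). A skewness of zero does not by itself imply that the data are normally distributed. | ||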

| + | |||

| + | * <span style="background:#E6E6FA">'''Skewness < 0</span> : Negative skew: The left tail is longer.''' The mass of the distribution is concentrated on the right of the figure. The distribution is said to be left-skewed, left-tailed, or skewed to the left, despite the fact that the curve itself appears to be skewed or leaning to the right; left instead refers to the left tail being drawn out and, often, the mean being skewed to the left of a typical center of the data. A left-skewed distribution usually appears as a right-leaning curve. https://en.wikipedia.org/wiki/Skewness | ||

| + | |||

| + | * <span style="background:#E6E6FA">'''Skewness > 0</span> : Positive skew: The right tail is longer.''' The mass of the distribution is concentrated on the left of the figure. The distribution is said to be right-skewed, right-tailed, or skewed to the right, despite the fact that the curve itself appears to be skewed or leaning to the left; right instead refers to the right tail being drawn out and, often, the mean being skewed to the right of a typical center of the data. A right-skewed distribution usually appears as a left-leaning curve. | ||

| + | |||

| + | |||

| + | [[File:Skewness.png|400px|thumb|center|]] | ||

| + | |||

| + | |||

| + | [[File:Relationship_between_mean_and_median_under_different_skewness.png|600px|thumb|center|Taken from https://en.wikipedia.org/wiki/Skewness]] | ||
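The sign convention above can be checked with a small sketch. It uses the moment-based coefficient <math>g_{1} = m_{3} / m_{2}^{3/2}</math>, where <math>m_{k}</math> is the k-th central moment; to my understanding this is also what <code>scipy.stats.skew</code> computes with its default settings. The helper name <code>skewness</code> and the three small data sets are hypothetical.

```python
import statistics


def skewness(values):
    """Moment-based skewness g1 = m3 / m2**1.5 (hypothetical helper)."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((x - mean) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in values) / n  # third central moment
    if m2 == 0:
        raise ValueError("skewness is undefined when all values are equal")
    return m3 / m2 ** 1.5


symmetric = [1, 2, 3, 4, 5]                 # mirror image around 3
right_tailed = [1, 2, 2, 3, 3, 3, 4, 12]    # one large value stretches the right tail
left_tailed = [-x for x in right_tailed]    # same shape, mirrored

print(skewness(symmetric))         # 0.0
print(skewness(right_tailed) > 0)  # True: positive skew
print(skewness(left_tailed) < 0)   # True: negative skew
```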

| + | |||

| + | |||

| + | <br /> | ||

| + | |||

| + | ====Kurtosis==== | ||

| + | https://www.statisticshowto.com/probability-and-statistics/statistics-definitions/kurtosis-leptokurtic-platykurtic/ | ||

| + | |||

| + | https://www.itl.nist.gov/div898/handbook/eda/section3/eda35b.htm | ||

| + | |||

| + | https://en.wikipedia.org/wiki/Kurtosis | ||

| + | |||

| + | https://www.simplypsychology.org/kurtosis.html | ||

| + | |||

| + | |||

| + | The kurtosis is a measure of the "tailedness" of the probability distribution. https://en.wikipedia.org/wiki/Kurtosis | ||

| + | |||

| + | We can say that kurtosis is a measure of the concentration of values in the tails of the distribution. This indirectly gives an idea of the concentration of values around the peak of the distribution, but it is important to know that the measure provided by the kurtosis relates to the tails. [Adelo] | ||

| + | |||

| + | |||

| + | * '''The kurtosis of any univariate normal distribution is 3.''' A univariate normal distribution is usually called just normal distribution. | ||

| + | |||

| + | * '''Platykurtic:''' Kurtosis less than 3 (Negative Kurtosis if we talk about the adjusted version of Pearson's kurtosis, the Excess kurtosis). | ||

| + | :* A negative value means that the distribution has a light tail compared to the normal distribution (which means that there is little data in the tail). | ||

| + | :* An example of a platykurtic distribution is the uniform distribution, which does not produce outliers. | ||

| + | |||

| + | * '''Leptokurtic:''' Kurtosis greater than 3 (Positive Excess kurtosis). | ||

| + | :* A positive excess kurtosis indicates that the distribution has heavy tails, which means that there is a lot of data in the tails (outliers are more likely). | ||

| + | :* An example of a leptokurtic distribution is the Laplace distribution, which has tails that asymptotically approach zero more slowly than a Gaussian and therefore produce more outliers than the normal distribution. | ||

| + | |||

| + | * This heaviness or lightness in the tails usually means that your data looks flatter (or less flat) compared to the normal distribution. | ||

| + | |||

| + | * It is also common practice to use the adjusted version of Pearson's kurtosis, the excess kurtosis, which is the kurtosis minus 3, to provide the comparison to the standard normal distribution. Some authors use "kurtosis" by itself to refer to the excess kurtosis. https://en.wikipedia.org/wiki/Kurtosis | ||

| + | |||

| + | * It must be noted that the Kurtosis is related to the tails of the distribution, not its peak; hence, the sometimes-seen characterization of kurtosis as "peakedness" is incorrect. https://en.wikipedia.org/wiki/Kurtosis | ||

| + | |||

| + | |||

| + | <br /> | ||

| + | In Python: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.kurtosis.html | ||
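As a rough sketch of the definitions above, Pearson's kurtosis can be computed as <math>m_{4} / m_{2}^{2}</math> and the excess kurtosis as that value minus 3. The helper names and data sets below are hypothetical. Note that, as far as I know, <code>scipy.stats.kurtosis</code> returns the excess kurtosis by default (<code>fisher=True</code>), so its result for a normal distribution is close to 0 rather than 3.

```python
import statistics


def kurtosis(values):
    """Pearson's kurtosis m4 / m2**2 (hypothetical helper, population moments)."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((x - mean) ** 2 for x in values) / n  # second central moment
    m4 = sum((x - mean) ** 4 for x in values) / n  # fourth central moment
    if m2 == 0:
        raise ValueError("kurtosis is undefined when all values are equal")
    return m4 / m2 ** 2


def excess_kurtosis(values):
    """Kurtosis minus 3, so that a normal distribution scores 0."""
    return kurtosis(values) - 3


evenly_spread = [1, 2, 3, 4, 5]               # no extreme values
heavy_tailed = [-10, -1, 0, 0, 0, 0, 1, 10]   # two extreme values in the tails

print(kurtosis(evenly_spread) < 3)        # True: platykurtic
print(excess_kurtosis(evenly_spread) < 0) # True: negative excess kurtosis
print(kurtosis(heavy_tailed) > 3)         # True: leptokurtic
```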

| + | |||