Data Science

Contents

- 1 Data Science and Machine Learning Courses

- 2 Data analysis basic concepts

- 3 Data Mining

- 3.1 Text mining with R - A tidy approach, Julia Silge & David Robinson

- 4 Machine Learning

- 5 Some important concepts

- 6 Programming languages and Applications for Data Science

Data Science and Machine Learning Courses

- Posts

- Udemy: https://www.udemy.com/

- Python for Data Science and Machine Learning Bootcamp - Basic level

- Machine Learning, Data Science and Deep Learning with Python - Basic level - Similar to the previous one

- Data Science: Supervised Machine Learning in Python - Higher level

- Mathematical Foundation For Machine Learning and AI

- The Data Science Course 2019: Complete Data Science Bootcamp

- Coursera - By Stanford University

- Udacity: https://eu.udacity.com/

- Columbia University - Course fees: USD 1,400

Data analysis basic concepts

- Media:Exploration_of_the_Darts_dataset_using_statistics.pdf

- Media:Exploration_of_the_Darts_datase_using_statistics.zip

Data analysis is the process of inspecting, cleansing, transforming, and modelling data with a goal of discovering useful information, suggesting conclusions, and supporting decision-making.

Data mining is a particular data analysis technique that focuses on the modelling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing on business information.

In statistical applications, data analysis can be divided into:

- Descriptive Data Analysis:

- Rather than finding hidden information in the data, descriptive data analysis summarizes the dataset.

- Commonly used descriptive measures include:

- Central tendency (Mean, Mode, Median)

- Variability (Standard deviation, Min/Max)

- Exploratory data analysis (EDA):

- Generating summaries and making general statements about the data, and about the relationships within it, is the heart of exploratory data analysis.

- We generally draw conclusions about the entire population but mostly work with small samples. Why are we allowed to do this? Two definitions are important:

- Population: A precise definition of all possible outcomes, measurements or values about which inferences will be made.

- Sample: A portion of the population which is representative of the population (at least ideally).

- Confirmatory data analysis (CDA):

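- Confirmatory data analysis tests hypotheses that were stated before looking at the data, typically with statistical hypothesis tests. It should not be confused with confirmatory factor analysis (CFA), which is a special form of factor analysis most commonly used in social research.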
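The descriptive measures listed above can be computed directly in base R. The sketch below uses a small made-up vector of dart scores (hypothetical data, not the Darts dataset linked above). Base R has no function for the statistical mode, so the helper stat_mode() is an illustrative assumption.

```r
# Hypothetical sample of dart scores (illustrative data only)
scores <- c(20, 5, 20, 1, 18, 20, 5, 12)

# stat_mode() is a hypothetical helper: base R has no statistical mode function
stat_mode <- function(x) {
  counts <- table(x)
  as.numeric(names(counts)[which.max(counts)])
}

# Central tendency and variability in one named vector
summary_stats <- c(
  mean   = mean(scores),
  median = median(scores),
  mode   = stat_mode(scores),
  sd     = sd(scores),
  min    = min(scores),
  max    = max(scores)
)
print(summary_stats)
```

Note that sd() computes the sample standard deviation (dividing by n - 1), which is the usual choice when the data are a sample rather than the whole population.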

Data Mining

Data mining is the process of discovering patterns in large data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science and statistics with an overall goal to extract information (with intelligent methods) from a data set and transform the information into a comprehensible structure for further use. https://en.wikipedia.org/wiki/Data_mining

Text mining with R - A tidy approach, Julia Silge & David Robinson

Media:Silge_julia_robinson_david-text_mining_with_r_a_tidy_approach.zip

This book serves as an introduction to text mining using the tidytext package and other tidy tools in R.

Using tidy data principles is a powerful way to make handling data easier and more effective, and this is no less true when it comes to dealing with text.

The Tidy Text Format

As described by Hadley Wickham (Wickham 2014), tidy data has a specific structure:

- Each variable is a column.

- Each observation is a row.

- Each type of observational unit is a table.

We thus define the tidy text format as being a table with one token per row. A token is a meaningful unit of text, such as a word, that we are interested in using for analysis, and tokenization is the process of splitting text into tokens.

This one-token-per-row structure is in contrast to the ways text is often stored in current analyses, perhaps as strings or in a document-term matrix. For tidy text mining, the token that is stored in each row is most often a single word, but can also be an n-gram, sentence, or paragraph. In the tidytext package, we provide functionality to tokenize by commonly used units of text like these and convert to a one-term-per-row format.

At the same time, the tidytext package doesn't expect a user to keep text data in a tidy form at all times during an analysis. The package includes functions to tidy() objects (see the broom package [Robinson, cited above]) from popular text mining R packages such as tm (Feinerer et al. 2008) and quanteda (Benoit and Nulty 2016). This allows, for example, a workflow where importing, filtering, and processing is done using dplyr and other tidy tools, after which the data is converted into a document-term matrix for machine learning applications. The models can then be reconverted into a tidy form for interpretation and visualization with ggplot2.

Contrasting Tidy Text with Other Data Structures

As we stated above, we define the tidy text format as being a table with one token per row. Structuring text data in this way means that it conforms to tidy data principles and can be manipulated with a set of consistent tools. This is worth contrasting with the ways text is often stored in text mining approaches:

- String: Text can, of course, be stored as strings (i.e., character vectors) within R, and often text data is first read into memory in this form.

- Corpus: These types of objects typically contain raw strings annotated with additional metadata and details.

- Document-term matrix: This is a sparse matrix describing a collection (i.e., a corpus) of documents with one row for each document and one column for each term. The value in the matrix is typically word count or tf-idf (see Chapter 3).

Let’s hold off on exploring corpus and document-term matrix objects until Chapter 5, and get down to the basics of converting text to a tidy format.

The unnest_tokens Function

Emily Dickinson wrote some lovely text in her time:

text <- c("Because I could not stop for Death",

"He kindly stopped for me",

"The Carriage held but just Ourselves",

"and Immortality")

This is a typical character vector that we might want to analyze. In order to turn it into a tidy text dataset, we first need to put it into a data frame:

library(dplyr)

text_df = tibble(line = 1:4, text = text)

text_df

## Result

## A tibble: 4 x 2

# line text

# <int> <chr>

#1 1 Because I could not stop for Death

#2 2 He kindly stopped for me

#3 3 The Carriage held but just Ourselves

#4 4 and Immortality

This converts the character vector text into a tibble. A tibble is a modern class of data frame within R, available in the dplyr and tibble packages, that has a convenient print method, does not convert strings to factors, and does not use row names. Tibbles are great for use with tidy tools.

Notice that this data frame containing text isn't yet compatible with tidy text analysis. We can't filter out words or count which occur most frequently, since each row is made up of multiple combined words. We need to convert this so that it has one token per document per row.

In this first example, we only have one document (the poem), but we will explore examples with multiple documents soon. Within our tidy text framework, we need to both break the text into individual tokens (a process called tokenization) and transform it to a tidy data structure.

To convert a data frame (a tibble in this case) into a tidy data structure, we use the unnest_tokens() function from library(tidytext).

library(tidytext)

text_df %>%

unnest_tokens(word, text)

# Result

# A tibble: 20 x 2

line word

<int> <chr>

1 1 because

2 1 i

3 1 could

4 1 not

5 1 stop

6 1 for

7 1 death

8 2 he

9 2 kindly

10 2 stopped

11 2 for

12 2 me

13 3 the

14 3 carriage

15 3 held

16 3 but

17 3 just

18 3 ourselves

19 4 and

20 4 immortality

The two basic arguments to unnest_tokens used here are column names. First we have the output column name that will be created as the text is unnested into it (word, in this case), and then the input column that the text comes from (text, in this case). Remember that text_df above has a column called text that contains the data of interest.

After using unnest_tokens, we've split each row so that there is one token (word) in each row of the new data frame; the default tokenization in unnest_tokens() is for single words, as shown here. Also notice:

- Other columns, such as the line number each word came from, are retained.

- Punctuation has been stripped.

- By default, unnest_tokens() converts the tokens to lowercase, which makes them easier to compare or combine with other datasets. (Use the to_lower = FALSE argument to turn off this behavior).
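To make the one-token-per-row idea concrete, here is a rough base R approximation of what unnest_tokens() does for word tokens. It is only a sketch: tidytext delegates the actual splitting to the tokenizers package, whose rules are more refined than the simple regular expression assumed here.

```r
text <- c("Because I could not stop for Death",
          "He kindly stopped for me",
          "The Carriage held but just Ourselves",
          "and Immortality")

# Lowercase each line, then split on runs of characters that are not
# letters or apostrophes (a simplification of real word tokenization)
tokens <- strsplit(tolower(text), "[^a-z']+")

# One row per token, keeping the line number each token came from
tidy_text <- data.frame(
  line = rep(seq_along(tokens), lengths(tokens)),
  word = unlist(tokens),
  stringsAsFactors = FALSE
)

print(nrow(tidy_text))
print(head(tidy_text, 7))
```

On this short poem the sketch yields the same 20 rows as the unnest_tokens() output above; on messier text (numbers, accented characters, hyphenation) the two would likely differ.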

Having the text data in this format lets us manipulate, process, and visualize the text using the standard set of tidy tools, namely dplyr, tidyr, and ggplot2, as shown in the following figure:

Tidying the Works of Jane Austen

Let's use the text of Jane Austen's six completed, published novels from the janeaustenr package (Silge 2016), and transform them into a tidy format. The janeaustenr package provides these texts in a one-row-per-line format, where a line in this context is analogous to a literal printed line in a physical book.

- We start by creating a variable with the janeaustenr data using the austen_books() function:

- Then we use group_by(book) [from library(dplyr)] to group the data by book:

- We use mutate() [from library(dplyr)] to add two columns to the data frame: linenumber and chapter.

- To recover the chapter, we use the str_detect() and regex() functions from library(stringr).

library(janeaustenr)

library(dplyr)

library(stringr)

original_books <- austen_books() %>%

group_by(book) %>%

mutate(linenumber = row_number(),

chapter = cumsum(str_detect(text, regex("^chapter [\\divxlc]",ignore_case = TRUE)))) %>%

ungroup()

original_books

# Result:

# A tibble: 73,422 x 4

text book linenumber chapter

<chr> <fct> <int> <int>

1 SENSE AND SENSIBILITY Sense & Sensibility 1 0

2 "" Sense & Sensibility 2 0

3 by Jane Austen Sense & Sensibility 3 0

4 "" Sense & Sensibility 4 0

5 (1811) Sense & Sensibility 5 0

6 "" Sense & Sensibility 6 0

7 "" Sense & Sensibility 7 0

8 "" Sense & Sensibility 8 0

9 "" Sense & Sensibility 9 0

10 CHAPTER 1 Sense & Sensibility 10 1

# … with 73,412 more rows
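The chapter column relies on a small trick: str_detect() returns TRUE on each chapter heading, and cumsum() turns those flags into a running chapter counter. Here is a minimal sketch on a made-up vector of lines, using base R's grepl() in place of stringr's str_detect() so that it runs without extra packages:

```r
# Hypothetical lines standing in for the text column of austen_books()
lines <- c("SENSE AND SENSIBILITY", "", "CHAPTER 1", "The family of Dashwood",
           "had long been settled", "CHAPTER 2", "Mrs. John Dashwood")

# TRUE where a line starts with "chapter" followed by a digit or roman numeral
is_heading <- grepl("^chapter [0-9ivxlc]", lines, ignore.case = TRUE)

# The cumulative sum of the flags is the chapter number of each line;
# lines before the first heading get chapter 0
chapter <- cumsum(is_heading)
print(data.frame(lines, chapter))
```

This is why the title lines in the output above have chapter 0 and the line "CHAPTER 1" is the first with chapter 1.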


- To work with this as a tidy dataset, we need to restructure it in the one-token-per-row format, which as we saw earlier is done with the unnest_tokens() function.

library(tidytext)

tidy_books <- original_books %>%

unnest_tokens(word, text)

tidy_books

# Result

# A tibble: 725,055 x 4

book linenumber chapter word

<fct> <int> <int> <chr>

1 Sense & Sensibility 1 0 sense

2 Sense & Sensibility 1 0 and

3 Sense & Sensibility 1 0 sensibility

4 Sense & Sensibility 3 0 by

5 Sense & Sensibility 3 0 jane

6 Sense & Sensibility 3 0 austen

7 Sense & Sensibility 5 0 1811

8 Sense & Sensibility 10 1 chapter

9 Sense & Sensibility 10 1 1

10 Sense & Sensibility 13 1 the

# ... with 725,045 more rows

The default tokenizing is for words, but other options include characters, n-grams, sentences, lines, paragraphs, or separation around a regex pattern.

- Often in text analysis, we will want to remove stop words, which are words that are not useful for an analysis, typically extremely common words such as "the", "of", "to", and so forth in English. We can remove stop words (kept in the tidytext dataset stop_words) with an anti_join().

tidy_books <- tidy_books %>%

anti_join(stop_words)

tidy_books

# Result:

# A tibble: 217,609 x 4

book linenumber chapter word

<fct> <int> <int> <chr>

1 Sense & Sensibility 1 0 sense

2 Sense & Sensibility 1 0 sensibility

3 Sense & Sensibility 3 0 jane

4 Sense & Sensibility 3 0 austen

5 Sense & Sensibility 5 0 1811

6 Sense & Sensibility 10 1 chapter

7 Sense & Sensibility 10 1 1

8 Sense & Sensibility 13 1 family

9 Sense & Sensibility 13 1 dashwood

10 Sense & Sensibility 13 1 settled

# ... with 217,599 more rows

The stop_words dataset in the tidytext package contains stop words from three lexicons. We can use them all together, as we have here, or filter() to only use one set of stop words if that is more appropriate for a certain analysis.

- We can also use dplyr's count() to find the most common words in all the books as a whole:

tidy_books %>%

count(word, sort = TRUE)

# Result

# A tibble: 13,914 x 2

word n

<chr> <int>

1 miss 1855

2 time 1337

3 fanny 862

4 dear 822

5 lady 817

6 sir 806

7 day 797

8 emma 787

9 sister 727

10 house 699

# ... with 13,904 more rows

- Because we've been using tidy tools, our word counts are stored in a tidy data frame. This allows us to pipe directly to the ggplot2 package, for example to create a visualization of the most common words:

library(ggplot2)

tidy_books %>%

count(word, sort = TRUE) %>%

filter(n > 600) %>%

mutate(word = reorder(word, n)) %>%

ggplot(aes(word, n)) + geom_col() + xlab(NULL) + coord_flip()

The gutenbergr Package

Word Frequencies

Chapter 2 - Sentiment Analysis with Tidy Data

Let's address the topic of opinion mining or sentiment analysis. When human readers approach a text, we use our understanding of the emotional intent of words to infer whether a section of text is positive or negative, or perhaps characterized by some other more nuanced emotion like surprise or disgust. We can use the tools of text mining to approach the emotional content of text programmatically, as shown in the next figure:

One way to analyze the sentiment of a text is to consider the text as a combination of its individual words, and the sentiment content of the whole text as the sum of the sentiment content of the individual words. This isn't the only way to approach sentiment analysis, but it is an often-used approach, and an approach that naturally takes advantage of the tidy tool ecosystem.

The tidytext package contains several sentiment lexicons in the sentiments dataset.

The three general-purpose lexicons are:

- Bing lexicon from Bing Liu and collaborators: Categorizes words in a binary fashion into positive and negative categories.

- AFINN lexicon from Finn Årup Nielsen: Assigns words with a score that runs between -5 and 5, with negative scores indicating negative sentiment and positive scores indicating positive sentiment.

- NRC lexicon from Saif Mohammad and Peter Turney: Categorizes words in a binary fashion ("yes"/"no") into categories of positive, negative, anger, anticipation, disgust, fear, joy, sadness, surprise, and trust.

All three lexicons are based on unigrams, i.e., single words. These lexicons contain many English words and the words are assigned scores for positive/negative sentiment, and also possibly emotions like joy, anger, sadness, and so forth.

All of this information is tabulated in the sentiments dataset, and tidytext provides the function get_sentiments() to get specific sentiment lexicons without the columns that are not used in that lexicon.

install.packages('tidytext')

library(tidytext)

sentiments

# A tibble: 27,314 x 4

word sentiment lexicon score

<chr> <chr> <chr> <int>

1 abacus trust nrc NA

2 abandon fear nrc NA

3 abandon negative nrc NA

4 abandon sadness nrc NA

5 abandoned anger nrc NA

6 abandoned fear nrc NA

7 abandoned negative nrc NA

8 abandoned sadness nrc NA

9 abandonment anger nrc NA

10 abandonment fear nrc NA

# ... with 27,304 more rows

get_sentiments("bing")

# A tibble: 6,788 x 2

word sentiment

<chr> <chr>

1 2-faced negative

2 2-faces negative

3 a+ positive

4 abnormal negative

5 abolish negative

6 abominable negative

7 abominably negative

8 abominate negative

9 abomination negative

10 abort negative

# ... with 6,778 more rows

get_sentiments("afinn")

# A tibble: 2,476 x 2

word score

<chr> <int>

1 abandon -2

2 abandoned -2

3 abandons -2

4 abducted -2

5 abduction -2

6 abductions -2

7 abhor -3

8 abhorred -3

9 abhorrent -3

10 abhors -3

# ... with 2,466 more rows

get_sentiments("nrc")

# A tibble: 13,901 x 2

word sentiment

<chr> <chr>

1 abacus trust

2 abandon fear

3 abandon negative

4 abandon sadness

5 abandoned anger

6 abandoned fear

7 abandoned negative

8 abandoned sadness

9 abandonment anger

10 abandonment fear

# ... with 13,891 more rows

How were these sentiment lexicons put together and validated? They were constructed via either crowdsourcing (using, for example, Amazon Mechanical Turk) or by the labor of one of the authors, and were validated using some combination of crowdsourcing again, restaurant or movie reviews, or Twitter data. Given this information, we may hesitate to apply these sentiment lexicons to styles of text dramatically different from what they were validated on, such as narrative fiction from 200 years ago. While it is true that using these sentiment lexicons with, for example, Jane Austen's novels may give us less accurate results than with tweets sent by a contemporary writer, we still can measure the sentiment content for words that are shared across the lexicon and the text.

There are also some domain-specific sentiment lexicons available, constructed to be used with text from a specific content area. "Example: Mining Financial Articles" on page 81 explores an analysis using a sentiment lexicon specifically for finance.

Dictionary-based methods like the ones we are discussing find the total sentiment of a piece of text by adding up the individual sentiment scores for each word in the text.

Not every English word is in the lexicons because many English words are pretty neutral. It is important to keep in mind that these methods do not take into account qualifiers before a word, such as in "no good" or "not true"; a lexicon-based method like this is based on unigrams only. For many kinds of text (like the narrative examples below), there are no sustained sections of sarcasm or negated text, so this is not an important effect. Also, we can use a tidy text approach to begin to understand what kinds of negation words are important in a given text; see Chapter 9 for an extended example of such an analysis.
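The following base R sketch illustrates both points: how a dictionary-based method adds up word scores, and why it is blind to negation. The miniature lexicon and its scores are invented for illustration and do not come from AFINN, Bing, or NRC.

```r
# Hypothetical miniature lexicon in the AFINN style (scores are made up)
lexicon <- c(good = 3, true = 2, bad = -3, sad = -2)

# Sum the scores of the words found in the lexicon;
# words that are not in the lexicon contribute nothing
score_text <- function(text) {
  words <- strsplit(tolower(text), "[^a-z]+")[[1]]
  sum(lexicon[words], na.rm = TRUE)
}

print(score_text("a good day"))      # "good" is the only scored word
print(score_text("no good at all"))  # same score: the qualifier "no" is ignored
```

Both sentences receive the same score because only the unigram "good" is looked up, which is exactly the limitation described above.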

Sentiment Analysis with Inner Join

What are the most common joy words in Emma

Let's look at the words with a joy score from the NRC lexicon.

First, we need to take the text of the novel and convert the text to the tidy format using unnest_tokens(), just as we did in the section Tidying the Works of Jane Austen. Let's also set up some other columns to keep track of which line and chapter of the book each word comes from; we use group_by and mutate to construct those columns:

library(janeaustenr)

library(tidytext)

library(dplyr)

library(stringr)

tidy_books <- austen_books() %>%

group_by(book) %>%

mutate(linenumber = row_number(),

chapter = cumsum(str_detect(text, regex("^chapter [\\divxlc]",ignore_case = TRUE)))) %>%

ungroup() %>%

unnest_tokens(word, text)

tidy_books

Notice that we chose the name word for the output column from unnest_tokens(). This is a convenient choice because the sentiment lexicons and stop-word datasets have columns named word; performing inner joins and anti-joins is thus easier.

Now that the text is in a tidy format with one word per row, we are ready to do the sentiment analysis:

- First, let's use the NRC lexicon and filter() for the joy words.

- Next, let's filter() the data frame with the text from the book for the words from Emma.

- Then, we use inner_join() to perform the sentiment analysis. What are the most common joy words in Emma? Let's use count() from dplyr.

nrcjoy <- get_sentiments("nrc") %>%

filter(sentiment == "joy")

tidy_books %>%

filter(book == "Emma") %>%

inner_join(nrcjoy) %>%

count(word, sort = TRUE)

# Result:

# A tibble: 298 x 2

word n

<chr> <int>

1 friend 166

2 hope 143

3 happy 125

4 love 117

5 deal 92

6 found 92

7 happiness 76

8 pretty 68

9 true 66

10 comfort 65

# ... with 288 more rows

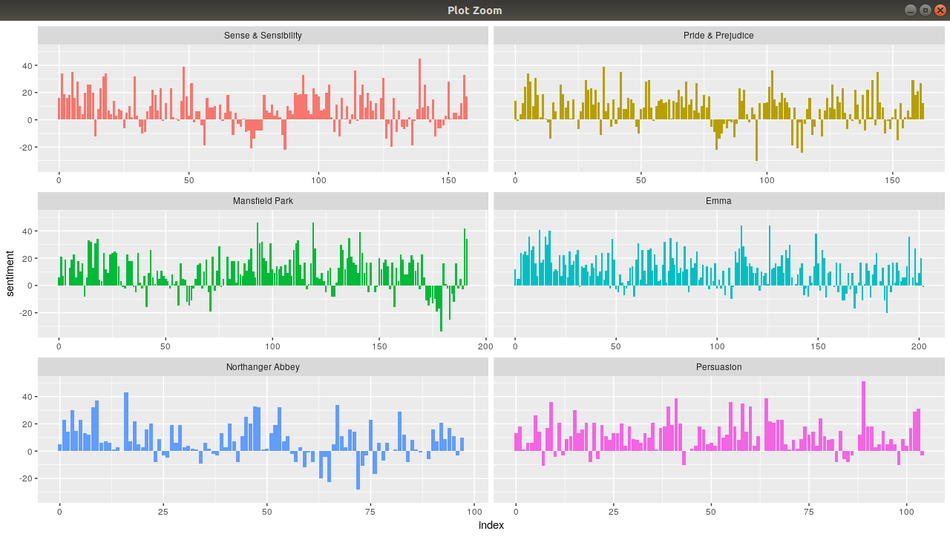

We could examine how sentiment changes throughout each novel

- First, we need to find a sentiment value for each word in the austen_books() dataset:

- We are going to use the Bing lexicon.

- Using an inner_join() as explained above, we can have a sentiment value for each of the words in the austen_books() dataset that have been defined in the Bing lexicon:

janeaustensentiment <- tidy_books %>%

inner_join(get_sentiments("bing")) %>%

- Next, we count up how many positive and negative words there are in defined sections of each book:

- We are going to define a section as a number of lines (80 lines in this case)

- Small sections of text may not have enough words in them to get a good estimate of sentiment, while really large sections can wash out narrative structure. For these books, using 80 lines works well, but this can vary depending on individual texts, how long the lines were to start with, etc.

count(book, index = linenumber %/% 80, sentiment) %>%

Note how we define sections of 80 lines using the count() command:

- The count() command counts the rows that share the same values of the specified arguments. In this case we want to count the number of rows with the same value of book, index (index = linenumber %/% 80), and sentiment.

- The %/% operator does integer division (x %/% y is equivalent to floor(x/y))

- So index = linenumber %/% 80 means that, for example, the first row (linenumber = 1) is going to have an index value of 0 (because 1 %/% 80 = 0). Thus:

- linenumber = 1 --> index = 1 %/% 80 = 0

- linenumber = 79 --> index = 79 %/% 80 = 0

- linenumber = 80 --> index = 80 %/% 80 = 1

- linenumber = 159 --> index = 159 %/% 80 = 1

- linenumber = 160 --> index = 160 %/% 80 = 2

- ...

- This way, the count() command is counting how many positive and negative words there are every 80 lines.
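The index arithmetic above can be checked in base R. The second part of the sketch counts how many of 200 hypothetical line numbers fall into each section, which mimics what count() does for a single grouping column.

```r
# The line numbers used in the list above
linenumber <- c(1, 79, 80, 159, 160)
index <- linenumber %/% 80
print(data.frame(linenumber, index))

# Hypothetical book of 200 lines: how many lines land in each section?
lines_per_section <- table(index = (1:200) %/% 80)
print(lines_per_section)
```

Because line numbers start at 1 rather than 0, the first section (index 0) holds only lines 1 to 79, one line fewer than the full sections that follow.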

- Then we use the spread() command [from library(tidyr)] so that we have negative and positive sentiment in separate columns:

library(tidyr)

spread(sentiment, n, fill = 0) %>%

- We lastly calculate a net sentiment (positive - negative):

mutate(sentiment = positive - negative)

- Now we can plot these sentiment scores across the plot trajectory of each novel:

- Notice that we are plotting against the index on the x-axis that keeps track of narrative time in sections of text.

library(ggplot2)

ggplot(janeaustensentiment, aes(index, sentiment, fill = book)) +

geom_col(show.legend = FALSE) +

facet_wrap(~book, ncol = 2, scales = "free_x")

Combining the five previous fragments into a single script:

library(tidyr)

library(ggplot2)

janeaustensentiment <- tidy_books %>%

inner_join(get_sentiments("bing")) %>%

count(book, index = linenumber %/% 80, sentiment) %>%

spread(sentiment, n, fill = 0) %>%

mutate(sentiment = positive - negative)

ggplot(janeaustensentiment, aes(index, sentiment, fill = book)) +

geom_col(show.legend = FALSE) +

facet_wrap(~book, ncol = 2, scales = "free_x")
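To see what the count(), spread(), and mutate() steps do to the data, here is a base R sketch on a tiny invented data frame. It only imitates the shape of the result with table(); it is not how dplyr or tidyr are implemented.

```r
# Hypothetical sentiment-tagged words with their 80-line section index
toy <- data.frame(
  index = c(0, 0, 0, 1, 1),
  sentiment = c("positive", "negative", "positive", "negative", "negative")
)

# count() step: number of words per (index, sentiment) pair
counts <- table(index = toy$index, sentiment = toy$sentiment)

# spread() step: one column per sentiment, zero where a combination is absent
wide <- as.data.frame.matrix(counts)

# mutate() step: net sentiment per section
wide$net <- wide$positive - wide$negative
print(wide)
```

Section 1 has no positive words, so its positive column is filled with 0, which is the role of fill = 0 in spread(). In current tidyr releases spread() is superseded by pivot_wider(), although spread() still works.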

Comparing the Three Sentiment Dictionaries

pride_prejudice <- tidy_books %>%

filter(book == "Pride & Prejudice")

pride_prejudice

afinn <- pride_prejudice %>%

inner_join(get_sentiments("afinn")) %>%

group_by(index = linenumber %/% 80) %>%

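# Note: in recent tidytext versions, where AFINN comes from the textdata package, this column is named value instead of score

summarise(sentiment = sum(score)) %>%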

mutate(method = "AFINN")

bing_and_nrc <- bind_rows(

pride_prejudice %>%

inner_join(get_sentiments("bing")) %>%

mutate(method = "Bing et al."),

pride_prejudice %>%

inner_join(get_sentiments("nrc") %>%

filter(sentiment %in% c("positive","negative"))) %>%

mutate(method = "NRC")) %>%

count(method, index = linenumber %/% 80, sentiment) %>%

spread(sentiment, n, fill = 0) %>%

mutate(sentiment = positive - negative)

bind_rows(afinn, bing_and_nrc) %>%

ggplot(aes(index, sentiment, fill = method)) +

geom_col(show.legend = FALSE) +

facet_wrap(~method, ncol = 1, scales = "free_y")

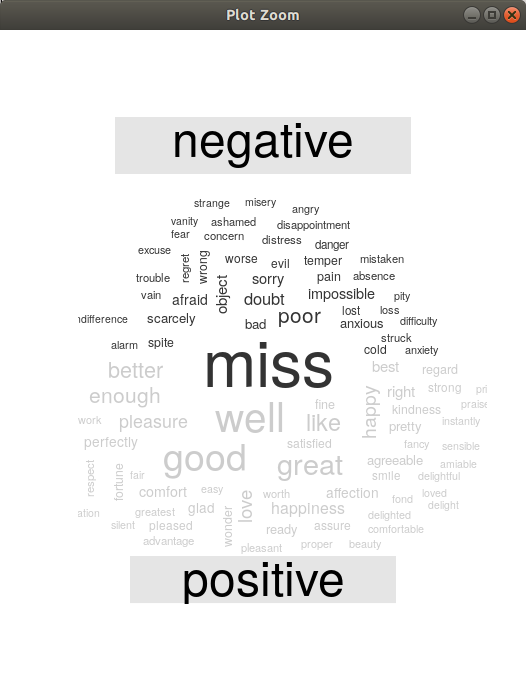

Most Common Positive and Negative Words

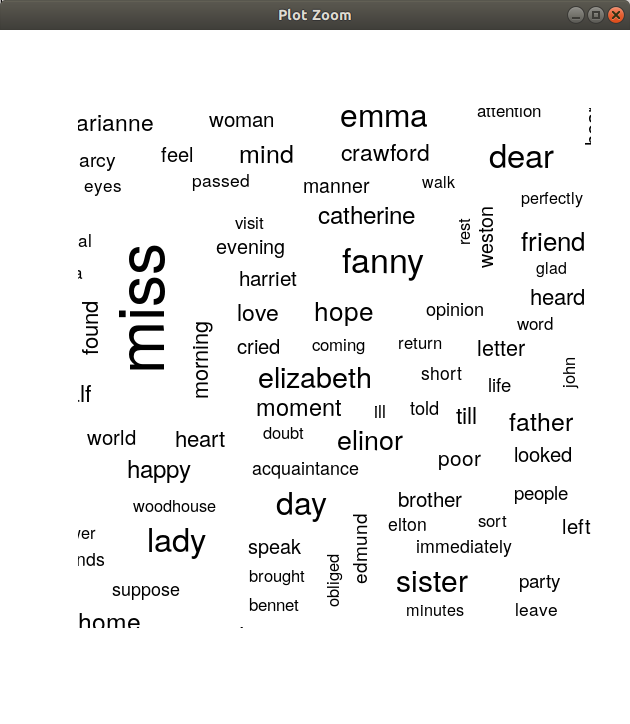

Wordclouds

Using the wordcloud package, which uses base R graphics, let's look at the most common words in Jane Austen's works as a whole again, but this time as a wordcloud.

library(wordcloud)

tidy_books %>%

anti_join(stop_words) %>%

count(word) %>%

with(wordcloud(word, n, max.words = 100))

library(reshape2)

tidy_books %>%

inner_join(get_sentiments("bing")) %>%

count(word, sentiment, sort = TRUE) %>%

acast(word ~ sentiment, value.var = "n", fill = 0) %>%

comparison.cloud(colors = c("gray20", "gray80"), max.words = 100)

These kinds of plots show some problems on my installation: not all the words are plotted, or they are not displayed properly in the plot.

Looking at Units Beyond Just Words

Units made up of several words

Sometimes it is useful or necessary to look at different units of text. For example, some sentiment analysis algorithms look beyond only unigrams (i.e., single words) to try to understand the sentiment of a sentence as a whole. These algorithms try to understand that "I am not having a good day" is a sad sentence, not a happy one, because of negation.

Such sentiment analysis algorithms can be performed with the following R packages:

- coreNLP (Arnold and Tilton 2016)

- cleanNLP (Arnold 2016)

- sentimentr (Rinker 2017)

For these, we may want to tokenize text into sentences, and it makes sense to use a new name for the output column in such a case.

Example 1:

PandP_sentences <- tibble(text = prideprejudice) %>%

unnest_tokens(sentence, text, token = "sentences")

Example 2:

austen_books()$text[45:83]

austen_books_sentences <- austen_books()[45:83,1:2] %>%

unnest_tokens(sentence, text, token = "sentences")

austen_books_sentences

The sentence tokenizing does seem to have a bit of trouble with UTF-8 encoded text, especially with sections of dialogue; it does much better with punctuation in ASCII. One possibility, if this is important, is to try using iconv() with something like iconv(text, to = 'latin1') in a mutate statement before unnesting.

Another option in unnest_tokens() is to split into tokens using a regex pattern. We could use the following script, for example, to split the text of Jane Austen's novels into a data frame by chapter:

austen_chapters <- austen_books() %>%

group_by(book) %>%

unnest_tokens(chapter, text, token = "regex", pattern = "Chapter|CHAPTER [\\dIVXLC]") %>%

ungroup()

austen_chapters %>%

group_by(book) %>%

summarise(chapters = n())

We have recovered the correct number of chapters in each novel (plus an "extra" row for each novel title). In the austen_chapters data frame, each row corresponds to one chapter.

Chapter 7 - Case Study - Comparing Twitter Archives

Machine Learning

What is Machine Learning

While trying to find a definition of ML, I realized that many experts agree that there is no standard definition of ML.

This post explains the definition of ML well: https://machinelearningmastery.com/what-is-machine-learning/

These videos are also excellent for understanding what ML is:

- https://www.youtube.com/watch?v=f_uwKZIAeM0

- https://www.youtube.com/watch?v=ukzFI9rgwfU

- https://www.youtube.com/watch?v=WXHM_i-fgGo

- https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v

One of the most cited definitions is Tom Mitchell's. In his book Machine Learning, he provides a definition in the opening line of the preface:

Tom Mitchell

The field of machine learning is concerned with the question of how to construct computer programs that automatically improve with experience.

So, in short, we can say that ML is about writing computer programs that improve themselves.

Tom Mitchell also provides a more complex and formal definition:

Tom Mitchell

A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.

Don't let the definition of terms scare you off; this is a very useful formalism. It can be used as a design tool to help us think clearly about:

- E: What data to collect.

- T: What decisions the software needs to make.

- P: How we will evaluate its results.

Suppose your email program watches which emails you do or do not mark as spam, and based on that learns how to better filter spam. In this case: https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v

- E: Watching you label emails as spam or not spam.

- T: Classifying emails as spam or not spam.

- P: The number (or fraction) of emails correctly classified as spam/not spam.
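The performance measure P in this example is easy to compute. A minimal sketch in base R, with invented labels (the vectors below are hypothetical and only illustrate the calculation):

```r
# Hypothetical labels: what the user marked versus what the filter predicted
actual    <- c("spam", "ham", "spam", "ham", "ham")
predicted <- c("spam", "ham", "ham",  "ham", "spam")

# P: fraction of emails classified correctly
accuracy <- mean(predicted == actual)
print(accuracy)
```

In Mitchell's terms, the program learns if this fraction goes up as it watches more labeled emails (experience E).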

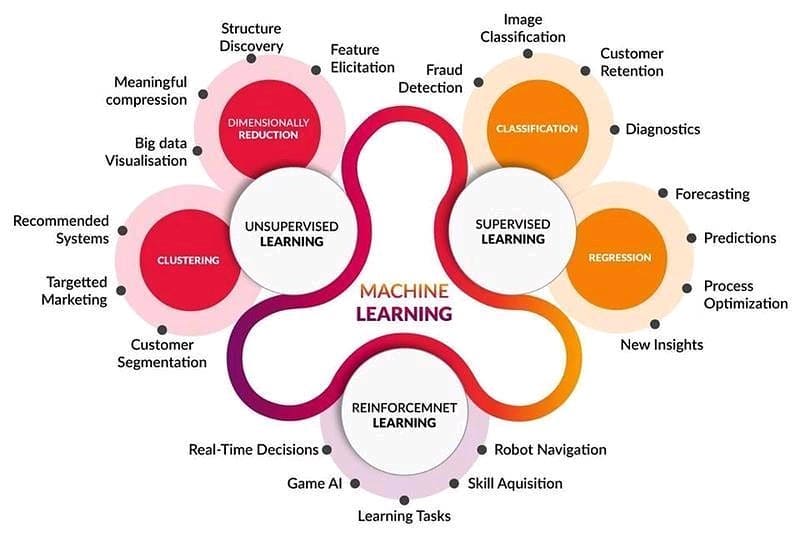

Types of Machine Learning

Supervised Learning

https://en.wikipedia.org/wiki/Supervised_learning

- Supervised learning is the machine learning task of learning a function that maps an input to an output based on example input-output pairs.

- In other words, it infers a function from labeled training data consisting of a set of training examples.

- In supervised learning, each example is a pair consisting of an input object (typically a vector) and a desired output value (also called the supervisory signal).

A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples.

https://machinelearningmastery.com/supervised-and-unsupervised-machine-learning-algorithms/

The majority of practical machine learning uses supervised learning.

Supervised learning is when you have input variables (X) and an output variable (Y) and you use an algorithm to learn the mapping function from the input to the output.

Y = f(X)

The goal is to approximate the mapping function so well that when you have new input data (X) you can predict the output variable (Y) for that data.
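As a minimal sketch of this idea, the base R code below learns an approximation of f from labeled examples with linear regression and then predicts Y for new inputs. The data are simulated, and the true relationship Y = 2X + 1 plus noise is an assumption made purely for illustration.

```r
set.seed(42)

# Hypothetical training data: Y depends linearly on X, plus random noise
X <- runif(50, 0, 10)
Y <- 2 * X + 1 + rnorm(50, sd = 0.5)
train <- data.frame(X, Y)

# Learn an approximation of f from the labeled examples
model <- lm(Y ~ X, data = train)
print(coef(model))

# Predict Y for inputs the model has not seen
new_data <- data.frame(X = c(2.5, 7))
predictions <- predict(model, newdata = new_data)
print(predictions)
```

The estimated coefficients should land close to the intercept 1 and slope 2 used to simulate the data, so the predictions approximate 2X + 1 for the new inputs.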

It is called supervised learning because the process of an algorithm learning from the training dataset can be thought of as a teacher supervising the learning process. We know the correct answers; the algorithm iteratively makes predictions on the training data and is corrected by the teacher. Learning stops when the algorithm achieves an acceptable level of performance.

https://www.datascience.com/blog/supervised-and-unsupervised-machine-learning-algorithms

Supervised machine learning is the more commonly used of the two. It includes such algorithms as linear and logistic regression, multi-class classification, and support vector machines. Supervised learning is so named because the data scientist acts as a guide to teach the algorithm what conclusions it should come up with. It’s similar to the way a child might learn arithmetic from a teacher. Supervised learning requires that the algorithm’s possible outputs are already known and that the data used to train the algorithm is already labeled with correct answers. For example, a classification algorithm will learn to identify animals after being trained on a dataset of images that are properly labeled with the species of the animal and some identifying characteristics.

Supervised Classification

Supervised Classification with R: http://www.cmap.polytechnique.fr/~lepennec/R/Learning/Learning.html

Unsupervised Learning

Reinforcement Learning

Machine Learning Algorithms

Boosting

Gradient boosting

https://medium.com/mlreview/gradient-boosting-from-scratch-1e317ae4587d

https://freakonometrics.hypotheses.org/tag/gradient-boosting

https://en.wikipedia.org/wiki/Gradient_boosting

http://arogozhnikov.github.io/2016/06/24/gradient_boosting_explained.html

Boosting is a machine learning ensemble meta-algorithm for primarily reducing bias, and also variance in supervised learning, and a family of machine learning algorithms that convert weak learners to strong ones. Boosting is based on the question posed by Kearns and Valiant (1988, 1989): "Can a set of weak learners create a single strong learner?" A weak learner is defined to be a classifier that is only slightly correlated with the true classification (it can label examples better than random guessing). In contrast, a strong learner is a classifier that is arbitrarily well-correlated with the true classification. https://en.wikipedia.org/wiki/Gradient_boosting

Gradient boosting is a machine learning technique for regression and classification problems, which produces a prediction model in the form of an ensemble of weak prediction models, typically decision trees. It builds the model in a stage-wise fashion like other boosting methods do, and it generalizes them by allowing optimization of an arbitrary differentiable loss function.

Boosting is a sequential process; i.e., trees are grown using the information from a previously grown tree one after the other. This process slowly learns from data and tries to improve its prediction in subsequent iterations. Let's look at a classic classification example:

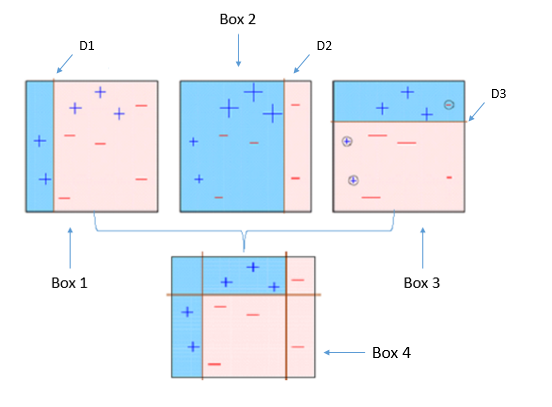

Four classifiers (in 4 boxes), shown above, are trying hard to classify + and - classes as homogeneously as possible. Let's understand this picture well:

- Box 1: The first classifier creates a vertical line (split) at D1. It says anything to the left of D1 is + and anything to the right of D1 is -. However, this classifier misclassifies three + points.

- Box 2: The next classifier says, "Don't worry, I will correct your mistakes." It therefore gives more weight to the three misclassified + points (see the bigger size of +) and creates a vertical line at D2. Again it says that anything to the right of D2 is - and anything to the left is +. Still, it makes mistakes by incorrectly classifying three - points.

- Box 3: The next classifier continues to help. Again, it gives more weight to the three misclassified - points and creates a horizontal line at D3. Still, this classifier fails to classify the points (in circle) correctly.

- Remember that each of these classifiers has a misclassification error associated with them.

- Boxes 1, 2, and 3 are weak classifiers. These classifiers will now be used to create a strong classifier, Box 4.

- Box 4: It is a weighted combination of the weak classifiers. As you can see, it does a good job of classifying all the points correctly.

That's the basic idea behind boosting algorithms. Each new model capitalizes on the misclassification error of the previous model and tries to reduce it.
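The same sequential idea can be sketched in a few lines of base R. Note that this is not the reweighting scheme drawn in the boxes above: it is a toy gradient boosting loop for regression with squared loss, where each weak learner is a one-split decision stump fitted to the residuals of the current ensemble. The data, the helper names fit_stump() and predict_stump(), and the settings (50 rounds, learning rate 0.1) are all assumptions chosen for illustration.

```r
set.seed(1)

# Hypothetical one-dimensional regression data
x <- sort(runif(100, 0, 10))
y <- sin(x) + rnorm(100, sd = 0.2)

# Fit a decision stump (a single split) to the residuals r by least squares
fit_stump <- function(x, r) {
  best <- NULL
  for (s in unique(x)[-1]) {               # candidate split points
    left <- x < s
    pred <- ifelse(left, mean(r[left]), mean(r[!left]))
    sse <- sum((r - pred)^2)
    if (is.null(best) || sse < best$sse) {
      best <- list(split = s, left = mean(r[left]),
                   right = mean(r[!left]), sse = sse)
    }
  }
  best
}

predict_stump <- function(stump, x) {
  ifelse(x < stump$split, stump$left, stump$right)
}

# Boosting loop: each stump is fitted to the errors left by the ensemble so far
n_rounds <- 50
learning_rate <- 0.1
prediction <- rep(mean(y), length(y))
mse <- numeric(n_rounds)
for (m in seq_len(n_rounds)) {
  residuals <- y - prediction              # negative gradient of squared loss
  stump <- fit_stump(x, residuals)
  prediction <- prediction + learning_rate * predict_stump(stump, x)
  mse[m] <- mean((y - prediction)^2)
}

# Training error after rounds 1, 10, and 50
print(round(mse[c(1, 10, 50)], 3))
```

The training error shrinks from round to round because every new stump targets what the previous ones got wrong. Libraries such as XGBoost build on this principle with deeper trees, regularization, and other loss functions.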

XGBoost in R

https://en.wikipedia.org/wiki/XGBoost

https://cran.r-project.org/web/packages/FeatureHashing/vignettes/SentimentAnalysis.html

https://xgboost.readthedocs.io/en/latest/R-package/xgboostPresentation.html

https://github.com/mammadhajili/tweet-sentiment-classification/blob/master/Report.pdf

https://datascienceplus.com/extreme-gradient-boosting-with-r/

https://www.analyticsvidhya.com/blog/2016/01/xgboost-algorithm-easy-steps/

https://www.r-bloggers.com/an-introduction-to-xgboost-r-package/

https://www.kaggle.com/rtatman/machine-learning-with-xgboost-in-r

https://rpubs.com/flyingdisc/practical-machine-learning-xgboost

https://www.youtube.com/watch?v=woVTNwRrFHE

Artificial neural network

https://en.wikipedia.org/wiki/Artificial_neural_network

Some important concepts

Programming languages and Applications for Data Science