Difference between revisions of "Data Science"

Adelo Vieira (talk | contribs) (→Basics types) |

Adelo Vieira (talk | contribs) (→Basic R Tutorial) |


Revision as of 21:11, 11 February 2019


Courses

eu.udacity.com

https://classroom.udacity.com/courses/ud120

www.coursera.org

https://www.coursera.org/learn/machine-learning/home/welcome

Other

https://www.udemy.com/machine-learning-course-with-python/

https://stackoverflow.com/questions/19181999/how-to-create-a-keyboard-shortcut-for-sublimerepl

Anaconda

Anaconda is a free and open source distribution of the Python and R programming languages for data science and machine learning related applications (large-scale data processing, predictive analytics, scientific computing), that aims to simplify package management and deployment. Package versions are managed by the package management system conda. https://en.wikipedia.org/wiki/Anaconda_(Python_distribution)

Installation

https://www.anaconda.com/download/#linux

https://linuxize.com/post/how-to-install-anaconda-on-ubuntu-18-04/

Jupyter Notebook

https://www.datacamp.com/community/tutorials/tutorial-jupyter-notebook

R programming language

The R Project for Statistical Computing: https://www.r-project.org/

R is an open-source programming language that specializes in statistical computing and graphics. Supported by the R Foundation for Statistical Computing, it is widely used for developing statistical software and performing data analysis.

Installing R on Ubuntu 18.04

https://www.digitalocean.com/community/tutorials/how-to-install-r-on-ubuntu-18-04

Because R is a fast-moving project, the latest stable version isn’t always available from Ubuntu’s repositories, so we’ll start by adding the external repository maintained by CRAN.

Let’s first add the relevant GPG key:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys E298A3A825C0D65DFD57CBB651716619E084DAB9

Once we have the trusted key, we can add the repository: (Note that if you’re not using 18.04, you can find the relevant repository from the R Project Ubuntu list, named for each release)

sudo add-apt-repository 'deb https://cloud.r-project.org/bin/linux/ubuntu bionic-cran35/'

sudo apt update

At this point, we're ready to install R with the following command:

sudo apt install r-base

To confirm that R was installed successfully, start its interactive shell. Here we start it as root, so that packages installed later are available to all users:

sudo -i R

Installing RStudio

https://linuxconfig.org/rstudio-on-ubuntu-18-04-bionic-beaver-linux

From the official RStudio download page, download the latest Ubuntu/Debian RStudio *.deb package available. At the time of writing the Ubuntu 18.04 Bionic package is not available yet. If this is still the case download the Ubuntu 16.04 Xenial package instead: https://www.rstudio.com/products/rstudio/download/#download

sudo gdebi rstudio-xenial-1.1.442-amd64.deb

To start RStudio:

rstudio

Installing R Packages from CRAN

Part of R’s strength is its abundance of add-on packages. For demonstration purposes, we'll install txtplot, a library that outputs ASCII graphs, including scatterplots, line plots, density plots, ACF plots, and bar charts. We'll start R as root so that the libraries will be available to all users automatically:

sudo -i R

> install.packages('txtplot')

When the installation is complete, we can load txtplot:

> library('txtplot')

If there are no error messages, the library has successfully loaded. Let’s put it in action now with an example which demonstrates a basic plotting function with axis labels. The example data, supplied by R's datasets package, contains the speed of cars and the distance required to stop based on data from the 1920s:

> txtplot(cars[,1], cars[,2], xlab = 'speed', ylab = 'distance')

(ASCII scatterplot printed by txtplot: distance on the vertical axis, from 0 to 120, against speed on the horizontal axis, from 5 to 25.)

If you would like to learn more about txtplot, use help(txtplot) from within the R interpreter.

Package for connecting to MySQL databases

The package is named RMySQL. To install it, type the following command at the R prompt:

> install.packages('RMySQL')

Package manager

Display packages currently installed in your computer:

> installed.packages()

This produces a long output in which each line describes one package: its version information, the packages it depends on, and so on.

A more user-friendly, although less complete, list of the installed packages can be obtained by issuing:

> library()

The following command can be very useful as it allows you to check whether there are newer versions of your installed packages at CRAN:

> old.packages()

Moreover, you can use the following command to update all your installed packages:

> update.packages()
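As a small illustration of the commands above, the following sketch checks whether a given package is already installed before trying to load it. The helper name is_installed is hypothetical (it is not part of R); it only relies on the fact that installed.packages() returns a matrix whose row names are the package names.

```r
# Hypothetical helper: TRUE if `pkg` appears among the installed packages.
is_installed <- function(pkg) {
  pkg %in% rownames(installed.packages())
}

# "stats" ships with every R installation, so it should be found.
has_stats <- is_installed("stats")

# A made-up name that should not correspond to any real package.
has_fake <- is_installed("definitelyNotAPackage12345")
```

A check like this can be placed before install.packages() to avoid reinstalling a package that is already present.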

Basic R Tutorial

Data types

R works with numerous data types, including:

- Scalars

- Vectors (numerical, character, logical)

- Matrices

- Data frames

- Lists

Basic types

- 4.5 is a decimal value. Such values are called numerics.

- 4 is a whole-number value. R stores it as a numeric unless it is written with the L suffix (4L), which makes it an integer. Integers are also numerics.

- TRUE and FALSE are Boolean values, called logicals.

- Values inside " " or ' ' are text (strings). They are called characters.

- We can check the type of a variable with the class function:

x <- 28

class(x)

y <- "R is Fantastic"

class(y)

z <- TRUE

class(z)

To assign a value to a variable, use <- or =
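The difference between numerics and integers can be checked directly. The following is a minimal sketch; the variable names are chosen only for illustration.

```r
plain_number <- 4     # a bare number is stored as a double
whole_number <- 4L    # the L suffix requests an integer

type_plain <- class(plain_number)       # "numeric"
type_whole <- class(whole_number)       # "integer"
also_numeric <- is.numeric(whole_number) # TRUE: integers count as numeric
```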

Vectors

A vector is a one-dimensional array. We can create a vector from any of the basic data types we saw before. The simplest way to build a vector in R is to use the c function.

vec_num <- c(1, 10, 49)

vec_chr <- c("a", "b", "c")

vec_bool <- c(TRUE, FALSE, TRUE)

We can do arithmetic calculations on vectors:

vect_1 <- c(1, 3, 5)

vect_2 <- c(2, 4, 6)

sum_vect <- vect_1 + vect_2

We can use the index range [1:5] to extract the first five elements of a vector:

slice_vector <- c(1,2,3,4,5,6,7,8,9,10)

slice_vector[1:5]

We can write c(1:10) to create a vector of the values from one to ten:

c(1:10)
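To make the behavior of these vector operations concrete, here is a short sketch with the results noted in comments. Arithmetic on vectors is applied element by element, and a negative index drops elements instead of selecting them. The variable names are illustrative.

```r
vect_1 <- c(1, 3, 5)
vect_2 <- c(2, 4, 6)

sum_vect <- vect_1 + vect_2   # element-wise sum: 3 7 11
doubled  <- vect_1 * 2        # the scalar is applied to every element: 2 6 10

ten <- 1:10
first_five <- ten[1:5]        # 1 2 3 4 5
last_five  <- ten[-(1:5)]     # negative indices drop elements: 6 7 8 9 10
```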

Arithmetic Operators

- +, -, *, /

- Exponentiation: ^ or **

- Modulo: %%

(5+5)/2

Modulo:

28%%6
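The operators listed above can be tried out as follows. This sketch also includes integer division (%/%), which is not in the list above but is the natural companion of modulo.

```r
power_caret <- 2^3       # 8
power_stars <- 2**3      # 8, R treats ** the same as ^
remainder   <- 28 %% 6   # 4, because 28 = 4 * 6 + 4
quotient    <- 28 %/% 6  # 4, integer division
neg_mod     <- -7 %% 3   # 2, the result takes the sign of the divisor
```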

Logical Operators

- < , > , >= , <=

- == : Exactly equal to

- != : Not equal to

- !x : Not x

- x | y : x OR y

- x & y : x AND y

- isTRUE(x) : Test if x is TRUE

To select elements of a variable by a condition, we place a logical statement inside square brackets []. We can combine as many conditional statements as we like, but each one should be wrapped in parentheses. We can follow this structure to create a conditional selection:

variable_name[(conditional_statement)]
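As a sketch of this structure, the following example filters a numeric vector with one condition and with combined conditions. The vector scores and its values are made up for illustration.

```r
scores <- c(12, 45, 7, 88, 60, 33)

# One condition: keep the values greater than 40.
high <- scores[(scores > 40)]                              # 45 88 60

# Two conditions joined with AND.
middle <- scores[(scores > 10) & (scores < 50)]            # 12 45 33

# Two conditions joined with OR: odd values, or values above 80.
odd_or_big <- scores[(scores %% 2 == 1) | (scores > 80)]   # 45 7 88 33
```

The condition itself evaluates to a logical vector (TRUE/FALSE for each element), and the brackets keep the elements where it is TRUE.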

Data Mining with R - Luis Torgo

http://www.dcc.fc.up.pt/~ltorgo/DataMiningWithR/

The book is accompanied by a set of freely available R source files that can be obtained at the book's Web site. These files include all the code used in the case studies. They facilitate the "do-it-yourself" approach followed in this book. All data used in the case studies is available at the book's Web site as well. Moreover, we have created an R package called DMwR that contains several functions used in the book as well as the datasets already in R format. You should install and load this package to follow the code in the book (details on how to do this are given in the first chapter).

Installing the DMwR package

One thing that you surely should do is install the package associated with this book, which will give you access to several functions used throughout the book as well as datasets. To install it you proceed as with any other package:

When I tried to install it on Ubuntu 18.04, the following error was raised:

Configuration failed because libcurl was not found. Try installing: * deb: libcurl4-openssl-dev (Debian, Ubuntu, etc)

After installing the package mentioned in the message (libcurl4-openssl-dev), the installation completed successfully:

> install.packages('DMwR')

Chapter 3 - Predicting Stock Market Returns

We will address some of the difficulties of incorporating data mining tools and techniques into a concrete business problem. The specific domain used to illustrate these problems is that of automatic stock trading systems. We will address the task of building a stock trading system based on prediction models obtained with daily stock quotes data. Several models will be tried with the goal of predicting the future returns of the S&P 500 market index (the Standard & Poor's 500, often abbreviated as the S&P 500 or just the S&P, is an American stock market index based on the market capitalizations of 500 large companies having common stock listed on the NYSE or NASDAQ). These predictions will be used together with a trading strategy to reach a decision regarding the market orders to generate.

This chapter addresses several new data mining issues, among which are

- How to use R to analyze data stored in a database,

- How to handle prediction problems with a time ordering among data observations (also known as time series), and

- An example of the difficulties of translating model predictions into decisions and actions in real-world applications.

The Available Data

In our case study we will concentrate on trading the S&P 500 market index. Daily data concerning the quotes of this security are freely available in many places, for example, the Yahoo finance site (http://finance.yahoo.com)

The data we will use is available through different methods:

- Reading the data from the book R package (DMwR).

- The data (in two alternative formats) is also available at http://www.dcc.fc.up.pt/~ltorgo/DataMiningWithR/datasets3.html

- The first format is a comma separated values (CSV) file that can be read into R in the same way as the data used in Chapter 2.

- The other format is a MySQL database dump file that we can use to create a database with the S&P 500 quotes in MySQL.

- Getting the data directly from the Web (from the Yahoo finance site, for example). If you choose to follow this path, you should remember that you will probably be using a larger dataset than the one used in the analysis carried out in this book.

Whichever source you choose to use, the daily stock quotes data includes information regarding the following properties:

- Date of the stock exchange session

- Open price at the beginning of the session

- Highest price during the session

- Lowest price

- Closing price of the session

- Volume of transactions

- Adjusted close price

It is up to you to decide which alternative you will use to get the data. The remainder of the chapter (i.e., the analysis after reading the data) is independent of the storage schema you decide to use.

Reading the data from the DMwR package

The data is included in the DMwR package. So, after installing the package DMwR, it is enough to issue:

> library(DMwR)

> data(GSPC)

The first statement is only required if you have not issued it before in your R session. The second instruction will load an object, GSPC, of class xts. You can manipulate it as if it were a matrix or a data frame. Try, for example:

> head(GSPC)

Open High Low Close Volume Adjusted

1970-01-02 92.06 93.54 91.79 93.00 8050000 93.00

1970-01-05 93.00 94.25 92.53 93.46 11490000 93.46

1970-01-06 93.46 93.81 92.13 92.82 11460000 92.82

1970-01-07 92.82 93.38 91.93 92.63 10010000 92.63

1970-01-08 92.63 93.47 91.99 92.68 10670000 92.68

1970-01-09 92.68 93.25 91.82 92.40 9380000 92.40

Reading the Data from the CSV File

Reading the Data from a MySQL Database

Getting the Data directly from the Web

Handling Time-Dependent Data in R

The data available for this case study depends on time. This means that each observation of our dataset has a time tag attached to it. This type of data is frequently known as time series data. The main distinguishing feature of this kind of data is that the order between cases matters, due to their attached time tags. Generally speaking, a time series is a set of ordered observations of a variable $Y$:

$$y_1, y_2, \ldots, y_{t-1}, y_t, y_{t+1}, \ldots, y_n$$

where $y_t$ is the value of the series variable $Y$ at time $t$.

The main goal of time series analysis is to obtain a model based on past observations of the variable, $y_1, \ldots, y_{t-1}, y_t$, which allows us to make predictions regarding future observations of the variable, $y_{t+1}, \ldots, y_n$. In the case of our stocks data, we have what is usually known as a multivariate time series, because we measure several variables at the same time tags, namely the Open, High, Low, Close, Volume, and AdjClose.

R has several packages devoted to the analysis of this type of data, and in effect it has special classes of objects that are used to store time-dependent data. Moreover, R has many functions tuned for this type of object, such as special plotting functions.

Among the most flexible R packages for handling time-dependent data are:

- zoo (Zeileis and Grothendieck, 2005) and

- xts (Ryan and Ulrich, 2010).

Both offer similar power, although xts provides a set of extra facilities (e.g., in terms of sub-setting using ISO 8601 time strings) to handle this type of data.

In technical terms the class xts extends the class zoo, which means that any xts object is also a zoo object, and thus we can apply any method designed for zoo objects to xts objects. We will base our analysis in this chapter primarily on xts objects.

To install «zoo» and «xts» in R:

install.packages('zoo')

install.packages('xts')

The following examples illustrate how to create objects of class xts:

> library(xts)

> x1 <- xts(rnorm(100), seq(as.POSIXct("2000-01-01"), len = 100, by = "day"))

> x1[1:5]

> x2 <- xts(rnorm(100), seq(as.POSIXct("2000-01-01 13:00"), len = 100, by = "min"))

> x2[1:4]

> x3 <- xts(rnorm(3), as.Date(c("2005-01-01", "2005-01-10", "2005-01-12")))

> x3

The function xts() receives the time series data in the first argument. This can either be a vector, or a matrix if we have a multivariate time series. In the latter case each column of the matrix is interpreted as a variable being sampled at each time tag (i.e., each row). The time tags are provided in the second argument. This needs to be a set of time tags in any of the existing time classes in R. In the examples above we have used two of the most common classes to represent time information in R: the POSIXct/POSIXlt classes and the Date class. There are many functions associated with these objects for manipulating date information, which you may want to check using the help facilities of R. One such example is the seq() function. We have used this function before to generate sequences of numbers.

As you might observe in the above small examples, the objects may be indexed as if they were “normal” objects without time tags (in this case we see a standard vector sub-setting). Still, we will frequently want to subset these time series objects based on time-related conditions. This can be achieved in several ways with xts objects, as the following small examples try to illustrate:

> x1[as.POSIXct("2000-01-04")]

> x1["2000-01-05"]

> x1["20000105"]

> x1["2000-04"]

> x1["2000-02-26/2000-03-03"]

> x1["/20000103"]
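Because the examples above use random values, the effect of each time-based subset is hard to see. The following sketch repeats the idea on a small series with known values (the numbers 1 to 10 on ten consecutive days), so that each selection can be checked by eye. The object names are illustrative, and the xts package is assumed to be installed.

```r
library(xts)

# Ten consecutive days starting on 2000-01-01, holding the values 1 to 10.
days <- as.Date("2000-01-01") + 0:9
x <- xts(1:10, order.by = days)

# An ISO 8601 range selects every observation between the two dates (inclusive).
jan3_to_5 <- x["2000-01-03/2000-01-05"]

# An open-ended range selects everything up to and including the given date.
up_to_jan3 <- x["/2000-01-03"]

# coredata() strips the time tags and returns only the values.
vals <- as.numeric(coredata(jan3_to_5))
```

With this series, jan3_to_5 should hold the values 3, 4, and 5, and up_to_jan3 the values 1, 2, and 3.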

Multiple time series can be created in a similar fashion as illustrated below:

> mts.vals <- matrix(round(rnorm(25),2),5,5)

> colnames(mts.vals) <- paste('ts',1:5,sep='')

> mts <- xts(mts.vals,as.POSIXct(c('2003-01-01','2003-01-04', '2003-01-05','2003-01-06','2003-02-16')))

> mts

> mts["2003-01",c("ts2","ts5")]

The functions index() and time() can be used to “extract” the time tags information of any xts object, while the coredata() function obtains the data values of the time series:

> index(mts)

> coredata(mts)

Defining the Prediction Tasks

Generally speaking, our goal is to have good forecasts of the future price of the S&P 500 index so that profitable orders can be placed on time.

What to Predict

The trading strategies we will describe in Section 3.5 assume that we obtain a prediction of the tendency of the market in the next few days. Based on this prediction, we will place orders that will be profitable if the tendency is confirmed in the future.

Let us assume that if the prices vary more than $p\%$, we consider this worthwhile in terms of trading (e.g., covering transaction costs). In this context, we want our prediction models to forecast whether this margin is attainable in the next $k$ days.

Please note that within these $k$ days we can actually observe prices both above and below this percentage. For instance, the closing price at time $t+k$ may represent a variation much lower than $p\%$, but it could have been preceded by a period of prices representing variations much higher than $p\%$ within the window $t, \ldots, t+k$.

This means that predicting a particular price for a specific future time $t+k$ might not be the best idea. In effect, what we want is to have a prediction of the overall dynamics of the price in the next $k$ days, and this is not captured by a particular price at a specific time.

We will describe a variable, calculated with the quotes data, that can be seen as an indicator (a value) of the tendency in the next $k$ days. The value of this indicator should be related to the confidence we have that the target margin $p$ will be attainable in the next $k$ days.

At this stage it is important to note that when we mention a variation of $p\%$, we mean above or below the current price. The idea is that positive variations will lead us to buy, while negative variations will trigger sell actions. The indicator we are proposing summarizes the tendency as a single value, positive for upward tendencies and negative for downward price tendencies.

Let the daily average price be approximated by:

$$\bar{P}_i = \frac{C_i + H_i + L_i}{3}$$

where $C_i$, $H_i$, and $L_i$ are the close, high, and low quotes for day $i$, respectively.

Let $V_i$ be the set of $k$ percentage variations of today's close to the following $k$ days' average prices (often called arithmetic returns):

$$V_i = \left\{ \frac{\bar{P}_{i+j} - C_i}{C_i} \right\}_{j=1}^{k}$$

Our indicator variable is the total sum of the variations whose absolute value is above our target margin $p\%$:

$$T_i = \sum_{v} \{ v \in V_i : v > p\% \lor v < -p\% \}$$

The general idea of the variable $T$ is to signal $k$-day periods that have several days with average daily prices clearly above the target variation. High positive values of $T$ mean that there are several average daily prices that are $p\%$ higher than today's close. Such situations are good indications of potential opportunities to issue a buy order, as we have good expectations that the prices will rise. On the other hand, highly negative values of $T$ suggest sell actions, given that the prices will probably decline. Values around zero can be caused by periods with "flat" prices or by conflicting positive and negative variations that cancel each other. The following function implements this simple indicator:

library(quantmod)  # provides HLC(), Delt(), and Next(); loading it also attaches xts

T.ind <- function(quotes, tgt.margin = 0.025, n.days = 10) {

v <- apply(HLC(quotes), 1, mean)

r <- matrix(NA, ncol = n.days, nrow = NROW(quotes))

for (x in 1:n.days) r[, x] <- Next(Delt(v, k = x), x)

x <- apply(r, 1, function(x) sum(x[x > tgt.margin | x < -tgt.margin]))

if (is.xts(quotes))

xts(x, time(quotes))

else x

}

The target variation margin has been set by default to 2.5%
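To see how the indicator behaves on concrete numbers, the following base-R sketch computes it for a single day from plain numeric vectors. It is a simplified illustration and not a replacement for T.ind: the function name t_indicator and the toy prices are hypothetical, the variations are taken relative to that day's close, and it assumes that at least k further days of data exist after day i.

```r
# Hypothetical helper: indicator value for day i, from plain numeric vectors.
t_indicator <- function(close, high, low, i, p = 0.025, k = 10) {
  avg <- (close + high + low) / 3        # daily average prices
  future <- avg[(i + 1):(i + k)]         # average prices of the next k days
  v <- (future - close[i]) / close[i]    # variations relative to day i's close
  sum(v[v > p | v < -p])                 # add up only variations beyond the margin
}

# Toy quotes: high and low sit one unit around the close,
# so each daily average equals the close.
close <- c(100, 101, 104, 106, 99)
high  <- close + 1
low   <- close - 1

t_val <- t_indicator(close, high, low, i = 1, k = 3)
```

For day 1 the three variations are 1%, 4%, and 6%. Only the last two exceed the 2.5% margin, so the indicator is approximately 0.04 + 0.06 = 0.10, a positive value that points to an upward tendency.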

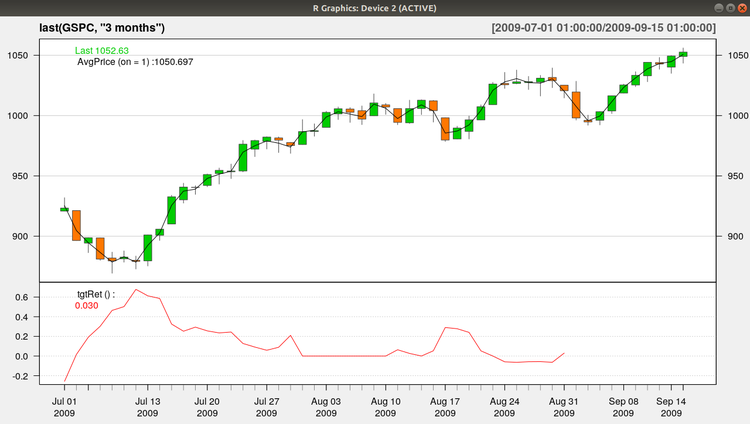

We can get a better idea of the behavior of this indicator in Figure 3.1, which was produced with the following code:

https://plot.ly/r/candlestick-charts/

https://stackoverflow.com/questions/44223485/quantmod-r-candlechart-no-colours

I believe this is the only library needed for this plot:

library(quantmod)

> candleChart(last(GSPC, "3 months"), theme = "white", TA = NULL)

> avgPrice <- function(p) apply(HLC(p), 1, mean)

> addAvgPrice <- newTA(FUN = avgPrice, col = 1, legend = "AvgPrice")

> addT.ind <- newTA(FUN = T.ind, col = "red", legend = "tgtRet")

> addAvgPrice(on = 1)

> addT.ind()

Which Predictors?

We have defined an indicator ($T$) that summarizes the behavior of the price time series in the next $k$ days. Our data mining goal will be to predict this behavior. The main assumption behind trying to forecast the future behavior of financial markets is that it is possible to do so by observing the past behavior of the market.

More precisely, we are assuming that if in the past a certain behavior $p$ was followed by another behavior $f$, and if that causal chain happened frequently, then it is plausible to assume that this will occur again in the future; and thus if we observe $p$ now, we predict that we will observe $f$ next.

We are approximating the future behavior ($f$) by our indicator $T$.

We now have to decide on how we will describe the recent price patterns ($p$ in the description above). Instead of using again a single indicator to describe these recent dynamics, we will use several indicators, trying to capture different properties of the price time series to facilitate the forecasting task.

The simplest type of information we can use to describe the past are the recent observed prices. Informally, that is the type of approach followed in several standard time series modeling approaches. These approaches develop models that describe the relationship between future values of a time series and a window of past q observations of this time series. We will try to enrich our description of the current dynamics of the time series by adding further features to this window of recent prices.

Technical indicators are numeric summaries that reflect some properties of the price time series. Despite their debatable use as tools for deciding when to trade, they can nevertheless provide interesting summaries of the dynamics of a price time series. The number of technical indicators available can be overwhelming. In R we can find a very good sample of them, thanks to the package TTR (Ulrich, 2009).

The indicators usually try to capture some properties of the prices series, such as if they are varying too much, or following some specific trend, etc.

In our approach to this problem, we will not carry out an exhaustive search for the indicators that are most adequate to our task. Still, this is a relevant research question, and not only for this particular application. It is usually known as the feature selection problem, and can informally be defined as the task of finding the most adequate subset of available input variables for a modeling task.

The existing approaches to this problem can usually be cast in two groups: (1) feature filters and (2) feature wrappers.

- Feature filters are independent of the modeling tool that will be used after the feature selection phase. They basically try to use some statistical properties of the features (e.g., correlation) to select the final set of features.

- The wrapper approaches include the modeling tool in the selection process. They carry out an iterative search process where at each step a candidate set of features is tried with the modeling tool and the respective results are recorded. Based on these results, new tentative sets are generated using some search operators, and the process is repeated until some convergence criteria are met that will define the final set.
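A feature filter of the kind described above can be sketched in a few lines of base R. The example below is only an illustration on simulated data: the feature names, the use of absolute Pearson correlation as the score, and the 0.5 threshold are all assumptions made for this sketch, not choices taken from the book.

```r
set.seed(1)
n <- 200
target <- rnorm(n)

# Simulated candidate features (hypothetical names).
feats <- data.frame(
  f1 = target + rnorm(n, sd = 0.1),    # strongly related to the target
  f2 = rnorm(n),                       # pure noise
  f3 = -target + rnorm(n, sd = 0.5)    # negatively related to the target
)

# Filter step: score each feature by its absolute correlation with the target,
# independently of any modeling tool.
scores <- sapply(feats, function(f) abs(cor(f, target)))

# Keep the features whose score exceeds the (arbitrary) threshold.
selected <- names(scores)[scores > 0.5]
```

Here the filter should keep f1 and f3 and discard the noise feature f2. A wrapper approach would instead refit the model for each candidate subset, which is more expensive but takes the modeling tool into account.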

We will use a simple approach to select the features to include in our model. The idea is to illustrate this process with a concrete example and not to find the best possible solution to this problem, which would require other time and computational resources. We will define an initial set of features and then use a technique to estimate the importance of each of these features. Based on these estimates we will select the most relevant features.

Text Mining with R - A TIDY APPROACH, Julia Silge & David Robinson

Chapter 2 - Sentiment Analysis with Tidy Data

Let's address the topic of opinion mining or sentiment analysis. When human readers approach a text, we use our understanding of the emotional intent of words to infer whether a section of text is positive or negative, or perhaps characterized by some other more nuanced emotion like surprise or disgust. We can use the tools of text mining to approach the emotional content of text programmatically, as shown in the next figure:

One way to analyze the sentiment of a text is to consider the text as a combination of its individual words, and the sentiment content of the whole text as the sum of the sentiment content of the individual words. This isn't the only way to approach sentiment analysis, but it is an often-used approach, and an approach that naturally takes advantage of the tidy tool ecosystem.

The tidytext package contains several sentiment lexicons in the sentiments dataset.

install.packages('tidytext')

library(tidytext)

sentiments

# A tibble: 27,314 x 4

word sentiment lexicon score

<chr> <chr> <chr> <int>

1 abacus trust nrc NA

2 abandon fear nrc NA

3 abandon negative nrc NA

4 abandon sadness nrc NA

5 abandoned anger nrc NA

6 abandoned fear nrc NA

7 abandoned negative nrc NA

8 abandoned sadness nrc NA

9 abandonment anger nrc NA

10 abandonment fear nrc NA

# ... with 27,304 more rows

The three general-purpose lexicons are:

- AFINN from Finn Årup Nielsen

- Bing from Bing Liu and collaborators

- NRC from Saif Mohammad and Peter Turney

All three lexicons are based on unigrams, i.e., single words. These lexicons contain many English words and the words are assigned scores for positive/negative sentiment, and also possibly emotions like joy, anger, sadness, and so forth.


- The NRC lexicon categorizes words in a binary fashion ("yes"/"no") into categories of positive, negative, anger, anticipation, disgust, fear, joy, sadness, surprise, and trust.

- The Bing lexicon categorizes words in a binary fashion into positive and negative categories.

- The AFINN lexicon assigns words a score that runs between -5 and 5, with negative scores indicating negative sentiment and positive scores indicating positive sentiment.

All of this information is tabulated in the sentiments dataset, and tidytext provides the function get_sentiments() to get specific sentiment lexicons without the columns that are not used in that lexicon.

get_sentiments("nrc")

# A tibble: 13,901 x 2

word sentiment

<chr> <chr>

1 abacus trust

2 abandon fear

3 abandon negative

4 abandon sadness

5 abandoned anger

6 abandoned fear

7 abandoned negative

8 abandoned sadness

9 abandonment anger

10 abandonment fear

# ... with 13,891 more rows

get_sentiments("bing")

# A tibble: 6,788 x 2

word sentiment

<chr> <chr>

1 2-faced negative

2 2-faces negative

3 a+ positive

4 abnormal negative

5 abolish negative

6 abominable negative

7 abominably negative

8 abominate negative

9 abomination negative

10 abort negative

# ... with 6,778 more rows

get_sentiments("afinn")

# A tibble: 2,476 x 2

word score

<chr> <int>

1 abandon -2

2 abandoned -2

3 abandons -2

4 abducted -2

5 abduction -2

6 abductions -2

7 abhor -3

8 abhorred -3

9 abhorrent -3

10 abhors -3

# ... with 2,466 more rows

How were these sentiment lexicons put together and validated? They were constructed via either crowdsourcing (using, for example, Amazon Mechanical Turk) or by the labor of one of the authors, and were validated using some combination of crowdsourcing again, restaurant or movie reviews, or Twitter data. Given this information, we may hesitate to apply these sentiment lexicons to styles of text dramatically different from what they were validated on, such as narrative fiction from 200 years ago. While it is true that using these sentiment lexicons with, for example, Jane Austen’s novels may give us less accurate results than with tweets sent by a contemporary writer, we still can measure the sentiment content for words that are shared across the lexicon and the text.

There are also some domain-specific sentiment lexicons available, constructed to be used with text from a specific content area. "Example: Mining Financial Articles" on page 81 explores an analysis using a sentiment lexicon specifically for finance.

Dictionary-based methods like the ones we are discussing find the total sentiment of a piece of text by adding up the individual sentiment scores for each word in the text.

Not every English word is in the lexicons because many English words are pretty neutral. It is important to keep in mind that these methods do not take into account qualifiers before a word, such as in “no good” or “not true”; a lexicon-based method like this is based on unigrams only. For many kinds of text (like the narrative examples below), there are no sustained sections of sarcasm or negated text, so this is not an important effect. Also, we can use a tidy text approach to begin to understand what kinds of negation words are important in a given text; see Chapter 9 for an extended example of such an analysis.
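The dictionary-based idea, and its blind spot for negation, can be sketched in base R without any package. The tiny lexicon below is hypothetical (four words with AFINN-style scores chosen for illustration), and the helper name score_text is not part of tidytext.

```r
# Hypothetical mini-lexicon: word -> sentiment score.
lexicon <- c(good = 3, bad = -3, happy = 3, sad = -2)

# Hypothetical helper: total sentiment of a text as the sum of its word scores.
score_text <- function(text) {
  tokens <- strsplit(tolower(text), "[^a-z]+")[[1]]  # split into unigrams
  sum(lexicon[tokens], na.rm = TRUE)                 # words not in the lexicon count as 0
}

review_score  <- score_text("The food was good but the service was bad and sad")
negated_score <- score_text("not good")
```

The first text scores 3 - 3 - 2 = -2. The second scores +3 even though its meaning is negative, because a unigram-based method sees only the word "good" and ignores the qualifier before it.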

RapidMiner

Social Media Sentiment Analysis

https://www.dezyre.com/article/top-10-machine-learning-projects-for-beginners/397

https://elitedatascience.com/machine-learning-projects-for-beginners#social-media

https://en.wikipedia.org/wiki/Sentiment_analysis

https://en.wikipedia.org/wiki/Social_media_mining

Sentiment analysis, also known as opinion mining, opinion extraction, sentiment mining, or subjectivity analysis, is the process of determining whether a piece of online writing (social media mentions, blog posts, news sites, or any other piece) expresses a positive, negative, or neutral attitude. https://brand24.com/blog/twitter-sentiment-analysis/

Motivation

Social media has almost become synonymous with «big data» due to the sheer amount of user-generated content.

Mining this rich data can provide unprecedented ways to keep a pulse on opinions, trends, and public sentiment (Facebook, Twitter, YouTube, WeChat...).

Social media data will become even more relevant for marketing, branding, and business as a whole.

As you can see, data analysis (machine learning) for this kind of research is a tool that will become more and more important in the coming years.

Methodology

Mining Social Media data

The first part of the project will be mining social media data.

- To start the project we first need to choose where we are going to get the data from. Many sources recommend Twitter as the classic entry point for practicing. Here is a tutorial on mining Twitter data with Python: https://marcobonzanini.com/2015/03/02/mining-twitter-data-with-python-part-1/

Twitter Data Mining

https://www.toptal.com/python/twitter-data-mining-using-python

https://marcobonzanini.com/2015/03/02/mining-twitter-data-with-python-part-1/

Why Twitter data?

Twitter is a gold mine of data. Unlike other social platforms, almost every user’s tweets are completely public and pullable. This is a huge plus if you’re trying to get a large amount of data to run analytics on. Twitter data is also pretty specific. Twitter’s API allows you to do complex queries like pulling every tweet about a certain topic within the last twenty minutes, or pull a certain user’s non-retweeted tweets.

A simple application of this could be analyzing how your company is received in the general public. You could collect the last 2,000 tweets that mention your company (or any term you like), and run a sentiment analysis algorithm over it.
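As a minimal sketch of what "running a sentiment analysis algorithm" over collected tweets could look like, the following uses a lexicon-based unigram scorer. The word lists, the sample tweets and the function names are all hypothetical placeholders (a real project would load an established lexicon and real tweets). Note that the last tweet shows the known weakness of unigram methods: negation such as "not good" is ignored.

```python
import re
from collections import Counter

# Hypothetical toy lexicon (assumption); not taken from any real lexicon.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "sad"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic unigrams."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Return (number of positive words) - (number of negative words)."""
    tokens = tokenize(text)
    positives = sum(token in POSITIVE for token in tokens)
    negatives = sum(token in NEGATIVE for token in tokens)
    return positives - negatives


def label(score):
    """Map a numeric score to a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def summarize(tweets):
    """Count how many tweets fall into each sentiment label."""
    return Counter(label(sentiment_score(tweet)) for tweet in tweets)


# Hand-written sample tweets (assumption), standing in for collected data.
tweets = [
    "I love the new release, great job!",
    "Terrible support, I hate waiting.",
    "The office opens at nine.",
    "This is not good.",  # negation is ignored by a unigram lexicon
]
summary = summarize(tweets)
print(summary)
```

The same `summarize` call would work unchanged on a list of 2,000 tweet texts once they have been collected.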

We can also target users that specifically live in a certain location, which is known as spatial data. Another application of this could be to map the areas on the globe where your company has been mentioned the most.

As you can see, Twitter data can be a large door into the insights of the general public, and how they receive a topic. That, combined with the openness and the generous rate limiting of Twitter’s API, can produce powerful results.

Twitter Developer Account:

In order to use Twitter’s API, we have to create a developer account on the Twitter apps site.

Log in or make a Twitter account at https://apps.twitter.com/

As of July 2018, you must apply for a Twitter developer account and be approved before you may create new apps. Once approved, you will be able to create new apps from developer.twitter.com.

Home Twitter Developer Account: https://developer.twitter.com/

Tutorials: https://developer.twitter.com/en/docs/tutorials

Docs: https://developer.twitter.com/en/docs

How to Register a Twitter App: https://iag.me/socialmedia/how-to-create-a-twitter-app-in-8-easy-steps/

Storing the data

Secondly, we will need to store the data

Analyzing the data

The third part of the project will be the analysis of the data. This is where Machine learning will be implemented.

- In this part we first need to decide what we want to analyze. There are many examples:

- Example 1 - Business: companies use opinion mining tools to find out what consumers think of their product, service, brand, marketing campaigns or competitors.

- Example 2 - Public actions: opinion analysis is used to analyze online reactions to social and cultural phenomena, for example, the premiere episode of the Game of Thrones, or Oscars.

- Example 3 - Politics: In politics, sentiment analysis is used to keep track of society’s opinions on the government, politicians, statements, policy changes, or even to predict the results of an election.

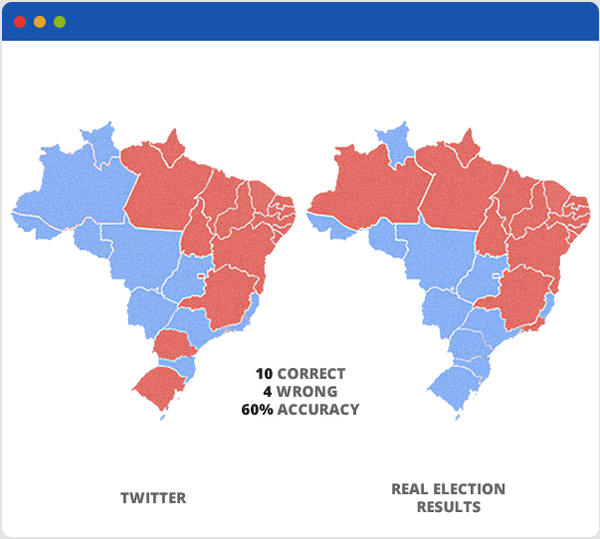

- Here the link to a nice article I found «This article describes the techniques that effectively analyzed Twitter Trend Topics to predict, as a sample test case, regional voting patterns in the 2014 Brazilian presidential election» : https://www.toptal.com/data-science/social-network-data-mining-for-predictive-analysis

- In essence, the author analyzed Twitter data for the days prior to the election and produced this map:

- Example 4 - Health: I found, for example, this topic that I think is really interesting: Using Twitter Data and Sentiment Analysis to Study Diseases Dynamics. In this work, we extract information about diseases from Twitter with spatiotemporal constraints, i.e. considering a specific geographic area during a given period. We show our first results for a monitoring tool that allows the study of disease dynamics https://www.researchgate.net/publication

Plotting the data

If we decide to plot Geographical trends of data, we could present the data using JavaScript like this (Click into the regions and countries): http://perso.sinfronteras.ws/1-archivos/javascript_maps-ammap/continentes.html

Some remarks

- It will be up to us (as a team) to determine what we want to analyze. However, we don't need to decide RIGHT NOW what we are going to analyze. A big part of the methodology can be done without knowing what we are going to analyze. We have enough time to think about a nice case study...

- Another important feature of this work is that we can make it as complex as we want. We could start with something simple, such as determining the most relevant Twitter topic in a geographic area (Dublin, for example) during a given period, and then, following a similar methodology, implement a more complex or relevant analysis, like the one about the study of disease dynamics, or whichever we decide as a team.
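The simple starting case mentioned above (the most relevant topic in a geographic area during a given period) could be sketched as a hashtag count filtered by place and time. The record layout (`text`, `place`, `created_at`) and the sample data are assumptions made for illustration; they are not the format returned by Twitter's API.

```python
from collections import Counter
from datetime import datetime

# Hypothetical, hand-made records standing in for collected tweets.
tweets = [
    {"text": "Big match tonight #rugby #dublin", "place": "Dublin",
     "created_at": datetime(2019, 2, 1, 18, 0)},
    {"text": "Stuck on the quays again #traffic", "place": "Dublin",
     "created_at": datetime(2019, 2, 2, 8, 30)},
    {"text": "What a game! #Rugby", "place": "Dublin",
     "created_at": datetime(2019, 2, 3, 21, 15)},
    {"text": "#rugby fans everywhere", "place": "Cork",
     "created_at": datetime(2019, 2, 2, 20, 0)},
    {"text": "#rugby again", "place": "Dublin",
     "created_at": datetime(2019, 3, 1, 12, 0)},
]


def hashtags(text):
    """Return the lowercased hashtags in a text, without trailing punctuation."""
    words = (word.strip(".,!?").lower() for word in text.split())
    return [word for word in words if word.startswith("#") and len(word) > 1]


def top_topics(records, place, start, end, n=3):
    """Count hashtags for one place within [start, end) and return the top n."""
    counts = Counter()
    for record in records:
        if record["place"] == place and start <= record["created_at"] < end:
            counts.update(hashtags(record["text"]))
    return counts.most_common(n)


# Most frequent hashtag in Dublin during February 2019.
result = top_topics(tweets, "Dublin", datetime(2019, 2, 1), datetime(2019, 3, 1), n=1)
print(result)
```

Counting hashtags is only one possible definition of "most relevant topic"; the same filter could feed a more elaborate topic model later.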

Some references

https://brand24.com/blog/twitter-sentiment-analysis/

https://elitedatascience.com/machine-learning-projects-for-beginners#social-media

https://www.dezyre.com/article/top-10-machine-learning-projects-for-beginners/397

https://www.toptal.com/data-science/social-network-data-mining-for-predictive-analysis

Fake news detection

Automatic Detection of Fake News in Social Media using Contextual Information

Linguistic approach

The linguistic or textual approach to detecting false information involves using techniques that analyze frequency, usage, and patterns in the text. This makes it possible to find similarities with the usage known from particular types of text. Fake news, for example, uses language similar to satire, and tends to be more emotional and simpler than the language of other articles on the same topic.

Support Vector Machines

A support vector machine (SVM) is a classifier that works by separating the input points in an n-dimensional space with a hyperplane. It is based on statistical learning theory[59]. Given labeled training data, the algorithm outputs an optimal hyperplane which classifies new examples. The optimal hyperplane is the divider that minimizes noise sensitivity and maximizes generalization.

Naive Bayes

Naive Bayes is a family of linear classifiers that work by assuming that the features in a dataset are mutually independent[46]. They are known for being easy to implement, robust, fast and accurate, and are widely used for classification tasks such as disease diagnosis and e-mail spam filtering.
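To make the independence assumption concrete, here is a minimal sketch of a multinomial Naive Bayes text classifier with Laplace (add-one) smoothing, written in plain Python. The class name, the whitespace tokenizer and the four training sentences are hypothetical; this illustrates the idea and is not the implementation from the cited work.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Very simple tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


class NaiveBayes:
    """Multinomial Naive Bayes with add-one smoothing (illustrative sketch)."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)        # documents per class
        self.word_counts = defaultdict(Counter)    # word frequencies per class
        for text, lab in zip(texts, labels):
            self.word_counts[lab].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_logp = None, -math.inf
        for lab, n_docs in self.class_counts.items():
            # Log prior of the class.
            logp = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[lab].values())
            for word in tokenize(text):
                if word not in self.vocab:
                    continue  # skip words never seen during training
                # Independence assumption: per-word log likelihoods simply add up.
                count = self.word_counts[lab][word]
                logp += math.log((count + 1) / (total_words + len(self.vocab)))
            if logp > best_logp:
                best_label, best_logp = lab, logp
        return best_label


# Toy spam-filtering data (assumption), echoing the e-mail use case above.
train_texts = ["win money now", "free money offer",
               "meeting at noon", "project meeting notes"]
train_labels = ["spam", "spam", "ham", "ham"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("free money"))
print(model.predict("meeting notes"))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied.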

Term frequency inverse document frequency

Term frequency-inverse document frequency(TF-IDF) is a weight value often used in information retrieval and gives a statistical measure to evaluate the importance of a word in a document collection or a corpus. Basically, the importance of a word increases proportionally with how many times it appears in a document, but is offset by the frequency of the word in the collection or corpus. Thus a word that appears all the time will have a low impact score, while other less used words will have a greater value associated with them[28]
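The weighting described above can be sketched in a few lines of Python. This uses one common variant (term frequency normalized by document length, and idf = ln(N / df)); other variants exist, and the three sample documents are made up for illustration.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {word: weight} dictionary per document.

    tf  = occurrences of the word in the document / document length
    idf = ln(number of documents / number of documents containing the word)
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents each word appears.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    weights = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        weights.append({
            word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word, count in term_freq.items()
        })
    return weights


# Hypothetical mini-corpus (assumption).
docs = [
    "the match was great",
    "the team lost the match",
    "the transfer rumor is false",
]
weights = tf_idf(docs)

# "the" appears in every document, so its weight is 0;
# "rumor" appears in only one document, so it gets a higher weight.
print(weights[2]["the"], weights[2]["rumor"])
```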

N-grams

Sentiment analysis

Contextual approach

Contextual approaches incorporate most of the information that is not text. This includes data about users, such as comments, likes, re-tweets, shares and so on. It can also be information regarding the origin, both who created it and where it was first published. This kind of information lends itself to a more predictive approach than the linguistic one, where you can be more deterministic. The contextual clues give a good indication of how the information is being used, and assumptions can be made based on this.

This approach relies on structured data to be able to make the assumptions, and because of that the usage area is for now limited to Social Media, because of the amount of information that is made public there. You have access to publishers, reactions, origin, shares and even age of the posts.

In addition to this, contextual systems are most often used to increase the quality of existing information and to augment linguistic systems by giving them more information to work on, such as reputation, trust metrics or other indicators of whether the information is statistically leaning towards being fake or not.

Below a series of contextual methods are presented. They are a collection of state of the art methods and old, proven methods.

Logistic regression

Crowdsourcing algorithms

Network analysis

Trust Networks

Trust Metrics

Content-driven reputation system

Knowledge Graphs

Fake News Detection on Social Media - A Data Mining Perspective

https://www.kdd.org/exploration_files/19-1-Article2.pdf

Fake news detection

In the previous section, we introduced the conceptual characterization of traditional fake news and fake news in social media. Based on this characterization, we further explore the problem definition and proposed approaches for fake news detection.

Problem Definition

In this subsection, we present the details of mathematical formulation of fake news detection on social media. Specifically, we will introduce the definition of key components of fake news and then present the formal definition of fake news detection. The basic notations are defined below,

- Let a refer to a News Article. It consists of two major components: Publisher and Content. Publisher «Pa» includes a set of profile features to describe the original author, such as name, domain, age, among other attributes. Content «Ca» consists of a set of attributes that represent the news article and includes headline, text, image, etc.

- We also define Social News Engagements as a set of tuples «E={e_it}» to represent the process of how news spread over time among n users «U={u1, u2, .., un}» and their corresponding posts P={p1, p2, ..., pn} on social media regarding news article «a». Each engagement e_it={ui, pi, t} represents that a user «ui» spreads news article «a» using «pi» at time t. Note that we set t=Null if the article «a» does not have any engagement yet and thus «ui» represents the publisher.

Definition 2 (Fake News Detection) Given the social news engagements E among n users for news article «a», the task of fake news detection is to predict whether the news article «a» is a fake news piece or not, i.e., F : E → {0,1} such that,

F(a) = 1 if «a» is a piece of fake news, and F(a) = 0 otherwise,

where F is the prediction function we want to learn. Note that we define fake news detection as a binary classification problem for the following reason: fake news is essentially a distortion bias on information manipulated by the publisher. According to previous research about media bias theory [26], distortion bias is usually modeled as a binary classification problem.
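The notation above can be mirrored in code as a small set of data structures plus a prediction function returning 0 or 1. This is only a sketch: the field names, the keyword list and the decision rule inside `predict_fake` are hypothetical stand-ins chosen so the example runs, not the method proposed in the paper, where F is learned from data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Article:
    """News article a, with publisher features P_a and content C_a."""
    publisher: dict   # e.g. name, domain, age
    content: dict     # e.g. headline, text, image


@dataclass
class Engagement:
    """One tuple e_it = {u_i, p_i, t}."""
    user: str               # u_i
    post: str               # p_i
    time: Optional[float]   # t; None if the article has no engagement yet


# Hypothetical keywords signalling skeptical reactions (assumption).
SKEPTICAL_WORDS = ("fake", "hoax", "false")


def predict_fake(engagements: List[Engagement]) -> int:
    """Placeholder for F : E -> {0, 1}.

    Stand-in rule (assumption): return 1 when at least half of the posts
    contain a skeptical keyword, otherwise 0.
    """
    if not engagements:
        return 0
    skeptical = sum(
        any(word in e.post.lower() for word in SKEPTICAL_WORDS)
        for e in engagements
    )
    return 1 if skeptical / len(engagements) >= 0.5 else 0


article = Article(
    publisher={"name": "example-publisher", "domain": "example.org"},
    content={"headline": "Star player signs secret deal", "text": "..."},
)
sample = [
    Engagement(user="u1", post="This is a hoax", time=1.0),
    Engagement(user="u2", post="Fake!!", time=2.0),
    Engagement(user="u3", post="Interesting read", time=3.0),
]
prediction = predict_fake(sample)
print(prediction)
```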

Next, we propose a general data mining framework for fake news detection which includes two phases: (i) feature extraction and (ii) model construction. The feature extraction phase aims to represent news content and related auxiliary information in a formal mathematical structure, and model construction phase further builds machine learning models to better differentiate fake news and real news based on the feature representations.

Feature Extraction

Fake news detection on traditional news media mainly relies on news content, while in social media, extra social context auxiliary information can be used as additional information to help detect fake news. Thus, we will present the details of how to extract and represent useful features from news content and social context.

News Content Features

News content features c_a describe the meta information related to a piece of news. A list of representative news content attributes is given below:

- Source: Author or publisher of the news article

- Headline: Short title text that aims to catch the attention of readers and describes the main topic of the article

- Body Text: Main text that elaborates the details of the news story; there is usually a major claim that is specifically highlighted and that shapes the angle of the publisher

- Image/Video: Part of the body content of a news article that provides visual cues to frame the story

Based on these raw content attributes, different kinds of feature representations can be built to extract discriminative characteristics of fake news. Typically, the news content we are looking at will mostly be linguistic-based and visual-based, described in more detail below.

- Linguistic-based: Since fake news pieces are intentionally created for financial or political gain rather than to report objective claims, they often contain opinionated and inflammatory language, crafted as “clickbait” (i.e., to entice users to click on the link to read the full article) or to incite confusion [13]. Thus, it is reasonable to exploit linguistic features that capture the different writing styles and sensational headlines to detect fake news.

- Visual-based: Visual cues have been shown to be an important manipulator for fake news propaganda. As we have characterized, fake news exploits the individual vulnerabilities of people and thus often relies on sensational or even fake images to provoke anger or other emotional response of consumers. Visual-based features are extracted from visual elements (e.g. images and videos) to capture the different characteristics for fake news.

Social Context Features

In addition to features related directly to the content of the news articles, additional social context features can also be derived from the user-driven social engagements of news consumption on social media platforms. Social engagements represent the news proliferation process over time, which provides useful auxiliary information to infer the veracity of news articles. Note that few papers exist in the literature that detect fake news using social context features. However, because we believe this is a critical aspect of successful fake news detection, we introduce a set of common features utilized in similar research areas, such as rumor veracity classification on social media. Generally, there are three major aspects of the social media context that we want to represent: users, generated posts, and networks. Below, we investigate how we can extract and represent social context features from these three aspects to support fake news detection.

- User-based: As we mentioned in Section 2.3, fake news pieces are likely to be created and spread by non-human accounts, such as social bots or cyborgs. Thus, capturing users’ profiles and characteristics by user-based features can provide useful information for fake news detection.

- Post-based: People express their emotions or opinions towards fake news through social media posts, such as skeptical opinions, sensational reactions, etc. Thus, it is reasonable to extract post-based features to help find potential fake news via reactions from the general public as expressed in posts.

- Network-based: Users form different networks on social media in terms of interests, topics, and relations. As mentioned before, fake news dissemination processes tend to form an echo chamber cycle, highlighting the value of extracting network-based features to represent these types of network patterns for fake news detection. Network-based features are extracted via constructing specific networks among the users who published related social media posts.

Model Construction

In the previous section, we introduced features extracted from different sources, i.e., news content and social context, for fake news detection. In this section, we discuss the details of the model construction process for several existing approaches. Specifically we categorize existing methods based on their main input sources as: News Content Models and Social Context Models.

News Content Models

In this subsection, we focus on news content models, which mainly rely on news content features and existing factual sources to classify fake news. Specifically, existing approaches can be categorized as Knowledge-based and Style-based.

Knowledge-based: Knowledge-based approaches aim to use external sources to fact-check proposed claims in news content.

Existing fact-checking approaches can be categorized as expert-oriented, crowdsourcing-oriented, and computational-oriented:

Expert-oriented:

Crowdsourcing-oriented:

Computational-oriented:

Style-based: Style-based approaches try to detect fake news by capturing the manipulators in the writing style of news content. There are mainly two typical categories of style-based methods: Deception-oriented and Objectivity-oriented:

Deception-oriented:

Objectivity-oriented:

Social Context Models

Social context models include relevant user social engagements in the analysis, capturing this auxiliary information from a variety of perspectives. We can classify existing approaches for social context modeling into two categories: Stance-based and Propagation-based.

Note that very few existing fake news detection approaches have utilized social context models. Thus, we also introduce similar methods for rumor detection using social media, which have potential application for fake news detection.

Stance-based:

Propagation-based:

Paper: Fake News Detection using Machine Learning

I trained fake news detection AI with >95% accuracy and almost went crazy

Project proposal - Establishing an authenticity of sports news by Machine Learning Models

Introduction

In recent years, with the growth of Web 2.0, it has become easy for almost anyone to publish information on the Web without any verification of its authenticity, resulting in the emergence of viral fake news. Using raw public data from Twitter, we are going to build an application, based on Machine Learning, that is able to assess whether a news item is authentic.

Key words

Authenticity, Fake news, machine learning model, Data Mining, Text Mining.

Team and Roles

Definitions

Authenticity and Intent

There are two key features of this definition: authenticity and intent. First, fake news includes false information that can be verified as such. Second, fake news is created with dishonest intention to mislead consumers (Shu et al., 2016).

Sport News

Sports news or sports journalism is a form of writing that reports on sporting topics and competitions (Wikipedia, 2018).

Fake news

1- According to the New York Times Opinion video series «Operation Infektion»

The term fake news derives from disinformation, one of the three weapons of the Ideological Subversion or Active Measures (активные мероприятия) department. It was created by Russian (KGB) spies during the Cold War to attack the United States. The main goal of fake news is to change every American's perception of reality. Disinformation focuses on forgeries, agents of influence and planting false stories. The project is long-term and still ongoing. Operation Infektion started in July 1983 in New Delhi, India, when a remarkable story appeared in a newspaper: “AIDS may invade India. Mystery disease caused by US experiments.” According to the story, the disease was created as a biological weapon (Figure 1). As a result, by September 12th, 1985 the story had spread all over Africa. On March 30th, 1987 the same story was on national television in the United States. Disinformation is not propaganda:

- Propaganda tries to convince us to believe in something

- Disinformation is defined as deliberately distorted information that is secretly leaked into the communication process in order to deceive and manipulate.

2- According to Shu et al. (2016)

Definition 1 (Fake News) Fake news is a news article that is intentionally and verifiably false.

Definition 2 (Fake News Detection) Given the social news engagements E among n users for news article a, the task of fake news detection is to predict whether the news article a is a fake news piece or not.

Machine Learning model

1. According to Professor Tom Mitchell, Carnegie Mellon University: "A computer program is said to learn from experience 'E', with respect to some class of tasks 'T' and performance measure 'P' if its performance at tasks in 'T' as measured by 'P' improves with experience 'E'."

2. Vast amounts of data are being generated in many fields, and the statisticians' job is to make sense of it all: to extract important patterns and trends, and to understand “what the data says”. We call this learning from data. (Hastie, 2016).

3. Pattern recognition has its origins in engineering, whereas machine learning grew out of computer science. However, these activities can be viewed as two facets of the same field…(Bishop, 2016)

4. Machine learning (ML) can help you use historical data to make better business decisions. ML algorithms discover patterns in data, and construct mathematical models using these discoveries. Then you can use the models to make predictions on future data. (Amazon, 2018).

5. One of the most interesting features of machine learning is that it lies on the boundary of several different academic disciplines, principally computer science, statistics, mathematics, and engineering. …machine learning is usually studied as part of artificial intelligence, which puts it firmly into computer science …understanding why these algorithms work requires a certain amount of statistical and mathematical sophistication that is often missing from computer science undergraduates. (Marsland, 2009).

Data Mining

1. According to Encyclopedia Britannica (2018): Data mining, also called knowledge discovery in databases, is, in computer science, the process of discovering interesting and useful patterns and relationships in large volumes of data.

2. Data mining (DM) is also known as knowledge discovery in databases (Fayyad and Uthurusamy, 1999) and information archaeology (Brachman et al, 1993).

Text Mining

1. According to Marti Hearst (1999): Text data mining (TDM) has the peculiar distinction of having a name and a fair amount of hype but as yet almost no practitioners. I suspect this has happened because people assume TDM is a natural extension of the slightly less nascent field of data mining (DM).

R Language

1. According to the GNU Project (2018): R is a language and environment for statistical computing and graphics. It is a GNU project which is similar to the S language and environment which was developed at Bell Laboratories (formerly AT&T, now Lucent Technologies) by John Chambers and colleagues. R can be considered as a different implementation of S. There are some important differences, but much code written for S runs unaltered under R.

Problem area - Innovation area

Andrea

Solution to Problem - Innovation Solution

Machine learning model for fake news detection

In short, this project seeks to build machine learning models that make it possible to identify whether a sports news article published on the Internet is fake according to the terms defined above.

In order to do so, we first need to recognize and extract features from the news article that can be related to the veracity of its content. There are many different techniques that have been used in similar projects to build models for fake news detection.

As mentioned by Granskogen (2018), this kind of analysis can be done through two approaches:

- The Linguistic approach: It is based only on the analysis of the content of the text itself. «This approach involves using techniques that analyses frequency, usage, and patterns in the text.» [Granskogen (2018)]

- This approach is reasonable because fake news articles are usually intentionally created using inflammatory language and sensational headlines for specific purposes, i.e., to tempt readers to click on a link or to incite confusion. So, the linguistic analysis seeks to capture the writing styles in fake news articles. [Shu et al] [Chen et al (2015)]

- The Contextual approach: «Incorporate most of the information that is not text. This includes data about users, such as comments, likes, re-tweets, shares and so on. It can also be information regarding the origin, both as who created it and where it was first published» [Granskogen (2018)]

Some of the most common techniques that have been used for fake news detection are:

- Linguistic approach:

- Sentiment analysis

- Naive Bayes

- Support Vector Machines

- Contextual approach:

- Network analysis

- Logistic regression

- Trust Networks

To build the Machine Learning Model we will need a dataset consisting of True and False news articles (Table 1). This data (called the training data) will be analyzed using the techniques mentioned above (sentiment analysis, Naive Bayes, Network Analysis, Logistic regression, etc).

It is very important to note that there is no single way to detect fake news. This is a recent problem that has only been studied in the last few years. So, to generate an accurate Machine Learning Model, we will have to perform a lot of tests using different techniques and approaches. That is why, at this point of the project, it is not possible to determine the exact methodology that will be used for building the Machine Learning Model.

In this project proposal we have decided to reduce the domain for fake news detection to «Sport news». We have taken this decision because most of the research we have reviewed confirms that Machine Learning Models for fake news detection have shown good results in closed domains. [Conroy et al (2015)]. However, more recent research indicates that a contextual approach should improve accuracy in open domains. [Granskogen (2018)]

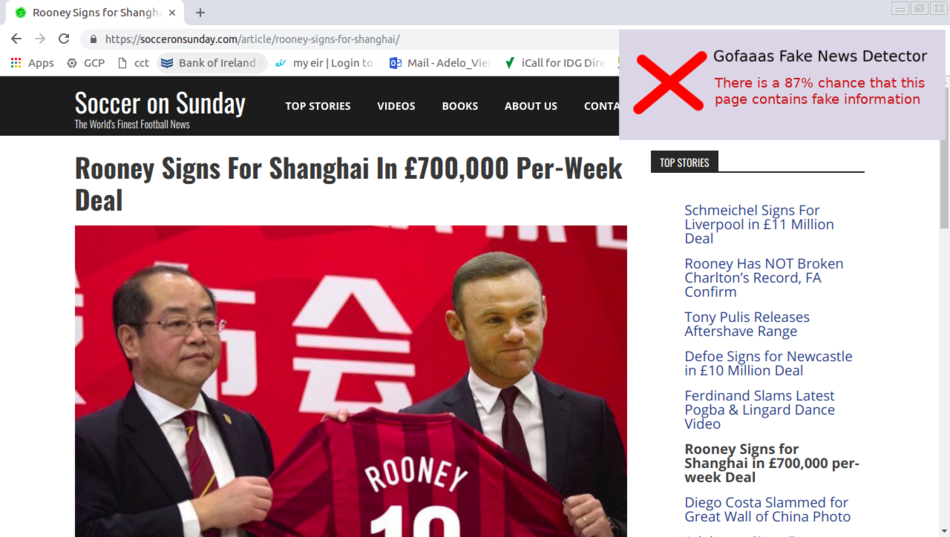

Prototype of an application to integrate the Machine Learning Model to a browser or App used to access the news article

The second part of this project consists of creating an application that provides a practical way of detecting fake news at the moment when the reader accesses them.

The idea is to develop an application that works as an extension of the internet browser or the application that is used to access the news (Twitter App for example). In this sense, when the reader accesses the news, the application (using the Machine Learning Model) must check the news article and give feedback on its authenticity.

We want the application to display a kind of Pop Up Notification when the reader is accessing the news article. This notification must show a measure of the veracity of the news article.

Project Goal

Andrea, Adelo

Project Objectives

Adelo

Mining the data

Our first objective is to mine the data that will be used to build the Machine Learning Model. We have decided to start our project using data from Twitter.

Why Twitter data?:

- Twitter is one of the most important social networks. With millions of active users, Twitter provides a huge volume of data.

- «Unlike other social platforms, almost every user's tweets are completely public and pullable.» [Sistilli (2015)] [1]

- Another good reason to use Twitter platform is that «Twitter's API allows you to do complex queries like pulling every tweet about a certain topic» [Sistilli (2015)] [2].

- Mining data from a social network will allow us to use contextual approach techniques for fake news detection.

- We are going to use Python code that interacts with Twitter's API to mine the data.

- The data will be stored in a MySQL database (Table 1).

| News article | Data about users | Comments | Likes | Shares | T/F |
|---|---|---|---|---|---|
| text of the news 1 | data | data | data | data | T |
| text of the news 2 | data | data | data | data | T |
| text of the news 3 | data | data | data | data | F |
| text of the news 4 | data | data | data | data | T |
| text of the news 5 | data | data | data | data | F |
| ... | ... | ... | ... | ... | ... |
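The layout of Table 1 could be prototyped as a database table along the following lines. This is a sketch under stated assumptions: it uses Python's built-in `sqlite3` module as a stand-in so that it runs without a database server (the project itself plans to use MySQL), and the table name, column names, column types and the numeric values in the sample rows are all hypothetical.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for the planned MySQL database.
conn = sqlite3.connect(":memory:")

# Hypothetical schema mirroring the columns of Table 1.
conn.execute("""
    CREATE TABLE news (
        id           INTEGER PRIMARY KEY,
        article_text TEXT NOT NULL,       -- "News article"
        user_data    TEXT,                -- "Data about users"
        comments     INTEGER,             -- "Comments"
        likes        INTEGER,             -- "Likes"
        shares       INTEGER,             -- "Shares"
        is_true      INTEGER NOT NULL CHECK (is_true IN (0, 1))  -- "T/F"
    )
""")

# Made-up placeholder rows (assumption); real rows would come from the mined data.
rows = [
    ("text of the news 1", "data", 12, 340, 25, 1),
    ("text of the news 2", "data", 3, 57, 4, 1),
    ("text of the news 3", "data", 45, 900, 310, 0),
]
conn.executemany(
    "INSERT INTO news (article_text, user_data, comments, likes, shares, is_true) "
    "VALUES (?, ?, ?, ?, ?, ?)",
    rows,
)
conn.commit()

# Count how many labeled true (1) and false (0) articles are stored.
counts = dict(conn.execute(
    "SELECT is_true, COUNT(*) FROM news GROUP BY is_true"
).fetchall())
print(counts)
```

Storing the T/F label as an integer keeps the table directly usable as training data for a binary classifier.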

Develop a mathematical formulation for fake news detection

Build the Machine Learning model for fake news detection

- The first step will be to perform a linguistic-based approach using sentiment analysis.

- Then, we will test other linguistic-based algorithms.

- Finally, we will extend the analysis using contextual techniques.

- We are going to use the R language to test and implement the different techniques that will allow us to extract features from the data and build the model.

Develop a prototype of an application that integrates the Machine Learning Model for fake news detection into a browser or App used to access the news article

Resource Requirements

Ahsan

Project Scope

Ahsan

Summary Schedule?

Milestone table Part I

Milestone table Part II

Risk Analysis

Farooq

Conclusion

Farooq