Data Science

Author: Adelo Vieira


Revision as of 12:46, 1 November 2018

Social Media Sentiment Analysis

https://www.dezyre.com/article/top-10-machine-learning-projects-for-beginners/397

https://elitedatascience.com/machine-learning-projects-for-beginners#social-media

https://en.wikipedia.org/wiki/Sentiment_analysis

https://en.wikipedia.org/wiki/Social_media_mining

Remote development

Eclipse - Connect to a remote file system

https://us.informatiweb.net/tutorials/it/6-web/148--eclipse-connect-to-a-remote-file-system.html

Mount a remote filesystem on your local machine

https://stackoverflow.com/questions/32747819/remote-java-development-using-intellij-or-eclipse

https://serverfault.com/questions/306796/sshfs-problem-when-losing-connection

https://askubuntu.com/questions/358906/sshfs-messes-up-everything-if-i-lose-connection

https://askubuntu.com/questions/716612/sshfs-auto-reconnect

sshfs root@sinfronteras.ws: /home/adelo/1-system/3-cloud

sshfs -o reconnect,ServerAliveInterval=5,ServerAliveCountMax=3 root@sinfronteras.ws: /home/adelo/1-system/3-cloud

sshfs -o allow_other root@sinfronteras.ws: /home/adelo/1-system/3-cloud

Faster ways to mount a remote file system than sshfs:

https://superuser.com/questions/344255/faster-way-to-mount-a-remote-file-system-than-sshfs

Git and GitHub

Installing Git

https://www.digitalocean.com/community/tutorials/how-to-install-git-on-ubuntu-18-04

sudo apt install git

Configuring GitHub

https://www.howtoforge.com/tutorial/install-git-and-github-on-ubuntu/

We need to set up the configuration details of the GitHub user. To do this, use the following two commands, replacing "user_name" with your GitHub username and "email_id" with the email address you used to create your GitHub account.

git config --global user.name "user_name"

git config --global user.email "email_id"

For example:

git config --global user.name "adeloaleman"

git config --global user.email "adeloaleman@gmail.com"

Creating a local repository

git init /home/adelo/1-system/1-disco_local/1-mis_archivos/1-pe/1-ciencia/1-computacion/1-programacion/GitHubLocalRepository

Creating a README file to describe the repository

Now create a README file and enter some text like "this is a git setup on Linux". The README file is generally used to describe what the repository contains or what the project is all about. Example:

vi README

This is Adelo's git repo

Adding repository files to an index

This is an important step. Here we add to an index everything that needs to be pushed to the remote repository. These may be text files or programs that you are adding to the repository for the first time, or files that already exist but have changed (a newer, updated version).

Here we already have the README file. So, let's create another file which contains a simple C program and call it sample.c. The contents of it will be:

vi sample.c

#include <stdio.h>

int main()
{
    printf("hello world");
    return 0;
}

Now that we have two files, README and sample.c, add them to the index with the following two commands:

git add README

git add sample.c

Note that the "git add" command can be used to add any number of files and folders to the index. By index, I mean a buffer-like space (also known as the staging area) that stores the files/folders that have to be added to the Git repository.

Committing changes made to the index

Once all the files are added, we can commit them. This means that we have finalized what additions and/or changes have to be made, and they are now ready to be uploaded to our repository. Use the command:

git commit -m "some_message"

"some_message" in the above command can be any simple message like "my first commit" or "edit in readme", etc.

Creating a repository on GitHub

Create a repository on GitHub. Note that the name of the repository should be the same as that of the repository on the local system; in this case, "GitHubLocalRepository". To do this, log in to your account on https://github.com. Then click the "plus (+)" symbol at the top right corner of the page and select "create new repository". Fill in the details and click the "create repository" button.

Once this is created, we can push the contents of the local repository onto the GitHub repository in your profile. Connect to the repository on GitHub using the command:

git remote add origin https://github.com/adeloaleman/GitHubLocalRepository

Pushing files in local repository to GitHub repository

The final step is to push the local repository contents into the remote host repository (GitHub), by using the command:

git push origin master

GUI Clients

Git comes with built-in GUI tools for committing (git-gui) and browsing (gitk), but there are several third-party tools for users looking for platform-specific experience.

It seems that the official GitHub Desktop application is not available for Ubuntu. However, other similar applications are available for Linux: https://git-scm.com/download/gui/linux

For Linux, there is, for example: https://www.gitkraken.com/

Anaconda

Anaconda is a free and open source distribution of the Python and R programming languages for data science and machine learning related applications (large-scale data processing, predictive analytics, scientific computing), that aims to simplify package management and deployment. Package versions are managed by the package management system conda. https://en.wikipedia.org/wiki/Anaconda_(Python_distribution)

Installation

https://www.anaconda.com/download/#linux

https://linuxize.com/post/how-to-install-anaconda-on-ubuntu-18-04/

Jupyter Notebook

https://www.datacamp.com/community/tutorials/tutorial-jupyter-notebook

Courses

eu.udacity.com

https://classroom.udacity.com/courses/ud120

www.coursera.org

https://www.coursera.org/learn/machine-learning/home/welcome

Other

https://www.udemy.com/machine-learning-course-with-python/

https://stackoverflow.com/questions/19181999/how-to-create-a-keyboard-shortcut-for-sublimerepl

R programming language

The R Project for Statistical Computing: https://www.r-project.org/

R is an open-source programming language that specializes in statistical computing and graphics. Supported by the R Foundation for Statistical Computing, it is widely used for developing statistical software and performing data analysis.

Installing R on Ubuntu 18.04

https://www.digitalocean.com/community/tutorials/how-to-install-r-on-ubuntu-18-04

Because R is a fast-moving project, the latest stable version isn’t always available from Ubuntu’s repositories, so we’ll start by adding the external repository maintained by CRAN.

Let’s first add the relevant GPG key:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys E298A3A825C0D65DFD57CBB651716619E084DAB9

Once we have the trusted key, we can add the repository: (Note that if you’re not using 18.04, you can find the relevant repository from the R Project Ubuntu list, named for each release)

sudo add-apt-repository 'deb https://cloud.r-project.org/bin/linux/ubuntu bionic-cran35/'

sudo apt update

At this point, we're ready to install R with the following command:

sudo apt install r-base

To confirm that R has been installed successfully, start its interactive shell (as a regular user with R, or as root with sudo -i R):

R

sudo -i R

Installing R Packages from CRAN

Part of R’s strength is its abundance of available add-on packages. For demonstration purposes, we'll install txtplot, a library that outputs ASCII graphs, including scatterplots, line plots, density plots, acf plots and bar charts. We'll start R as root so that the libraries will be available to all users automatically:

sudo -i R

> install.packages('txtplot')

When the installation is complete, we can load txtplot:

> library('txtplot')

If there are no error messages, the library has successfully loaded. Let’s put it in action now with an example which demonstrates a basic plotting function with axis labels. The example data, supplied by R's datasets package, contains the speed of cars and the distance required to stop based on data from the 1920s:

> txtplot(cars[,1], cars[,2], xlab = 'speed', ylab = 'distance')

[ASCII scatter plot printed by txtplot: distance (y axis, 0 to 120) against speed (x axis, 5 to 25)]

If you are interested in learning more about txtplot, use help(txtplot) from within the R interpreter.

Package that provides functions to connect to MySQL databases

The package is called RMySQL. To install it, type the following command at the R prompt:

> install.packages('RMySQL')

Package manager

Display the packages currently installed on your computer:

> installed.packages()

This produces a long output, with each line containing a package, its version information, the packages it depends on, and so on.

A more user-friendly, although less complete, list of the installed packages can be obtained by issuing:

> library()

The following command can be very useful as it allows you to check whether there are newer versions of your installed packages at CRAN:

> old.packages()

Moreover, you can use the following command to update all your installed packages:

> update.packages()

RapidMiner

RapidMiner is a data science software platform developed by the company of the same name that provides an integrated environment for data preparation, machine learning, deep learning, text mining, and predictive analytics. It is used for business and commercial applications as well as for research, education, training, rapid prototyping, and application development and supports all steps of the machine learning process including data preparation, results visualization, model validation and optimization. RapidMiner is developed on an open core model. The RapidMiner Studio Free Edition, which is limited to 1 logical processor and 10,000 data rows is available under the AGPL license. Commercial pricing starts at $2,500 and is available from the developer.

Installing RapidMiner

Download the package and follow the instructions on the official site: https://docs.rapidminer.com/latest/studio/installation/

./RapidMiner-Studio.sh

We will need to create a RapidMiner account.

Description of the User Interface

The views

Design view

Work areas for specific tasks...

Process panel

It is used to design any process, such as:

- Data loading

- Forecasting

- ...

- To get started with a very simple process we can place an operator into the process panel:

- For example, we can go to Data Access Operators and place (Drag and Drop) a «Retrieve» operator into the process panel.

- After doing this and selecting (clicking) the operator in the Process panel, the Parameters panel changes and lets us select, through the folder icon, the file we want to load. For example, we can select the «Titanic data set», which comes pre-loaded in RapidMiner Studio.

- Then, in order to run the process, we need to connect the port of the «Retrieve» operator with the Result port.

- Then, to run the process, click the Run button (>) (or press F11). RapidMiner will run the process and automatically display the Results View, where the data set is displayed as a table by default.

Ports:

Results view

Work areas for specific tasks...

Auto Model view

Operators

Repository

- Through the repository panel you can access your data and processes.

- When starting a project, it is recommended to create a new repository with two sub-folders: data and processes.

Parameters

Global Search

Adding extensions

- To add extensions go to: Extensions > Marketplace:

- Top Downloads: some of the most popular extensions.

- It is recommended to install the following:

- Text Processing

- Web Mining

- Python/R integration

- Anomaly Detection

- Series extension

- RapidMiner Radoop

- After installing an extension, the «Extensions» folder in the «Operators» panel shows a new folder for each installed extension.

- There are also extensions that add a new «View». For example, the «Radoop» extension adds the «Hadoop Data» view.

- To manage and uninstall extensions go to: Extensions > Manage Extensions

- Another way to install extensions is to go directly to the Marketplace website at: https://marketplace.rapidminer.com

- Download the .jar file and place it in the «extensions folder»: /home/adelo/.RapidMiner/extensions/

- Restart RapidMiner and the extension will become available.

Importing data

- We start our new project by creating a new repository with two sub-folders:

- MyFirstPrediction

- data

- processes


- Then, to import the data we can click the button «Import Data» and look for the file or we can just Drag and Drop the file into RapidMiner.

- In the second step (format your columns) we can change some of the properties of the attributes (columns):

- Change type: Real, Integer, etc

- Rename column

- Change Role: The default for each column is «General Attribute». We can change the role to: id, label, weight...


Data preparation

Prepare and clean up the data.

In practice, data is rarely complete and free of issues.

Here we'll show you some of the operators that help to prepare and clean up the data.

Data loading via a process

- Define the read operator we want:

- Go to the Operators panel. In this case we will use the «Read Excel» operator, so we Drag and Drop this operator into our Process panel.

- Go to the Parameters panel and click «Import configuration wizard»

- Look for the file

- After the file is loaded into the «Import configuration wizard», you can make the configuration changes you want:

- Change the role of the Churn Attribute to «Label».

- Connect the port to the result port.

- Hit the Run process button (>) (F11)

- It is very important to note the difference between this method of loading the data and the one explained earlier in the section Machine Learning#Importing data. The difference is that this method loads the data into RAM; it doesn't store it in the local repository.

- Save the process into your local repository:

- File > Save process: then select the folder, or

- Go to the folder in the repository where you want to save the process: Right click > Store process here.

- Import/Export processes: this is only for saving a process to another folder (export) and then importing it into our Process panel.

- File > Import/Export process

- After you import a process, it will be in the Process panel but not saved in your local repository. You can save it by right-clicking the processes folder in your local repository > Store process here.

- Delete a Repository:

- When you delete it from the Repository panel, you are only deleting the link to it, not the files and folders inside it. If you want to delete them, you need to do it in the file system (~/.RapidMiner/repositories).

- We can also save the data into our repository through the process by adding a «Store» operator:

- Drag and Drop the «Store» operator into the Process panel (onto the line that connects the «Read Excel» operator to the result port).

- Then, in the Parameters panel, click the folder icon and indicate where you want to store the data.

- Then, hit the Run process button. That will store the data in your local repository.

Visualizing data

- Attribute = Column

- Regular attributes

- Special attributes:

- Label: When an attribute is marked as «Label», it is the attribute that we want our model to learn to predict. The regular attributes are used to make that prediction.

- Example = Row

- Example set = The entire data

- Data tab:

- When displaying data (in the Results View), we can sort the examples by an attribute by clicking on it: one click for ascending order, a second click for descending order and a third click to remove the sorting. By holding the Ctrl key, we can sort by multiple attributes.

- Statistics tab: RapidMiner does some automatic data discovery.

- We can display the data in different chart styles (Histogram, Scatter, Pie, etc).

- We can display multiple attributes in the same chart by clicking the attributes shown in the «Plots box» while holding the Ctrl key.


- To change the standard colors of the chart you can go to:

- Settings > Preferences:

- Color for minimum value in chart keys

- Color for maximum value in chart keys


- Advanced charts tab:

- Example (using the «customer-churn-data»):

- Drag and Drop the «Age» attribute to the «Domain dimension» (which represents the x axis) and the «Last transaction» attribute to the «Empty axis» («Numerical axis», which represents the y axis).

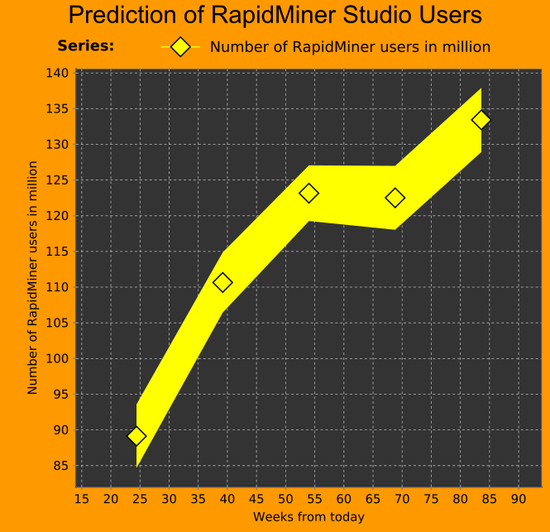

- With «Series: LastTransaction» selected (clicked) in the chart configuration box:

- Title: Number of RapidMiner users in million

- Visualization: Lines and shapes

- Some format configurations:

- Item shape: Diamond

- Color: Yellow

- Line style: solid

- Aggregation: Average

- Indicators:

- Indicator type: Band

- Indicator 1: Drag and Drop age to this field.

- Indicator 2: Drag and Drop age to this field.

- With «Domain dimension» selected in the chart configuration box:

- Title: Weeks from today

- With «Global configuration» selected in the chart configuration box:

- Chart title: Prediction of RapidMiner studio users

- Plot background: Change color to black

- Then you can export the plot:

- File > Print/Export Image

Data Mining with R - Luis Torgo

http://www.dcc.fc.up.pt/~ltorgo/DataMiningWithR/

The book is accompanied by a set of freely available R source files that can be obtained at the book's Web site. These files include all the code used in the case studies. They facilitate the "do-it-yourself" approach followed in this book. All data used in the case studies is available at the book's Web site as well. Moreover, we have created an R package called DMwR that contains several functions used in the book as well as the datasets already in R format. You should install and load this package to follow the code in the book (details on how to do this are given in the first chapter).

Installing the DMwR package

One thing that you surely should do is install the package associated with this book, which will give you access to several functions used throughout the book as well as datasets. To install it you proceed as with any other package:

When trying to install it on Ubuntu 18.04, the following error was generated:

Configuration failed because libcurl was not found. Try installing: * deb: libcurl4-openssl-dev (Debian, Ubuntu, etc)

After installing the package mentioned (sudo apt install libcurl4-openssl-dev), the installation completed successfully:

> install.packages('DMwR')

Chapter 3 - Predicting Stock Market Returns

We will address some of the difficulties of incorporating data mining tools and techniques into a concrete business problem. The specific domain used to illustrate these problems is that of automatic «stock trading systems». We will address the task of building a stock trading system based on prediction models obtained with daily stock quotes data. Several models will be tried with the goal of predicting the future returns of the S&P 500 market index (The Standard & Poor's 500, often abbreviated as the S&P 500, or just the S&P, is an American stock market index based on the market capitalizations of 500 large companies having common stock listed on the NYSE or NASDAQ). These predictions will be used together with a trading strategy to reach a decision regarding the market orders to generate.

This chapter addresses several new data mining issues, among which are

- How to use R to analyze data stored in a database,

- How to handle prediction problems with a time ordering among data observations (also known as time series), and

- An example of the difficulties of translating model predictions into decisions and actions in real-world applications.

The Available Data

In our case study we will concentrate on trading the S&P 500 market index. Daily data concerning the quotes of this security are freely available in many places, for example, the Yahoo finance site (http://finance.yahoo.com).

The data we will use is available through different methods:

- Reading the data from the book R package (DMwR).

- The data (in two alternative formats) is also available at http://www.dcc.fc.up.pt/~ltorgo/DataMiningWithR/datasets3.html

- The first format is a comma separated values (CSV) file that can be read into R in the same way as the data used in Chapter 2.

- The other format is a MySQL database dump file that we can use to create a database with the S&P 500 quotes in MySQL.

- Getting the Data directly from the Web (from Yahoo finance site, for example). If you choose to follow this path, you should remember that you will probably be using a larger dataset than the one used in the analysis carried out in this book. Whichever source you choose to use, the daily stock quotes data includes information regarding the following properties:

- Date of the stock exchange session

- Open price at the beginning of the session

- Highest price during the session

- Lowest price

- Closing price of the session

- Volume of transactions

- Adjusted close price

It is up to you to decide which alternative you will use to get the data. The remainder of the chapter (i.e., the analysis after reading the data) is independent of the storage schema you decide to use.

Reading the data from the DMwR package

The data is included in the DMwR package. So, after installing the DMwR package, it is enough to issue:

> library(DMwR)

> data(GSPC)

The first statement is only required if you have not issued it before in your R session. The second instruction will load an object, GSPC, of class xts. You can manipulate it as if it were a matrix or a data frame. Try, for example:

> head(GSPC)

Open High Low Close Volume Adjusted

1970-01-02 92.06 93.54 91.79 93.00 8050000 93.00

1970-01-05 93.00 94.25 92.53 93.46 11490000 93.46

1970-01-06 93.46 93.81 92.13 92.82 11460000 92.82

1970-01-07 92.82 93.38 91.93 92.63 10010000 92.63

1970-01-08 92.63 93.47 91.99 92.68 10670000 92.68

1970-01-09 92.68 93.25 91.82 92.40 9380000 92.40

Reading the Data from the CSV File

Reading the Data from a MySQL Database

Getting the Data directly from the Web

Handling Time-Dependent Data in R

The data available for this case study depends on time. This means that each observation of our dataset has a time tag attached to it. This type of data is frequently known as time series data. The main distinguishing feature of this kind of data is that order between cases matters, due to their attached time tags. Generally speaking, a time series is a set of ordered observations of a variable $Y$:

$$y_1, y_2, \ldots, y_{t-1}, y_t, y_{t+1}, \ldots, y_n$$

where $y_t$ is the value of the series variable $Y$ at time $t$.

The main goal of time series analysis is to obtain a model based on past observations of the variable, $y_1, y_2, \ldots, y_{t-1}, y_t$, which allows us to make predictions regarding future observations of the variable, $y_{t+1}, \ldots, y_n$. In the case of our stocks data, we have what is usually known as a multivariate time series, because we measure several variables at the same time tags, namely the Open, High, Low, Close, Volume, and AdjClose.

R has several packages devoted to the analysis of this type of data, and it has special classes of objects that are used to store time-dependent data. Moreover, R has many functions tuned for these types of objects, such as special plotting functions.

Among the most flexible R packages for handling time-dependent data are:

- zoo (Zeileis and Grothendieck, 2005) and

- xts (Ryan and Ulrich, 2010).

Both offer similar power, although xts provides a set of extra facilities (e.g., in terms of sub-setting using ISO 8601 time strings) to handle this type of data.

In technical terms the class xts extends the class zoo, which means that any xts object is also a zoo object, and thus we can apply any method designed for zoo objects to xts objects. We will base our analysis in this chapter primarily on xts objects.

To install «zoo» and «xts» in R:

install.packages('zoo')

install.packages('xts')

The following examples illustrate how to create objects of class xts:

> library(xts)

> x1 <- xts(rnorm(100), seq(as.POSIXct("2000-01-01"), len = 100, by = "day"))

> x1[1:5]

> x2 <- xts(rnorm(100), seq(as.POSIXct("2000-01-01 13:00"), len = 100, by = "min"))

> x2[1:4]

> x3 <- xts(rnorm(3), as.Date(c("2005-01-01", "2005-01-10", "2005-01-12")))

> x3

The function xts() receives the time series data in the first argument. This can be either a vector or, if we have a multivariate time series, a matrix. In the latter case, each column of the matrix is interpreted as a variable being sampled at each time tag (i.e., each row). The time tags are provided in the second argument. This needs to be a set of time tags in any of the existing time classes in R. In the examples above we have used two of the most common classes to represent time information in R: the POSIXct/POSIXlt classes and the Date class. There are many functions associated with these objects for manipulating date information, which you may want to check using the help facilities of R. One such example is the seq() function, which we have used before to generate sequences of numbers and which, as in the examples above, can also generate sequences of dates.

As you might observe in the above small examples, the objects may be indexed as if they were “normal” objects without time tags (in this case we see a standard vector sub-setting). Still, we will frequently want to subset these time series objects based on time-related conditions. This can be achieved in several ways with xts objects, as the following small examples try to illustrate:

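> x1[as.POSIXct("2000-01-04")]    # a single time tag given as a POSIXct object

> x1["2000-01-05"]                 # a single day given as an ISO 8601 date string

> x1["20000105"]                   # the same day in ISO 8601 basic format (no separators)

> x1["2000-04"]                    # all observations in April 2000

> x1["2000-02-26/2000-03-03"]      # from 26 Feb to 3 Mar 2000, both ends included

> x1["/20000103"]                  # everything up to 3 Jan 2000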
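To make the semantics of these ISO 8601 subsetting strings concrete, here is a minimal Python sketch that mimics a few of them on a plain list of (day, value) pairs. It is not how xts works internally. It only handles daily data and the forms used above: a single day, a whole month, and "start/end" ranges with an optional open start or end. The helpers `daily_series`, `day_bounds` and `subset` are hypothetical names chosen for illustration.

```python
from datetime import date, timedelta


def daily_series(start, values):
    """Pair each value with a consecutive calendar day starting at `start`
    (similar in spirit to seq(as.POSIXct(start), len = n, by = "day") in R)."""
    return [(start + timedelta(days=i), v) for i, v in enumerate(values)]


def day_bounds(spec):
    """Return the first and last day covered by one ISO 8601 piece:
    a day (YYYY-MM-DD or YYYYMMDD) or a whole month (YYYY-MM)."""
    digits = spec.replace("-", "")
    year, month = int(digits[:4]), int(digits[4:6])
    if len(digits) == 8:  # a single day
        day = date(year, month, int(digits[6:8]))
        return day, day
    # a whole month: from the 1st up to the day before the next month starts
    first = date(year, month, 1)
    next_month = date(year + month // 12, month % 12 + 1, 1)
    return first, next_month - timedelta(days=1)


def subset(series, spec):
    """Select the (day, value) pairs matching `spec`, e.g. "2000-01-05",
    "20000105", "2000-04", "2000-02-26/2000-03-03" or "/20000103".
    Both ends of a range are inclusive; an empty end means "unbounded"."""
    if "/" in spec:
        left, right = spec.split("/", 1)
        lo = day_bounds(left)[0] if left else date.min
        hi = day_bounds(right)[1] if right else date.max
    else:
        lo, hi = day_bounds(spec)
    return [(d, v) for d, v in series if lo <= d <= hi]


x1 = daily_series(date(2000, 1, 1), list(range(100)))  # 1 Jan to 9 Apr 2000
print([v for _, v in subset(x1, "/20000103")])  # [0, 1, 2]
print(len(subset(x1, "2000-04")))               # 9
```

Because 2000 is a leap year, the 100 daily values end on 9 April, so the "2000-04" query returns only nine observations, just as x1["2000-04"] does in R.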

Multiple time series can be created in a similar fashion as illustrated below:

> mts.vals <- matrix(round(rnorm(25),2),5,5)

> colnames(mts.vals) <- paste('ts',1:5,sep='')

> mts <- xts(mts.vals,as.POSIXct(c('2003-01-01','2003-01-04', '2003-01-05','2003-01-06','2003-02-16')))

> mts

> mts["2003-01",c("ts2","ts5")]

The functions index() and time() can be used to “extract” the time tags information of any xts object, while the coredata() function obtains the data values of the time series:

> index(mts)

> coredata(mts)

Defining the Prediction Tasks

Generally speaking, our goal is to have good forecasts of the future price of the S&P 500 index so that profitable orders can be placed on time.

What to Predict

The trading strategies we will describe in Section 3.5 assume that we obtain a prediction of the tendency of the market in the next few days. Based on this prediction, we will place orders that will be profitable if the tendency is confirmed in the future.

Let us assume that if the prices vary more than $p\%$, we consider this worthwhile in terms of trading (e.g., covering transaction costs). In this context, we want our prediction models to forecast whether this margin is attainable in the next $k$ days.

Please note that within these $k$ days we can actually observe prices both above and below this percentage. For instance, the closing price at time $t+k$ may represent a variation much lower than $p\%$, but it could have been preceded by a period of prices representing variations much higher than $p\%$ within the window $t \ldots t+k$.

This means that predicting a particular price for a specific future time might not be the best idea. In effect, what we want is to have a prediction of the overall dynamics of the price in the next $k$ days, and this is not captured by a particular price at a specific time.

We will describe a variable, calculated with the quotes data, that can be seen as an indicator (a value) of the tendency in the next $k$ days. The value of this indicator should be related to the confidence we have that the target margin $p$ will be attainable in the next $k$ days.

At this stage it is important to note that when we mention a variation in $p\%$, we mean above or below the current price. The idea is that positive variations will lead us to buy, while negative variations will trigger sell actions. The indicator we are proposing summarizes the tendency as a single value, positive for upward tendencies and negative for downward price tendencies.

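Let the daily average price be approximated by:

$$\bar{P}_i = \frac{C_i + H_i + L_i}{3}$$

where $C_i$, $H_i$ and $L_i$ are the close, high, and low quotes for day $i$, respectively.

Let $V_i$ be the set of $k$ percentage variations of today's close to the following $k$ days' average prices (often called arithmetic returns):

$$V_i = \left\{ \frac{\bar{P}_{i+j} - C_i}{C_i} \right\}_{j=1}^{k}$$

Our indicator variable is the total sum of the variations whose absolute value is above our target margin $p\%$:

$$T_i = \sum \left\{ v \in V_i : v > p\% \lor v < -p\% \right\}$$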
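A small worked example, using made-up numbers, may help. Take $k = 3$ and a target margin $p = 2.5\%$. Suppose today's close is $C_i = 100$ and the average prices of the next three days are 101, 103 and 97. The variations are measured with respect to today's close, and only those beyond ±2.5% enter the sum:

```latex
\begin{aligned}
V_i &= \left\{ \tfrac{101 - 100}{100},\ \tfrac{103 - 100}{100},\ \tfrac{97 - 100}{100} \right\}
     = \{\, 0.01,\ 0.03,\ -0.03 \,\} \\
T_i &= 0.03 + (-0.03) = 0
\end{aligned}
```

The 1% variation is below the margin and is ignored. The +3% and -3% variations cancel each other, which is exactly the kind of conflicting movement that produces values around zero.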

The general idea of the variable $T$ is to signal $k$-day periods that have several days with average daily prices clearly above the target variation. High positive values of $T$ mean that there are several average daily prices that are higher than today's close. Such situations are good indications of potential opportunities to issue a buy order, as we have good expectations that the prices will rise. On the other hand, highly negative values of $T$ suggest sell actions, given that the prices will probably decline. Values around zero can be caused by periods with "flat" prices or by conflicting positive and negative variations that cancel each other. The following function implements this simple indicator:

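library(quantmod)  # provides HLC(), Delt() and Next(), and loads xts

T.ind <- function(quotes, tgt.margin = 0.025, n.days = 10) {
  # daily average price: mean of the High, Low and Close quotes
  v <- apply(HLC(quotes), 1, mean)
  # r[i, x]: percentage variation of the average price from day i to day i + x
  # (Delt() computes the x-day variation, Next() aligns it with day i)
  r <- matrix(NA, ncol = n.days, nrow = NROW(quotes))
  for (x in 1:n.days) r[, x] <- Next(Delt(v, k = x), x)
  # sum of the variations whose absolute value exceeds the target margin
  # (the last n.days rows have no complete future window and give NA)
  x <- apply(r, 1, function(x) sum(x[x > tgt.margin | x < -tgt.margin]))
  # return an xts object when the input is one, otherwise a plain vector
  if (is.xts(quotes))
    xts(x, time(quotes))
  else x
}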

The target variation margin is set by default to 2.5% (tgt.margin = 0.025), and the number of future days to 10 (n.days = 10).
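For experimenting outside R, here is a minimal Python sketch of the same indicator, written with plain lists instead of xts objects. It follows the formulas in the text, measuring each variation with respect to the day's close. Note that the R function above instead measures it with respect to the day's average price, because Delt() is applied to v. The names `avg_prices`, `t_indicator` and `signal` are hypothetical. The `threshold` in `signal()` is also an assumption chosen only to illustrate the buy/sell/hold reading of $T$; it is not part of the book's code.

```python
def avg_prices(high, low, close):
    """Daily average price approximated as (C + H + L) / 3."""
    return [(h + l + c) / 3 for h, l, c in zip(high, low, close)]


def t_indicator(high, low, close, tgt_margin=0.025, n_days=10):
    """For each day i, sum the variations (avg[i + j] - close[i]) / close[i],
    j = 1..n_days, whose absolute value exceeds tgt_margin.
    Days without n_days of future quotes get None (NA in the R version)."""
    avg = avg_prices(high, low, close)
    result = []
    for i, c in enumerate(close):
        if i + n_days >= len(close):
            result.append(None)
            continue
        variations = [(avg[i + j] - c) / c for j in range(1, n_days + 1)]
        result.append(sum(v for v in variations if abs(v) > tgt_margin))
    return result


def signal(t_value, threshold=0.1):
    """Map a T value to an action; `threshold` is a hypothetical cut-off."""
    if t_value is None:
        return None
    if t_value > threshold:
        return "buy"
    if t_value < -threshold:
        return "sell"
    return "hold"


# High = Low = Close here, so the average price equals the close.
close = [100, 101, 103, 97]
t = t_indicator(close, close, close, n_days=3)
print(t)             # [0.0, None, None, None]
print(signal(t[0]))  # hold
```

With these quotes, the +3% and -3% variations cancel out and the 1% one is below the margin, so the first day gets $T = 0$.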

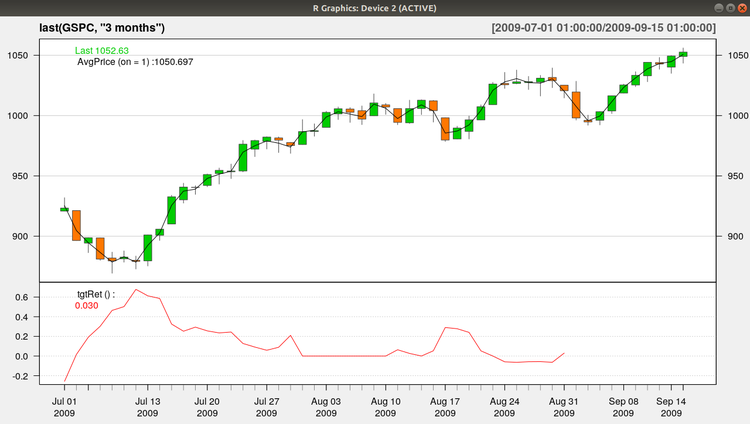

We can get a better idea of the behavior of this indicator in Figure 3.1, which was produced with the following code:

https://plot.ly/r/candlestick-charts/

https://stackoverflow.com/questions/44223485/quantmod-r-candlechart-no-colours

I believe this is the only library needed for this chart (it provides candleChart() and newTA()):

> library(quantmod)

> candleChart(last(GSPC, "3 months"), theme = "white", TA = NULL)

> avgPrice <- function(p) apply(HLC(p), 1, mean)

> addAvgPrice <- newTA(FUN = avgPrice, col = 1, legend = "AvgPrice")

> addT.ind <- newTA(FUN = T.ind, col = "red", legend = "tgtRet")

> addAvgPrice(on = 1)

> addT.ind()

Which Predictors?

We have defined an indicator ($T$) that summarizes the behavior of the price time series in the next $k$ days. Our data mining goal will be to predict this behavior. The main assumption behind trying to forecast the future behavior of financial markets is that it is possible to do so by observing the past behavior of the market.

More precisely, we are assuming that if in the past a certain behavior $p$ was followed by another behavior $f$, and if that causal chain happened frequently, then it is plausible to assume that this will occur again in the future; and thus if we observe $p$ now, we predict that we will observe $f$ next.

We are approximating the future behavior ($f$) by our indicator $T$.

We now have to decide on how we will describe the recent prices pattern ($p$ in the description above). Instead of using again a single indicator to describe these recent dynamics, we will use several indicators, trying to capture different properties of the price time series to facilitate the forecasting task.

The simplest type of information we can use to describe the past are the recent observed prices. Informally, that is the type of approach followed in several standard time series modeling approaches. These approaches develop models that describe the relationship between future values of a time series and a window of past q observations of this time series. We will try to enrich our description of the current dynamics of the time series by adding further features to this window of recent prices.
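As a sketch of the "window of past q observations" idea, the helper below turns a univariate series into a table. Each row holds the q most recent values, and the target is the value that follows them. A regression model could then be fitted on these pairs. `lag_windows` is a hypothetical name; this is an illustration of the general approach, not code from the book.

```python
def lag_windows(series, q):
    """Turn a series into (window, target) pairs: each window holds the q most
    recent values y[t-q+1], ..., y[t] and the target is the next value y[t+1]."""
    if q < 1:
        raise ValueError("q must be at least 1")
    windows, targets = [], []
    for t in range(q - 1, len(series) - 1):
        windows.append(series[t - q + 1 : t + 1])
        targets.append(series[t + 1])
    return windows, targets


X, y = lag_windows([10, 11, 12, 13, 14], q=3)
print(X)  # [[10, 11, 12], [11, 12, 13]]
print(y)  # [13, 14]
```

Extra features such as technical indicators would simply be appended as further columns to each window.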

Technical indicators are numeric summaries that reflect some properties of the price time series. Despite their debatable use as tools for deciding when to trade, they can nevertheless provide interesting summaries of the dynamics of a price time series. The number of technical indicators available can be overwhelming. In R we can find a very good sample of them, thanks to package TTR (Ulrich, 2009).

The indicators usually try to capture some properties of the prices series, such as if they are varying too much, or following some specific trend, etc.

In our approach to this problem, we will not carry out an exhaustive search for the indicators that are most adequate to our task. Still, this is a relevant research question, and not only for this particular application. It is usually known as the feature selection problem, and can informally be defined as the task of finding the most adequate subset of available input variables for a modeling task.

The existing approaches to this problem can usually be cast in two groups: (1) feature filters and (2) feature wrappers.

- Feature filters are independent of the modeling tool that will be used after the feature selection phase. They basically try to use some statistical properties of the features (e.g., correlation) to select the final set of features.

- The wrapper approaches include the modeling tool in the selection process. They carry out an iterative search process where at each step a candidate set of features is tried with the modeling tool and the respective results are recorded. Based on these results, new tentative sets are generated using some search operators, and the process is repeated until some convergence criteria are met that will define the final set.

We will use a simple approach to select the features to include in our model. The idea is to illustrate this process with a concrete example and not to find the best possible solution to this problem, which would require other time and computational resources. We will define an initial set of features and then use a technique to estimate the importance of each of these features. Based on these estimates we will select the most relevant features.